Automated test cases are essential for validating the functionality and reliability of software without manual intervention. Here are typical examples of automated test cases:

- Unit Test: Checks individual units or components of the software for correct behavior. For instance, a unit test might verify that a function correctly adds two numbers.

- Integration Test: Evaluates the interaction between different parts of the software to ensure they work together as expected. An example would be testing the process of user registration through an application's front end to its database.

- Functional Test: Focuses on the business requirements of an application. It might test a complete form submission process on a web application, ensuring that all steps from entering data to receiving a confirmation work correctly.

- Performance Test: Assesses the speed, responsiveness, and stability of the application under a certain load. An example is simulating multiple users accessing a web service simultaneously to ensure it can handle the traffic.

- Security Test: Identifies vulnerabilities in the software, protecting against attacks and unauthorized access. This could include testing for SQL injection vulnerabilities in a web application.

- UI/UX Test: Verifies that the user interface looks and functions as expected across different devices and browsers. For example, ensuring that a web page displays correctly and all buttons are functional on both desktop and mobile browsers.
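To make the first item on the list more concrete, here is a minimal sketch of a unit test for a function that adds two numbers. It uses Python's built-in `unittest` module; the `add` function and the test names are hypothetical and only serve as an illustration.

```python
import unittest


def add(a, b):
    """Hypothetical unit under test: return the sum of two numbers."""
    return a + b


class AddTests(unittest.TestCase):
    def test_adds_two_positive_numbers(self):
        self.assertEqual(add(2, 3), 5)

    def test_adds_negative_and_positive_number(self):
        self.assertEqual(add(-1, 1), 0)
```

If you save this as a file, running `python -m unittest` on that file should discover and execute both tests.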

Check also Automation Test Plan Template.

How to write test cases to make testing easy to automate?

Software developers are lazy, and it is a good thing! This sentence is commonly used to shock or wake up the audience at many conferences and events. And it is actually true - both parts. If it wasn’t for this laziness or, stating it more professionally, eagerness for optimization, progress within the IT world would be much slower.

The will and ability to automate repeatable tasks is at the core of programming. If you had to create a hundred identical objects or entities in your app, would you do it by hand? Or would you rather create a script that will do it for you? It is the same with creating tests and test cases. And this is where test automation comes in.

Read also: Test Case vs Test Scenario.

Test automation is the practice of using specific tools and scripts to automate the execution of the testing process for a given application. This rather self-explanatory definition gives us one piece of information that will be significant in this article.

“Specific tools and scripts” are the key words. Creating proper scripts and test cases is crucial to make test automation happen. Before we do that, let’s focus for a moment on why we would actually automate our tests.

- It saves you time and money - you can execute and re-execute a test script at any time, basically with the click of a button, and it reduces the man-hours needed for the testing effort.

- It makes your team members happy - they are not redoing the same tasks over and over again.

- It provides a safety net - automated tests are less prone to human error than manual ones.

- It increases test coverage - if you automate the most common test scenarios, you can spend your manual testing on the less common ones.

Things to do before you write automated test cases

As you already know from our other articles, test cases are not just floating around in the testing universe. They come from a test scenario or a test suite. It means that when deciding what you actually want to test, you need to create a high-level plan first.

A test scenario is a description of a user behaviour pattern. Usually such actions touch multiple functionalities at a time. From a test management perspective, it means that one test scenario will make you test several parts of your software.

After you identify the test scenarios, you need to decide how many tests should be automated. Why “how many” and not “whether all”? Well, even though automating all tests sounds efficient and smart, it is quite the opposite. Writing an automated test case for something that will occur only once per testing process is a waste of time. Here are a few pointers on how to decide whether automation is the best way:

- If the functionality covered by the given test is crucial from the business perspective

- If the functionality covered by the given test is used with high frequency

- If a test needs multiple data sets

- If a test tends to be influenced by human error

- If a test cannot be done manually or takes a lot of effort to cover manually

- If a test is highly repeatable and runs on multiple builds
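One of the pointers above, a test that needs multiple data sets, is a case where automation usually pays off quickly. The sketch below shows one possible way to run the same check against several data sets using `subTest` from Python's `unittest` module. The `is_valid_username` rule and the data in `USERNAME_CASES` are assumptions made up for this illustration.

```python
import unittest


def is_valid_username(name):
    """Hypothetical rule: 3 to 20 characters; letters, digits or underscores."""
    return 3 <= len(name) <= 20 and all(c.isalnum() or c == "_" for c in name)


# Each tuple is one data set: (input, expected result).
USERNAME_CASES = [
    ("test_user", True),
    ("user42", True),
    ("ab", False),        # too short
    ("a" * 21, False),    # too long
    ("bad name", False),  # contains a space
]


class UsernameValidationTests(unittest.TestCase):
    def test_username_rules(self):
        for name, expected in USERNAME_CASES:
            # subTest reports every failing data set separately
            # instead of stopping at the first one.
            with self.subTest(name=name):
                self.assertEqual(is_valid_username(name), expected)
```

Adding another data set is then a one-line change, whereas a manual tester would have to repeat the whole procedure.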

Another aspect you need to consider is the test automation tool itself. It is important to understand its pros and cons as well as its limitations. It is rather safe to assume that you will be using the same tool during the whole testing process, so you are preparing the test cases for that specific tool.

How to create a test case for automation testing?

Creating an automated test case is a slightly different job from creating a manual one. The key difference is the actual tester. A manual test case is created by a human for a human, so it needs some elements that we use for reporting or for keeping order in test execution, for example a title or a description. If you want to create automated tests, on the other hand, best practices suggest including the following elements:

-

Preconditions: you need to describe what are the entry requirements or the entry state of the application that would allow you to run the test. For example if and which browser you use, do you need to log in or enter a specific subpage.

-

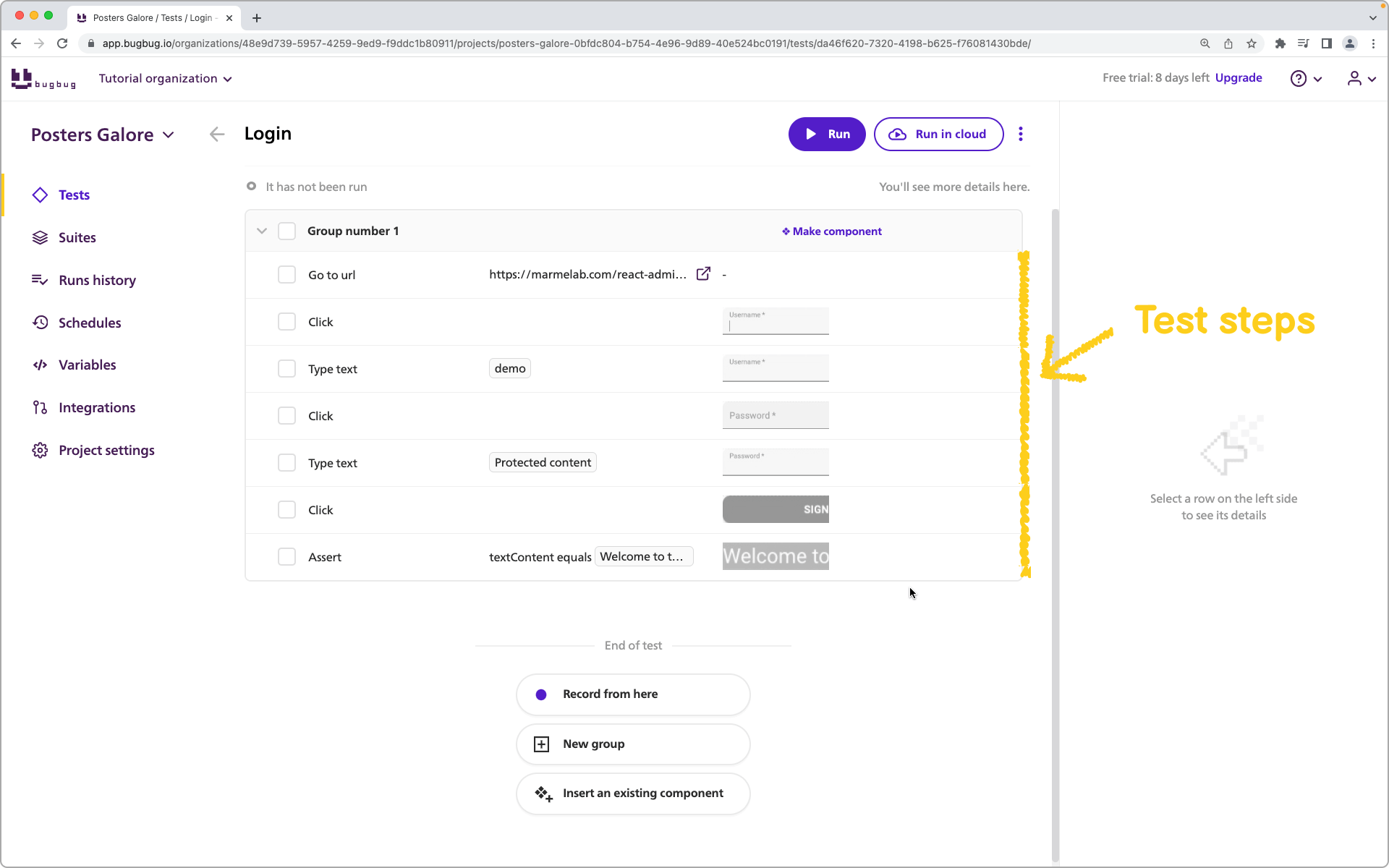

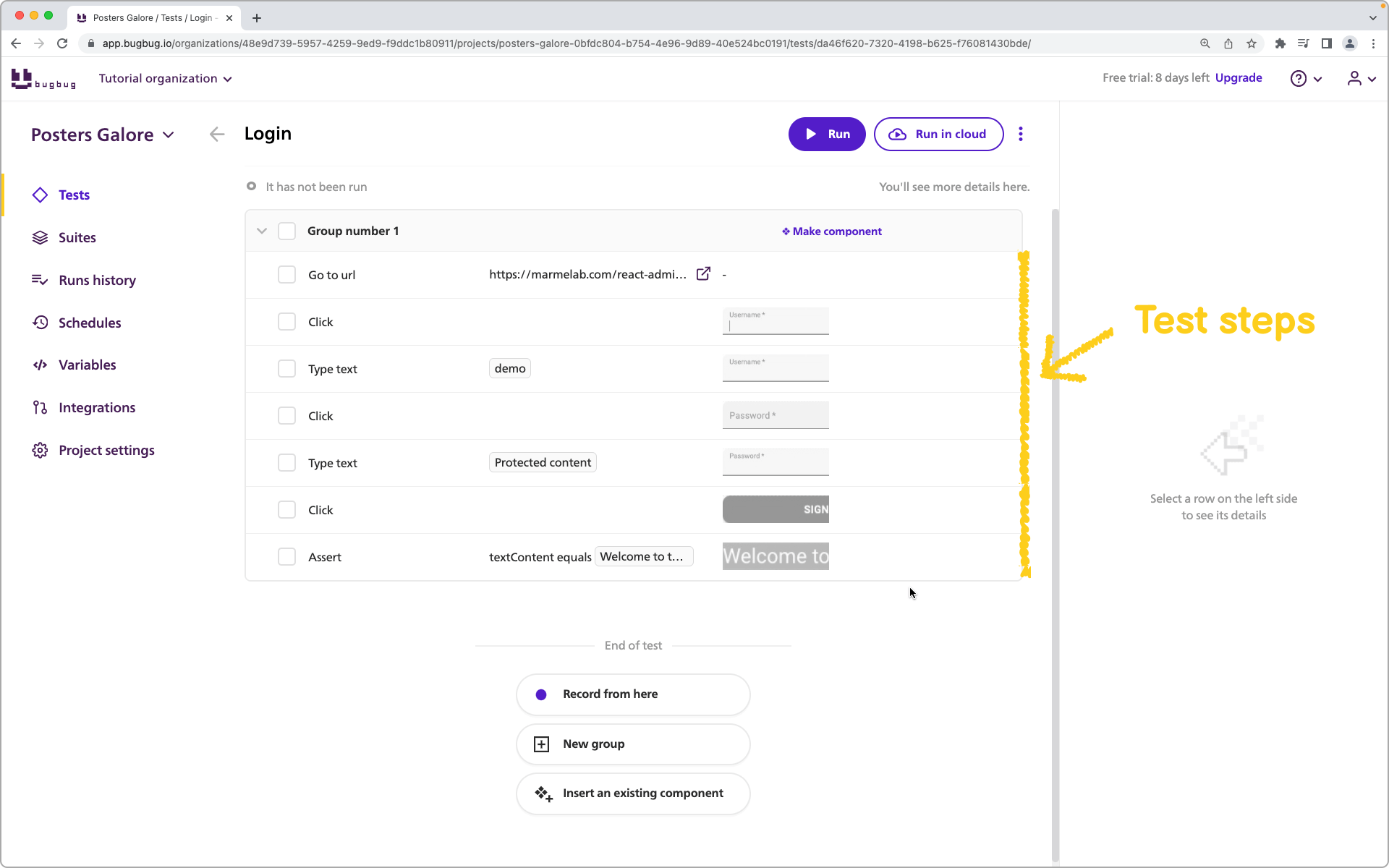

Test steps: the instruction on what actions are required to reach the desired state of the app as well as the necessary test data.

-

Sync and wait statements: this one has a little asterisk on it. In the “good ol’ days” you needed to define when and how much time is necessary for the application to do its job before running each stage of the test. If your tests were faster than your app was, the results got highly inaccurate. Right now, modern automation tools like BugBug rather than sync and wait, they react to what is actually happening in the app. You define what elements should be visible, and if they occur, the test proceeds.

-

Comments: they enable understanding and knowledge transfer by describing the approach of the current software test.

-

Debugging statements: They are used to aid in identifying and troubleshooting issues or errors that occur during the execution of automated tests. They might be log entries or warnings in your test automation tool. Try to be as thorough here as you can since it will help the devs to debug the issue.

-

Output statements: They are used to report the outcome of each test case. Here you can place the mythical “test failed successfully” message for a negative test.

Do note the important difference between the last two bullets. The debugging statements are invoked during the test runs - if a bug occurs. The output ones are logged after every completed test.

Above: Test steps - part of an automated test case, created in BugBug testing tool.
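If you write your automated tests as code rather than in a tool like BugBug, the elements described above could look roughly like the sketch below. It is only an illustration under stated assumptions: `FakeApp` is a made-up in-memory stand-in for a real application, and `wait_until` is a hypothetical helper that polls for a condition instead of sleeping for a fixed time. It is not how BugBug or any particular tool implements waiting.

```python
import logging
import time

log = logging.getLogger("login_form_test")


class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.page = "home"
        self.logged_in = False
        self._form_ready_at = None

    def click_login(self):
        # Simulate a form that becomes visible shortly after the click.
        self._form_ready_at = time.monotonic() + 0.05

    def form_visible(self):
        return (self._form_ready_at is not None
                and time.monotonic() >= self._form_ready_at)


def wait_until(condition, timeout=2.0, interval=0.01):
    """Poll until condition() is true or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()


def run_login_form_test(app):
    # Preconditions: the user is logged out and on the home page.
    if app.logged_in or app.page != "home":
        log.warning("Precondition failed: page=%s, logged_in=%s",
                    app.page, app.logged_in)  # debugging statement
        return False

    # Test step: open the login form.
    app.click_login()

    # Sync and wait: proceed as soon as the form is visible.
    if not wait_until(app.form_visible):
        log.warning("Login form did not appear in time")  # debugging statement
        return False

    log.info("Login form displayed")  # output statement
    return True
```

Calling `run_login_form_test(FakeApp())` returns `True` once the simulated form appears. The polling helper means the test waits only as long as the app actually needs.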

Best practices in creating test cases to automate the process

We have a few best practices for you. The Fab Five of test automation.

- KISS them! The "keep it simple, stupid" rule is in place here as well. Create your test cases in a way that everyone involved will understand them. You need to be sure that what you write and how you write it is clear for the test team. If you have the time and resources to do so, it would be wise to create a manual or a guide that will keep everyone on the same page. What would you put there? A glossary or a list of abbreviations you will use in your cases.

- Start with the scenario. We mentioned this one many times in our articles. A good test scenario will help you to create good test cases. You will be able to select the right functionalities, the right order etc. A good test automation ROI starts with the scenario. If you do not put much effort into it, you will have a tough time making the right test cases.

- General and precise - tightrope and feather. This one comes with experience. Achieving perfect balance between being general and detailed is like walking a tightrope with a feather. As the creator of the test case, you need to feel and understand how much information allows you to test a functionality and how much will be, well... too much. There is no point in limiting your testers' flexibility. Also, limiting the information is a good way to make test cases more adaptable to software changes. For example, if you write "hover mouse to upper right corner over the login button" and in the future the login button is moved to the left, the test case will need to be changed. Whereas "hover mouse over the login button" is much more universal.

- Encourage exploration. We start with a shocker - nobody is perfect! Teams usually create test cases quickly and tend to follow patterns and past experience. This way the test coverage of an app never reaches 100%. And it never will. The thing is - assume that you have some test cases missing and start exploring. Test cases serve to verify the bugs you thought might occur. What about those that slipped your mind while developing? Make sure your scenario and your automation plan have the flexibility to add and change test cases. Create a culture of test case development, if you will.

- Independence! This is a good practice that we teach to all BugBug clients. It is important that you design your test cases so that they are independent of each other. If your test A needs test B to run properly, the risk of false results increases. You can also unnecessarily lengthen the testing time, since linking tests together prevents them from running in parallel.
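The independence rule from the last point can be illustrated with a short sketch. In Python's `unittest`, the `setUp` method runs before every test, so each test can build its own starting state instead of relying on what another test left behind. The `Cart` class here is a hypothetical example object, not part of any real product.

```python
import unittest


class Cart:
    """Hypothetical object under test."""

    def __init__(self):
        self.items = []

    def add(self, item):
        self.items.append(item)

    def remove(self, item):
        self.items.remove(item)


class CartTests(unittest.TestCase):
    def setUp(self):
        # Runs before every test: each test gets its own fresh cart.
        self.cart = Cart()

    def test_add_item(self):
        self.cart.add("book")
        self.assertEqual(self.cart.items, ["book"])

    def test_remove_item(self):
        # Builds its own starting state instead of relying on test_add_item.
        self.cart.add("book")
        self.cart.remove("book")
        self.assertEqual(self.cart.items, [])
```

Because neither test depends on the other, they can run in any order, alone, or in parallel, and a failure in one does not cause a false failure in the other.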

Example cases for test automation

The following phrase is best read using your best “shopping channel impersonation”. Give it a shot!

We have an exclusive offer for our most dedicated readers! We will give you example test cases for not one, not two, but three, yes you read it right THREE! types of testing.

Fun, wasn’t it? 🙂

Coming back to being test automation pros. We actually did prepare three test cases for you.

- Functional testing. As you remember, functional testing checks if a specific functionality works correctly.

Preconditions: The user is not logged in. The app is on its home page.

Test steps: Click the login button. Wait for the form to appear. Enter “test_user” as the username and “test_pa$$word” as the password. Click the “Login” button.

Comments: This is the base test for user authentication.

Debugging statements: If the user was not logged in correctly, log “user authentication failed” and, if there were any prompts at the login form, copy them to the log.

Output statements: For a passed test, log “User authentication successful”.

An example of a passed test with a proper output statement.
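For readers who script their tests, the functional test case above could be sketched in code as follows. This is an illustration under assumptions, not a BugBug export: `FakeApp` is a hypothetical in-memory stand-in for the application, and its method names and the prompt text are invented. A real test would drive a browser instead.

```python
import logging
import unittest

log = logging.getLogger("functional.login")


class FakeApp:
    """Hypothetical in-memory stand-in for the application under test."""

    USERS = {"test_user": "test_pa$$word"}

    def __init__(self):
        self.page = "home"
        self.logged_in = False
        self.form_visible = False
        self.prompts = []

    def click_login_button(self):
        self.form_visible = True

    def submit_login(self, username, password):
        if self.USERS.get(username) == password:
            self.logged_in = True
        else:
            self.prompts.append("Invalid username or password")


class LoginFunctionalTest(unittest.TestCase):
    def setUp(self):
        # Preconditions: the user is not logged in, the app is on its home page.
        self.app = FakeApp()
        self.assertFalse(self.app.logged_in)
        self.assertEqual(self.app.page, "home")

    def test_user_can_log_in(self):
        # Comment: this is the base test for user authentication.
        # Test steps.
        self.app.click_login_button()
        self.assertTrue(self.app.form_visible, "login form did not appear")
        self.app.submit_login("test_user", "test_pa$$word")

        # Debugging statement: logged only when something went wrong.
        if not self.app.logged_in:
            log.error("user authentication failed; prompts: %s",
                      self.app.prompts)
        self.assertTrue(self.app.logged_in)

        # Output statement: logged for a passed test.
        log.info("User authentication successful")
```

The mapping is deliberately one to one: preconditions live in `setUp`, the steps and comments in the test method, and the debugging and output statements are separate log calls.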

- Integration testing. Keeping it short - integration testing aims to verify that different parts of the software, or the software and an external service, are able to communicate and cooperate properly.

Preconditions: The user is not logged in. The app is on its home page.

Test steps: Click the login button. After the form appears, click the “Login via social media” button. Check the URL for /profile and check the content for “Welcome test_user”.

Comments: Verify that the user is on the proper URL after social login authentication.

Debugging statements: Log the proper information if either of the two checks fails: “Wrong URL” if the user is not on “/profile” and “Wrong greeting” if “Welcome test_user” is not visible.

Output statements: For a passed test, log “Transfer to profile successful”.

An example of a failed test with proper debugging statements.

- Regression testing. Remember? The one you perform to check that new functionalities are not interfering with the old ones.

Preconditions: The user is not logged in. The app is on its home page.

Test steps: Click the login button. Wait for the form to appear. Enter “test_user” as the username and “test_pa$$word” as the password. Click the “Login” button. Check that the welcome animation is launched and that the user is then on the “/profile” URL.

Comments: Verify that the user sees the animation and then is directed to the profile. The profile should not have the greeting anymore.

Debugging statements: Log the proper information if any of the failure conditions occur: “no animation visible” if there is no animation, “wrong URL” if the user is not on the “/profile” URL, “greeting visible” if the “Welcome test_user” greeting is visible.

Output statements: For a passed test, log “Transfer to profile successful”.

Summary - creating cases for automated testing process

Automated test cases require a dedicated tool, ideally one that works regardless of the programming language your application is written in. They allow you to quickly run tests over and over again, for example after every build or sprint your team completes. They reduce testing time. Time that you can spend writing code and completing your product in a more efficient way.

Manual and automated test cases have some common elements, but there are six key elements that should be included if you are using automation. Those are: preconditions, test steps, sync and wait statements, comments, debugging statements and output statements. Make sure you check our best practices and examples above (mostly speaking to those readers who tend to start the article from the summary).

Running automated tests instead of manual ones is the more efficient way most of the time. There are some tests that need the manual approach, but this would be a topic for a completely different article.