- General AI Model Evaluation Methods

- What Does Accuracy Mean in Software Testing?

- False Positives vs. False Confidence

- Why AI Explainability Is Non-Negotiable for QA Teams

- What Is Bias in AI Models

- When AI Should Not Decide Test Priority

- What Responsible AI Means

- Conclusion: AI Should Support Testers, Not Replace Judgment

- Where BugBug Fits In

AI has moved fast in software testing. In just a few years, tools that once focused on deterministic automation now promise test generation, failure prediction, self-healing scripts, and intelligent prioritization.

For QA teams, this creates a familiar tension: curiosity mixed with skepticism.

On one hand, AI can reduce repetitive work and surface useful signals. On the other, opaque decisions, hallucinated insights, and overconfident dashboards introduce new risks into an already fragile part of the software development lifecycle.

This article is not about whether AI belongs in testing. It is about how to evaluate AI responsibly, using criteria that make sense for QA teams operating under real release pressure rather than for machine learning labs chasing benchmark scores. Model evaluation is a systematic process for deciding whether an AI model is suitable for deployment, safe enough to use, or worth further investment.

General AI Model Evaluation Methods

Before AI systems are embedded into testing tools, CI pipelines, or production workflows, they should be evaluated at the model level, independently of any specific vendor claims. This is especially important with generative AI and large language models, where impressive outputs can mask structural weaknesses.

At a high level, AI model evaluation rests on three principles: performance, explainability, and fairness. These apply regardless of whether the model is used for test generation, prioritization, analysis, or reporting.

Performance Evaluation for the Given Task

The first question is whether the model performs well for its given task — not in general, but in context.

Key considerations include:

- Task type (classification, generation, ranking, summarization)

- Whether the model is evaluated on the same kinds of data it will encounter after deployment

- How consistently the model performs when inputs vary slightly

Models trained on very large datasets can look robust in evaluation and still fail under realistic conditions when the evaluation task and data do not match real usage.
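The consistency check from the list above can be sketched in a few lines. This is a minimal sketch, assuming the model can be called as a plain function from input text to a label; `consistency_rate`, `light_perturbations`, and the two toy classifiers are hypothetical, and real perturbations should mirror the variation your tool actually sees.

```python
from typing import Callable, List, Sequence


def consistency_rate(
    predict: Callable[[str], str],
    inputs: Sequence[str],
    perturb: Callable[[str], List[str]],
) -> float:
    """Share of inputs whose prediction stays identical across every perturbed variant."""
    if not inputs:
        raise ValueError("need at least one input")
    stable = 0
    for text in inputs:
        baseline = predict(text)
        if all(predict(variant) == baseline for variant in perturb(text)):
            stable += 1
    return stable / len(inputs)


def light_perturbations(text: str) -> List[str]:
    # Cosmetic variants that should not change what a failure description means
    return [text.lower(), text.upper(), f"  {text}  ", text.replace(" ", "  ")]


def fragile(text: str) -> str:
    # Hypothetical classifier with a case-sensitive keyword match
    return "payment" if "Checkout" in text else "other"


def robust(text: str) -> str:
    # Same rule, but normalizes case before matching
    return "payment" if "checkout" in text.lower() else "other"


samples = ["Checkout fails on submit", "Login page loads"]
fragile_score = consistency_rate(fragile, samples, light_perturbations)  # 0.5
robust_score = consistency_rate(robust, samples, light_perturbations)    # 1.0
```

Both rules give the same answer on the unmodified inputs; only the perturbation check reveals that one of them breaks when the text is lowercased.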

Data-Centered Evaluation: Sources and Representation

Many AI failures originate not in model training, but in data selection.

Evaluation should examine:

- Whether training data is diverse and representative

- Which data sources dominate the dataset

- Whether minority groups are underrepresented or oversimplified

Lack of diversity often leads to out-group homogeneity bias, where the model treats distinct cases as interchangeable. This can surface as stereotyping bias, gender bias, or reinforcement of harmful stereotypes, even when the task appears neutral.
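One way to make these checks concrete is a representation report over whatever grouping matters for the task. This is a sketch, assuming each training example carries a group label (here a hypothetical platform label); the 5% threshold is an arbitrary placeholder, not a recommended value.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def representation_report(
    labels: Iterable[str],
    expected_groups: Iterable[str] = (),
    min_share: float = 0.05,
) -> Tuple[Dict[str, float], List[str]]:
    """Share of the dataset held by each group, plus the groups below min_share.

    A group with zero examples cannot show up in the data on its own, so
    expected_groups lets you declare groups that should be present.
    """
    counts = Counter(labels)
    for group in expected_groups:
        counts.setdefault(group, 0)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    shares = {group: n / total for group, n in counts.items()}
    underrepresented = sorted(g for g, share in shares.items() if share < min_share)
    return shares, underrepresented


# Hypothetical training set for a failure classifier, labelled by platform
labels = ["web"] * 90 + ["mobile"] * 8 + ["api"] * 2
shares, flagged = representation_report(
    labels, expected_groups=["web", "mobile", "api", "accessibility"]
)
```

Here one source dominates (90% web) and two groups fall below the threshold, one of them entirely absent, which is exactly the kind of gap that raw accuracy numbers hide.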

Bias Detection and Measurement

AI model evaluation must explicitly look for potential biases, not assume neutrality.

Common forms include:

- Measurement bias, where labels or signals are systematically skewed

- Confirmation bias, where the model reinforces dominant patterns

- Bias amplification during inference, especially in generative systems

Quantitative fairness metrics can help, but they are not sufficient on their own. Metrics must be interpreted in relation to task impact, affected users, and risk tolerance — particularly when outputs may influence decisions affecting minority groups.
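As one example of a quantitative fairness metric, the sketch below computes an equal-opportunity gap: the difference in recall (true-positive rate) between groups. It assumes labelled records of the form (group, real defect?, flagged?); the group names and counts are hypothetical. The number alone does not say whether the model is acceptable, which is why it has to be read against task impact and risk tolerance.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Record = Tuple[str, bool, bool]  # (group, actually_defective, flagged_by_model)


def recall_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """True-positive rate per group: of the real defects in a group, how many were flagged."""
    positives: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for group, actual, flagged in records:
        if actual:
            positives[group] += 1
            if flagged:
                hits[group] += 1
    return {group: hits[group] / n for group, n in positives.items()}


def equal_opportunity_gap(records: Iterable[Record]) -> float:
    """Largest difference in recall between any two groups (0.0 means parity)."""
    recalls = recall_by_group(records)
    if not recalls:
        raise ValueError("no positive examples in any group")
    return max(recalls.values()) - min(recalls.values())


records = (
    [("happy_path", True, True)] * 9 + [("happy_path", True, False)] * 1
    + [("edge_case", True, True)] * 4 + [("edge_case", True, False)] * 6
)
gap = equal_opportunity_gap(records)
```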

Explainability and Interpretability

Making AI systems explainable is critical once models leave research environments and enter real products.

Evaluation should ask:

- Can humans understand why a model produced a specific output?

- Can decisions be traced back to inputs or training signals?

- Are explanations stable across similar inputs?

Explainability is especially important for organizations operating under regulatory compliance constraints, where automated decisions must be justified and auditable.
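The stability question above can be checked mechanically once a tool exposes per-feature attributions. A sketch, assuming attributions arrive as a name-to-weight mapping (the feature names are hypothetical): compare the top-k drivers for two inputs that should be explained the same way, using Jaccard overlap.

```python
from typing import Dict, Set

Attributions = Dict[str, float]  # feature name -> contribution to one prediction


def top_k_features(attributions: Attributions, k: int) -> Set[str]:
    """Names of the k features with the largest absolute contribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def explanation_stability(a: Attributions, b: Attributions, k: int = 3) -> float:
    """Jaccard overlap of the top-k features behind two predictions (1.0 = same drivers)."""
    top_a, top_b = top_k_features(a, k), top_k_features(b, k)
    union = top_a | top_b
    return len(top_a & top_b) / len(union) if union else 1.0


# Hypothetical attributions for two near-identical commits
commit_1 = {"files_changed": 0.60, "recent_failures": 0.30, "author_tenure": -0.05}
commit_2 = {"files_changed": 0.55, "recent_failures": 0.35, "author_tenure": -0.04}
score = explanation_stability(commit_1, commit_2, k=2)
```

A score that swings toward 0.0 for inputs a tester would consider equivalent is a sign the explanations cannot be relied on, even if the predictions themselves agree.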

From Evaluation to Deployment

A final, often overlooked step is evaluating how model behavior changes after deployment.

Models that perform well in isolation may degrade when:

- Integrated with new data sources

- Exposed to different input distributions

- Used by non-expert users

Responsible organizations treat AI model evaluation as a continuous process, revisiting assumptions as models evolve, data changes, and usage expands.
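A common way to catch a shifted input distribution after deployment is the population stability index (PSI). A minimal sketch, assuming you log a numeric input feature both at evaluation time and in production; the feature, bin edges, and epsilon are placeholders. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but those cut-offs are conventions, not guarantees.

```python
import bisect
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: Sequence[float], eps: float) -> List[float]:
    """Fraction of values in each bin; len(edges) cut points give len(edges) + 1 bins."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    # Floor empty bins at eps so the log term below stays finite
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(
    reference: Sequence[float], live: Sequence[float], edges: Sequence[float], eps: float = 1e-4
) -> float:
    """PSI = sum over bins of (live - ref) * ln(live / ref); 0.0 means identical bin shares."""
    ref = _bin_shares(reference, edges, eps)
    cur = _bin_shares(live, edges, eps)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical input feature, e.g. lines changed per commit, binned at fixed cut points
reference = list(range(10))  # what the model was evaluated on
live = [8, 9] * 5            # what it sees after deployment
edges = [2.5, 5.0, 7.5]
drift = population_stability_index(reference, live, edges)
```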

Why This Matters for QA and Engineering Teams

Even when QA teams do not train models themselves, understanding these evaluation methods helps them:

- Ask better questions about AI-powered tools

- Recognize bias and risk earlier

- Avoid over-trusting opaque systems

Ultimately, AI models should be judged not by how impressive they appear, but by how reliably and fairly they behave in the environments where teams depend on them.

What Does Accuracy Mean in Software Testing?

Bottom line: In QA, accuracy is not a model metric. It’s the ability to trust test results when a release decision is on the line.

Many AI-powered testing tools talk about accuracy in machine learning terms: prediction rates, confidence scores, or pattern recognition quality. In machine learning, model performance is typically measured using evaluation metrics like accuracy, precision, recall, and F1-score to assess how well a model predicts outcomes. Those metrics matter to data scientists, but they don’t answer the questions testers actually care about.

In software testing, accuracy means:

- Did the test catch a real defect?

- Can we reproduce it?

- Will the signal remain stable tomorrow?

- Can we confidently ship based on this result?

In classification tasks, accuracy is the share of all predictions that were correct; it says nothing about which predictions were wrong.

A tool can be “accurate” by ML standards and still be unreliable in practice.
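A small worked example shows how that happens. The numbers below are invented for illustration: when real regressions are rare, a tool that misses most of them still scores close to 99% accuracy, and only recall exposes the problem.

```python
from typing import Dict, Sequence


def qa_metrics(actual: Sequence[bool], flagged: Sequence[bool]) -> Dict[str, float]:
    """actual[i]: run i contained a real regression; flagged[i]: the tool reported a failure."""
    if len(actual) != len(flagged) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    pairs = list(zip(actual, flagged))
    tp = sum(1 for a, f in pairs if a and f)      # real regressions caught
    fp = sum(1 for a, f in pairs if f and not a)  # false alarms
    fn = sum(1 for a, f in pairs if a and not f)  # real regressions missed
    tn = len(pairs) - tp - fp - fn
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical month of CI: 1,000 runs, 10 with real regressions.
# The tool catches 2 of them and raises 3 false alarms.
actual = [True] * 10 + [False] * 990
flagged = [True] * 2 + [False] * 8 + [True] * 3 + [False] * 987
metrics = qa_metrics(actual, flagged)
# accuracy 0.989, precision 0.4, recall 0.2
```

Eight of ten real regressions slipped through, yet the headline accuracy barely moved.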

Why ML Accuracy Metrics Don’t Translate to QA

Machine learning models are evaluated in controlled conditions. QA happens in unstable ones.

Test environments change. Feature flags flip. Test data evolves. UI selectors break. CI pipelines introduce timing issues. An AI model trained on historical data can appear accurate while quietly drifting away from present-day risk.

This creates a mismatch:

- ML accuracy optimizes for correctness on past data

- QA accuracy requires reliability under future uncertainty

A testing tool that misses one critical regression is more dangerous than a noisy tool that fails loudly and predictably.

What Accuracy Looks Like for QA Teams

From a practitioner’s point of view, accuracy is a combination of qualities:

- Correct defect detection – failures correspond to real issues

- Result stability – outcomes don’t change without a meaningful reason

- Reproducibility – failures can be validated across environments

- Predictable failure modes – when the tool is wrong, it’s wrong in understandable ways

The reliability of the tool's outputs is what makes them usable: teams have to trust and interpret a result before they can act on it. Model evaluation, the process of assessing how well an AI system performs on a specific task, should therefore test for these properties and not only for headline metrics.

If an AI system improves dashboards but erodes these properties, it is reducing quality — not improving it.
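Result stability and reproducibility can be measured from data most CI systems already keep. A sketch, assuming you can export (test, commit, pass/fail) tuples from your pipeline history; the test names and SHAs are made up.

```python
from collections import defaultdict
from typing import DefaultDict, Iterable, List, Set, Tuple

Run = Tuple[str, str, bool]  # (test_name, commit_sha, passed)


def unstable_tests(runs: Iterable[Run]) -> List[str]:
    """Tests that both passed and failed on the same commit.

    "Same commit" is a proxy for "no meaningful reason to change"; it ignores
    environment changes, so treat the result as a list to investigate.
    """
    outcomes: DefaultDict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) > 1})


history = [
    ("checkout_total", "a1f3", True),
    ("checkout_total", "a1f3", False),  # flipped with no code change
    ("login_redirect", "a1f3", True),
    ("login_redirect", "b7c2", False),  # failed on a different commit: a real signal
]
flaky = unstable_tests(history)
```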

False Positives vs. False Confidence

Bottom line: QA teams know how to handle false positives. False confidence is the real danger.

Traditional automation has always produced noise. Flaky tests, environment hiccups, timing issues — these are familiar problems. False positives are visible, debuggable, and uncomfortable enough that teams learn to manage them.

AI changes the failure mode. Instead of loud, visible noise, teams increasingly receive confident conclusions, so interpreting evaluation results correctly becomes crucial: misreading them leads to misplaced trust in the model's performance and to higher risk. Careful evaluation is what trust in an AI system should rest on.

How AI Introduces False Confidence

AI-powered testing tools often wrap results in confident language and automated decisions:

- “Low-risk change detected”

- “No regression impact expected”

- “Failure automatically resolved”

These summaries feel authoritative even when they rest on weak or incomplete signals. Decision makers need to keep human oversight in place and read AI conclusions critically. Good explainability should bring user trust in line with the system's actual reliability, not simply increase it.

Other common patterns include:

- Automatically dismissing failures without clear audit trails

- Reprioritizing or skipping tests silently

- Abstracting raw results into confidence scores instead of evidence

Human-in-the-loop evaluation involves real people reviewing AI outputs to assess accuracy or relevance, providing an essential check on automated decisions.

None of this removes risk. It just hides it.
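One countermeasure is to make silent dismissal structurally impossible. The sketch below is a hypothetical design, not any vendor's API: every automated dismissal must carry evidence and a named actor, and AI-made decisions can be listed for human review. The `"ai:"` prefix convention is an assumption for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Dismissal:
    test_name: str
    reason: str
    evidence: Tuple[str, ...]  # links to logs, screenshots, rerun IDs
    decided_by: str            # "ai:<component>" or a human username
    at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class DismissalLog:
    """Append-only record of dismissed failures; nothing is dismissed without evidence."""

    def __init__(self) -> None:
        self._entries: List[Dismissal] = []

    def dismiss(
        self, test_name: str, reason: str, evidence: Sequence[str], decided_by: str
    ) -> Dismissal:
        if not evidence:
            raise ValueError(f"refusing to dismiss {test_name!r} without evidence")
        entry = Dismissal(test_name, reason, tuple(evidence), decided_by)
        self._entries.append(entry)
        return entry

    def decided_by_ai(self) -> List[Dismissal]:
        """Entries a human should be able to review in a release meeting."""
        return [e for e in self._entries if e.decided_by.startswith("ai:")]


log = DismissalLog()
log.dismiss("checkout_total", "timeout on payment sandbox", ["rerun #42 passed"], "ai:triage")
```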

Why False Confidence Is So Dangerous

False confidence shows up exactly when QA vigilance matters most:

- Late-stage releases

- High-frequency CI pipelines

- Small teams relying heavily on automation

In these scenarios, understanding how an AI model reaches its decisions is crucial. Transparency about how conclusions are reached helps teams avoid misplaced trust and interpret results correctly.

When results look “handled,” fewer people ask questions. Over time, teams stop interrogating outcomes — not because the tool is accurate, but because it sounds certain.

That’s not quality. That’s misplaced trust.

Human-in-the-loop evaluation helps build trust in AI systems by ensuring stakeholders understand how models were tested and what the results mean.

Why AI Explainability Is Non-Negotiable for QA Teams

Bottom line: If a tester can’t explain an AI decision, they can’t defend it.

Every test result eventually faces a human conversation: a stand-up, a release review, a post-incident analysis. When artificial intelligence enters the workflow, the need for explainability, trust, and transparency in these systems becomes critical. Accountability doesn’t disappear — it becomes harder to maintain.

Explainable AI (XAI) aims to provide humans with the ability to understand the reasoning behind AI decisions.

What Explainability Means in Testing

In QA, explainability is simple and practical. At any point, teams must be able to answer:

- What did the tool do?

- Why did it do it?

- What information was it based on?

Local explanations help clarify why an AI model made a specific decision for a particular instance, increasing transparency and trust.

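Explainability is essential in high-stakes domains. Attribution tools such as SHAP or LIME can support it by producing post-hoc explanations of how inputs influenced an output, but they approximate a model's reasoning rather than guarantee it.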

Explainability is not about exposing model internals. It’s about connecting decisions to observable signals testers already understand.
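For a simple scoring model, an explanation that answers all three questions can be computed directly. A sketch, assuming a hypothetical linear risk score over signals testers already recognize; for non-linear models, attribution libraries such as SHAP approximate the same idea.

```python
from typing import Dict

Signals = Dict[str, float]


def explain_linear_score(weights: Signals, baseline: Signals, observed: Signals) -> Signals:
    """Contribution of each signal to a linear risk score, relative to a baseline input.

    With a linear model, independent features, and a baseline equal to the
    average input, these contributions match SHAP values:
    weight * (observed - baseline). Sorted so the strongest driver comes first.
    """
    contributions = {
        name: w * (observed[name] - baseline[name]) for name, w in weights.items()
    }
    return dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))


# Hypothetical risk model for "should this test run on this commit?"
weights = {"files_changed": 0.5, "recent_failures": 2.0, "days_since_last_run": 0.1}
baseline = {"files_changed": 4, "recent_failures": 0.5, "days_since_last_run": 3}
commit = {"files_changed": 20, "recent_failures": 0, "days_since_last_run": 3}
why = explain_linear_score(weights, baseline, commit)
# {'files_changed': 8.0, 'recent_failures': -1.0, 'days_since_last_run': 0.0}
```

The output reads as a sentence a tester can defend: this commit was scored as risky mostly because it touched far more files than usual.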

Explainability vs. Transparency vs. Observability

These are related but distinct:

- Transparency shows that AI is involved

- Observability exposes logs and events

- Explainability reveals reasoning

Each is achieved differently: transparency through disclosure, observability through logs and event data, and explainability through techniques that connect a specific output to the inputs behind it.

A tool can be transparent and observable while still being impossible to reason about. QA teams don’t just need data — they need causality.

Hybrid evaluation strategies combine automated metrics with human reviews to balance cost, scale, and accuracy.

The Cost of Non-Explainable AI

When explainability is missing, teams compensate by:

- Rerunning entire suites “just in case”

- Manually validating AI decisions

- Losing trust in automation while still paying for it

For AI technologies, explainability is crucial so that users and stakeholders can understand, trust, and effectively validate model decisions.

Explainability is what keeps AI assistive instead of quietly authoritative.

XAI can improve user experience by helping end users trust that the AI is making good decisions.

What Is Bias in AI Models

Bottom line: Bias in AI testing tools doesn’t target users — it targets scenarios.

In software testing, bias usually means the system overvalues what happens often and undervalues what happens rarely. That’s a problem, because serious defects almost never live on the happy path. Addressing bias requires proactive strategies, continuous evaluation, and ethical considerations to ensure fairer AI outputs and reduce unintended societal impacts.

In user-facing AI, bias and fairness testing checks that outcomes are equitable across demographic groups.

Common Sources of Bias in Testing Tools

Bias emerges naturally from:

- Historical test data

- Frequently executed user flows

- “Cleaned” test suites that removed difficult cases

Bias can also be introduced during data collection, through unrepresentative or non-diverse samples, as well as through the training data used to build AI models. Additionally, data labeling processes can reinforce or introduce bias, especially if labeling guidelines or annotator perspectives are inconsistent.

AI systems can internalize implicit biases from their training data, leading to prejudiced outputs.

Over time, AI systems learn a distorted picture of risk.

How Bias Reduces Real Coverage

Bias often manifests as:

- Certain tests being consistently deprioritized

- Edge cases rarely executed

- New features inheriting outdated risk profiles

- Accessibility and negative paths being under-tested

Bias can occur at various stages of the AI development process, including data collection, training, and evaluation.

Dashboards look healthier. Execution times drop. Coverage quietly shrinks.
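Because the shrinkage is quiet, it helps to measure it directly. A sketch, assuming you can list the full suite and the tests actually executed in each recent run; the 20% threshold and the test names are placeholders. A test that keeps landing on this list is a candidate for a human decision, not an automatic one.

```python
from collections import Counter
from typing import Iterable, List, Sequence, Set


def starved_tests(
    all_tests: Iterable[str], executed_runs: Sequence[Set[str]], min_rate: float = 0.2
) -> List[str]:
    """Tests that ran in fewer than min_rate of recent pipeline runs."""
    if not executed_runs:
        raise ValueError("need at least one run")
    counts = Counter(test for run in executed_runs for test in run)
    n = len(executed_runs)
    return sorted(test for test in all_tests if counts[test] / n < min_rate)


suite = ["checkout", "login", "invalid_card", "a11y_keyboard_nav"]
recent_runs = [{"checkout", "login"} for _ in range(10)]  # what the AI chose to run
recent_runs[0].add("invalid_card")                          # negative path ran once
neglected = starved_tests(suite, recent_runs)
```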

It is crucial to identify bias during the evaluation process to ensure model fairness and reliability.

Modern evaluation screens for demographic bias and toxic outputs to comply with ethical standards.

Why Small Teams Are Most Exposed

Small teams rely heavily on automation and historical signals. When AI bias creeps in, there may be no dedicated role left to challenge it — until a production incident exposes the gap.

Mitigating AI bias requires a comprehensive, multifaceted approach, which is especially demanding for small teams that lack specialized resources.

Bias isn’t a bug. It’s a constraint that must be actively managed.

Addressing bias in AI requires a continuous feedback loop where models are regularly evaluated and updated based on real-world interactions.

When AI Should Not Decide Test Priority

Bottom line: Some testing decisions are too risky to automate away.

AI can assist prioritization, but it should not independently decide coverage in high-risk areas. When building AI systems, it's crucial to integrate evaluation and human oversight to ensure responsible and trustworthy outcomes.

High-Risk Areas That Should Remain Human-Owned

- Checkout, payments, and billing

- Authentication and authorization

- Compliance and legal workflows

- New or recently modified features

In these high-risk areas, domain experts should review AI model outputs to ensure accuracy, reliability, and trustworthiness.

Historical stability is not a safety signal in these areas.

Human-in-the-loop evaluation involves domain experts or trained annotators reviewing model outputs to provide qualitative feedback or ground truth comparisons.

Assistive vs. Authoritative AI

Assistive AI suggests. Authoritative AI decides.

In QA, the second is rarely acceptable. Incorporating a human-in-the-loop approach is essential for maintaining oversight, ensuring that human judgment and domain expertise guide critical evaluation workflows.

A useful rule of thumb:

If skipping a test would require a conversation in a release meeting, AI should not skip it silently.
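That rule of thumb can be encoded as a guard between an AI prioritizer and the test runner. A sketch, assuming tests carry tags and a recently-changed flag; the tag names are hypothetical stand-ins for the human-owned areas listed earlier, and `PlannedTest` and `apply_ai_skips` are illustrative names.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set, Tuple

# Hypothetical tags for the human-owned, high-risk areas
PROTECTED_TAGS = frozenset({"payments", "billing", "auth", "compliance"})


@dataclass(frozen=True)
class PlannedTest:
    name: str
    tags: FrozenSet[str]
    recently_changed: bool = False


def apply_ai_skips(
    tests: Iterable[PlannedTest], suggested_skips: Set[str]
) -> Tuple[List[str], List[str], List[str]]:
    """Return (to_run, skipped, needs_review).

    The AI may skip low-risk tests. If it suggests skipping a protected test,
    the test runs anyway and is surfaced for human review instead.
    """
    to_run: List[str] = []
    skipped: List[str] = []
    needs_review: List[str] = []
    for test in tests:
        protected = bool(test.tags & PROTECTED_TAGS) or test.recently_changed
        if test.name not in suggested_skips:
            to_run.append(test.name)
        elif protected:
            to_run.append(test.name)
            needs_review.append(test.name)
        else:
            skipped.append(test.name)
    return to_run, skipped, needs_review


plan = [
    PlannedTest("pay_with_card", frozenset({"payments"})),
    PlannedTest("footer_links", frozenset({"ui"})),
    PlannedTest("new_onboarding", frozenset({"ui"}), recently_changed=True),
    PlannedTest("login", frozenset({"auth"})),
]
to_run, skipped, review = apply_ai_skips(plan, {"pay_with_card", "footer_links", "new_onboarding"})
```

The AI keeps its assistive role for low-risk tests, while every attempted skip in a protected area turns into an explicit review item instead of a silent omission.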

Human-in-the-loop evaluation is particularly useful for subjective tasks, such as content moderation or summarization.

What Responsible AI Means

Bottom line: Responsible AI reduces risk without removing human judgment.

A practical responsible AI definition for QA teams is simple:

Responsible AI is predictable, explainable, and subordinate to human decision-making. Responsible artificial intelligence also incorporates principles like fairness, transparency, safety, privacy, and accountability.

Responsible tools:

- Keep humans in the loop by default

- Prefer conservative automation

- Degrade gracefully when uncertain

- Expose their assumptions instead of hiding them
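Degrading gracefully can be as simple as a conservative default. A sketch, assuming a hypothetical prioritizer that returns a ranking plus a confidence score; the threshold is a placeholder, and the score is only useful if it is calibrated, which is something to evaluate rather than assume. The returned reason string is one way to expose the assumption instead of hiding it.

```python
from typing import List, Optional, Sequence, Tuple


def select_tests(
    all_tests: Sequence[str],
    ai_ranking: Sequence[str],
    confidence: Optional[float],
    threshold: float = 0.8,
    top_n: int = 50,
) -> Tuple[List[str], str]:
    """Use the AI's top picks only when it reports enough confidence; otherwise run everything.

    The second return value states which path was taken, so it can be
    printed in CI logs.
    """
    if confidence is None or confidence < threshold:
        return list(all_tests), "fallback: full suite (model confidence missing or low)"
    known = set(all_tests)
    # Ignore ranked names that are no longer in the suite
    chosen = [t for t in ai_ranking if t in known][:top_n]
    return chosen, f"ai: top {len(chosen)} of {len(all_tests)} (confidence {confidence:.2f})"


suite = ["checkout", "login", "search", "profile"]
ranking = ["checkout", "search", "retired_test"]
low = select_tests(suite, ranking, confidence=0.55)
high = select_tests(suite, ranking, confidence=0.93, top_n=2)
```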

It is also important to communicate evaluation results clearly to non-technical stakeholders, so that trust in AI systems rests on an accurate picture of what they do.

Often, less AI produces better quality — because testers retain situational awareness.

Responsibility is not a feature. It’s an ongoing practice.

Meeting legal requirements is essential, especially in sensitive domains such as healthcare, finance, and law, to ensure accountability and trustworthiness. For high-risk AI systems in 2026, that means alignment with regulations such as the EU AI Act and with frameworks such as the NIST AI Risk Management Framework (voluntary guidance rather than law). Model evaluations belong in the AI governance strategy that demonstrates this compliance.

Conclusion: AI Should Support Testers, Not Replace Judgment

AI can improve software testing — when it is evaluated rigorously and constrained deliberately. In real world applications, thorough model evaluation ensures that AI-driven testing tools are reliable, safe, and fair in operational environments.

Accuracy in QA is about trust, not metrics. Explainability is about accountability, not curiosity. Bias is about coverage gaps, not intent.

For example, a team that rigorously evaluates their AI model for both technical performance and ethical impact can better align their testing outcomes with business goals and regulatory requirements.

The teams that benefit most from AI won’t be the ones that automate the fastest. They’ll be the ones that stay skeptical, intentional, and in control.

Effective AI model assessment in 2026 requires balancing technical metrics with business impact and ethical considerations.

Where BugBug Fits In

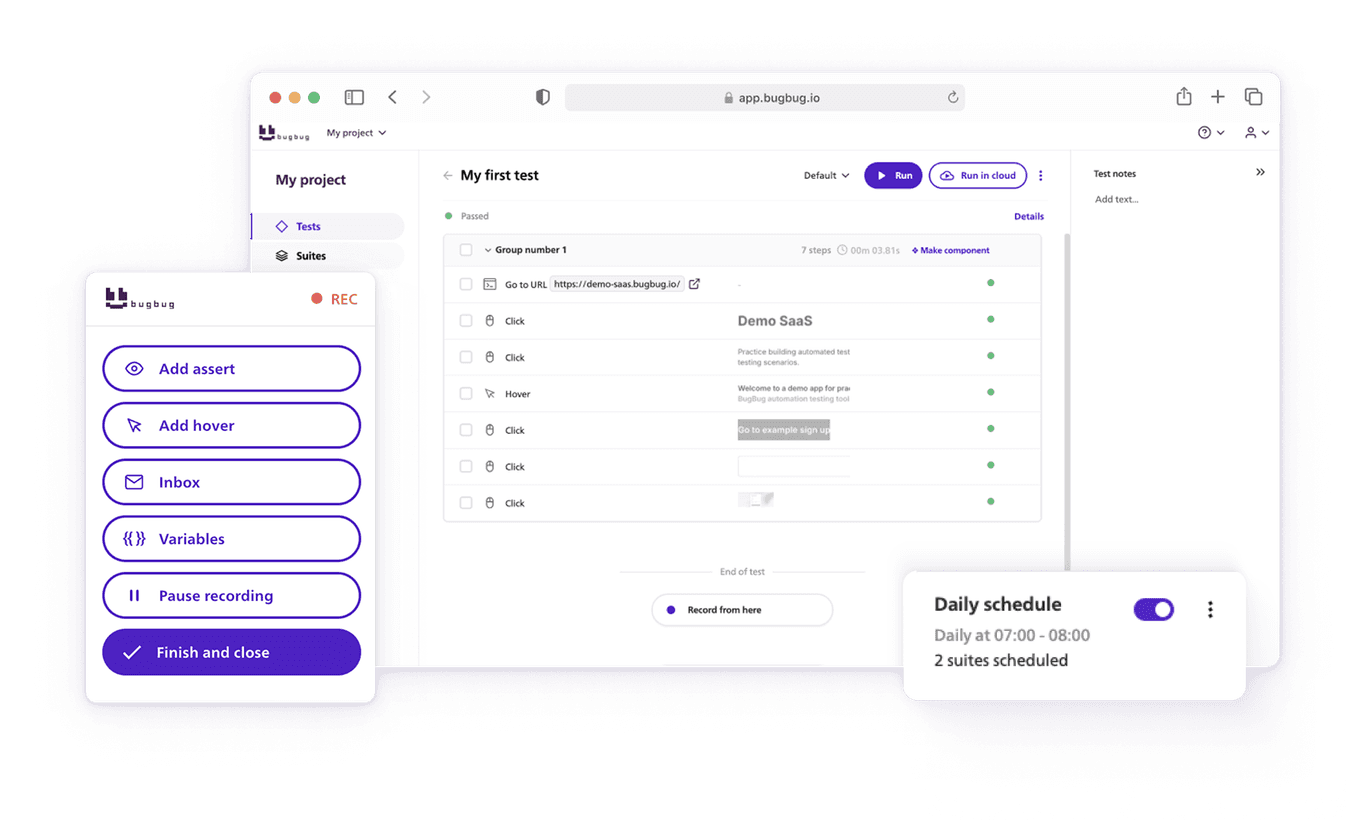

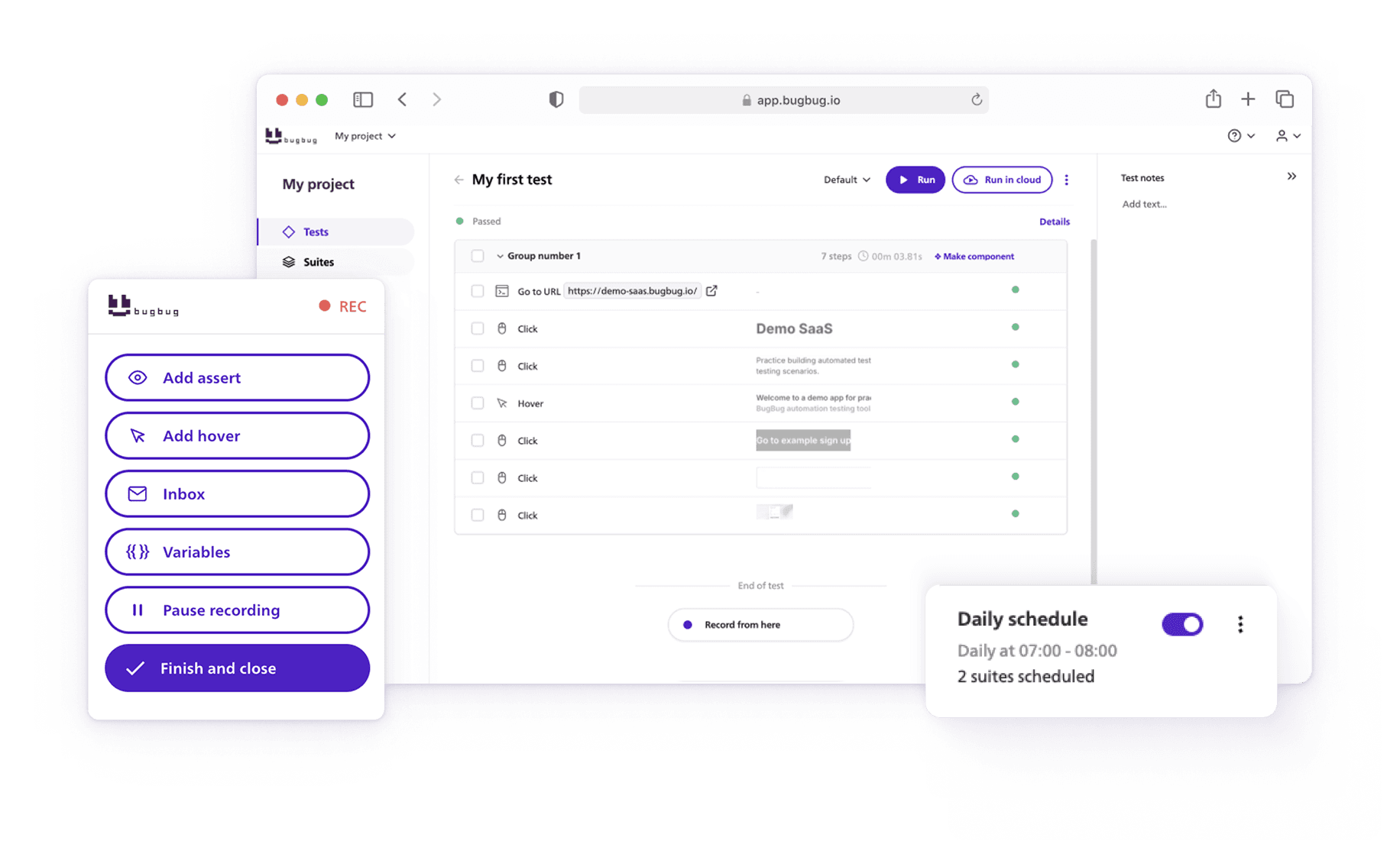

Tools like BugBug take a deliberately conservative approach to automation — prioritizing clarity, predictability, and human control over opaque intelligence.

Instead of relying on black-box AI decisions, BugBug focuses on:

- Readable, maintainable test automation

- Deterministic behavior teams can reason about

- Fast feedback without hidden prioritization logic

For teams that want automation without surrendering judgment — especially small and mid-size engineering teams — this approach often proves more sustainable than fully AI-driven testing stacks.

Happy (automated) testing!