- 🎯 TL;DR - Do You Need an AI Testing Framework?

- AI Testing Frameworks - The Next Buzzword in Software Testing

- What Is an AI Testing Framework? (And Why the Term Is Overused)

- Why AI Testing Frameworks Exist at All

- The Reality for Small QA Teams: Where AI Testing Frameworks Become Friction

- AI Evaluation and Explainability: The Hard Problems Frameworks Don’t Eliminate

- Do You Actually Need AI in Your Testing Stack?

- What Consistently Improves QA Results (Without an AI Testing Framework)

- Where BugBug Fits: Achieving the Benefits Without the Framework Overhead

- When an AI Testing Framework Might Actually Make Sense

🎯 TL;DR - Do You Need an AI Testing Framework?

- AI testing frameworks don’t fix broken automation — they assume stable, well-maintained tests and often amplify existing flakiness and noise.

- Most QA teams struggle with fundamentals, not AI gaps: brittle tests, high maintenance costs, slow CI feedback, and excessive manual regression.

- AI frameworks add overhead for small teams, introducing setup, evaluation, explainability, and governance work that often increases manual effort.

- Explainability and trust are major blockers — probabilistic AI decisions are harder to justify than deterministic test failures, pushing teams back to manual checks.

- Better automation beats smarter automation — reliable, readable tests, faster feedback, and reduced manual regression deliver higher ROI than AI orchestration layers.

AI Testing Frameworks - The Next Buzzword in Software Testing

Vendors promise smarter test creation, automated decision-making, and fewer manual QA tasks — all powered by artificial intelligence.

But when you look at how most QA teams actually work, a different picture emerges.

Most teams aren’t blocked by a lack of AI. They’re blocked by:

- Slow and brittle automated tests

- Too much manual regression

- High maintenance cost of existing test suites

- Constant context switching between tools

These are key pain points in test automation that directly impact productivity and effectiveness. Brittle tests, which frequently fail due to minor changes, require ongoing test maintenance and can slow down the testing process.

An AI testing framework doesn’t magically fix these issues. In many cases, it adds another layer of complexity on top of them. Test maintenance is often the most time-consuming part of test automation, especially with code-based open-source frameworks, and a new layer does not make that work go away.

This is where the disconnect starts. A robust testing infrastructure is essential for addressing these pain points, and AI frameworks do not replace the need for strong fundamentals.

What Is an AI Testing Framework? (And Why the Term Is Overused)

In theory, an AI testing framework is a structured system that defines how artificial intelligence is used across the testing process.

In practice, the term is used far more loosely.

Depending on who you ask, an AI testing framework might mean:

- A collection of AI-powered testing tools

- A machine learning pipeline that analyzes test results

- A layer that generates or prioritizes test cases

- A governance model for “human-in-the-loop” testing

- A comprehensive test management platform that streamlines test planning, creation, execution, and maintenance

This lack of clarity is already a red flag.

AI testing frameworks have evolved from simple script execution to intelligent systems capable of autonomously creating, maintaining, and analyzing software tests.

At its core, an AI testing framework does not test your software. It coordinates how AI models and tools are applied to testing activities such as:

- Test generation

- Failure analysis

- Risk-based prioritization

- Test coverage recommendations

- Test planning and management

The critical distinction is this:

A framework orchestrates AI — it does not improve test quality by default.

Seamless integration with CI/CD and development workflows is a key feature of modern AI testing frameworks.

If your tests are flaky, slow, or poorly scoped, an AI testing framework will not fix that. It will simply operate on top of unreliable signals and produce confident-looking output.

This is why many teams adopt AI frameworks and still struggle with:

- Low trust in test results

- Hard-to-explain decisions

- Increased manual review instead of less

The framework didn’t fail — the assumption did. AI frameworks assume strong testing fundamentals. Without them, they amplify existing problems rather than solve them.

Why AI Testing Frameworks Exist at All

AI testing frameworks didn’t appear out of nowhere. They are a response to very real problems — just not the problems most small QA teams have.

In large organizations, testing environments tend to look like this:

- Tens of thousands of automated tests

- Multiple teams committing changes daily

- Long-running CI pipelines

- Hundreds of test failures per release, many of them unrelated to actual product risk

At that scale, traditional automation alone stops being effective. Teams can’t manually analyze every failure, decide what matters, or prioritize coverage intelligently. This is where AI testing frameworks start to make sense. In fact, 81% of development teams use AI in their testing workflows to manage complexity and scale.

Their core promise is coordination:

- Use AI to group similar failures and reduce noise

- Predict which tests are likely to fail

- Prioritize execution based on risk and historical data

- Recommend where coverage is missing

AI can significantly impact the most time-consuming steps in the automated testing process, particularly test creation and maintenance.

In theory, this reduces human effort and increases signal quality.
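To make "grouping similar failures" concrete, here is a minimal sketch of the idea. It is a rule-based stand-in, not a machine learning model and not any vendor's implementation: failure messages are normalized into a signature so that volatile details (timings, ids) do not split one underlying problem into many. The function names and sample messages are hypothetical.

```python
import re
from collections import defaultdict


def failure_signature(message: str) -> str:
    """Normalize a failure message so volatile details do not split groups."""
    sig = message.lower()
    sig = re.sub(r"0x[0-9a-f]+", "<hex>", sig)  # memory addresses
    sig = re.sub(r"\d+", "<n>", sig)            # ids, timings, status codes
    return re.sub(r"\s+", " ", sig).strip()


def group_failures(failures):
    """Group (test_name, message) pairs by normalized signature."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[failure_signature(message)].append(test_name)
    return dict(groups)


# Hypothetical failures from one CI run.
failures = [
    ("test_checkout", "Timeout after 30000 ms waiting for #pay-button"),
    ("test_cart", "Timeout after 30012 ms waiting for #pay-button"),
    ("test_login", "AssertionError: expected 200, got 500"),
]
groups = group_failures(failures)
for signature, tests in groups.items():
    print(f"{len(tests)} test(s): {signature}")
```

Three failures collapse into two groups here, which is the kind of noise reduction described above. Note that the grouping is only as good as the failure messages it is fed.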

But there’s an important, often unstated assumption behind all of this:

You already have a mature, stable, well-maintained automation setup.

AI testing frameworks assume:

- Your tests mostly work

- Failures are meaningful signals, not random noise

- Historical data reflects real product behavior

- Teams have time to evaluate and trust AI-driven decisions

Automated tests can quickly become obsolete without proper maintenance, leading to false-positive test failures.

Without these foundations, AI frameworks don’t create clarity — they create interpretation work.

This is why AI testing frameworks emerged first in enterprises, not startups. They’re designed to manage complexity at scale, not to bootstrap quality from scratch. Teams using open-source frameworks often spend more time on test creation and maintenance, even with AI assistance.

The Reality for Small QA Teams: Where AI Testing Frameworks Become Friction

For small and mid-sized QA teams, the reality is very different.

Most teams are operating under constant constraints:

- Limited automation coverage

- Tight release deadlines

- Frequent UI changes

- No dedicated roles for AI evaluation or governance

Limited technical expertise within these teams can make it difficult to configure and maintain AI testing frameworks, especially when advanced setup or troubleshooting is required.

In this context, introducing an AI testing framework often creates more problems than it solves. AI testing frameworks may not fully address complex scenarios that require advanced testing capabilities, such as intricate user flows or testing across multiple environments.

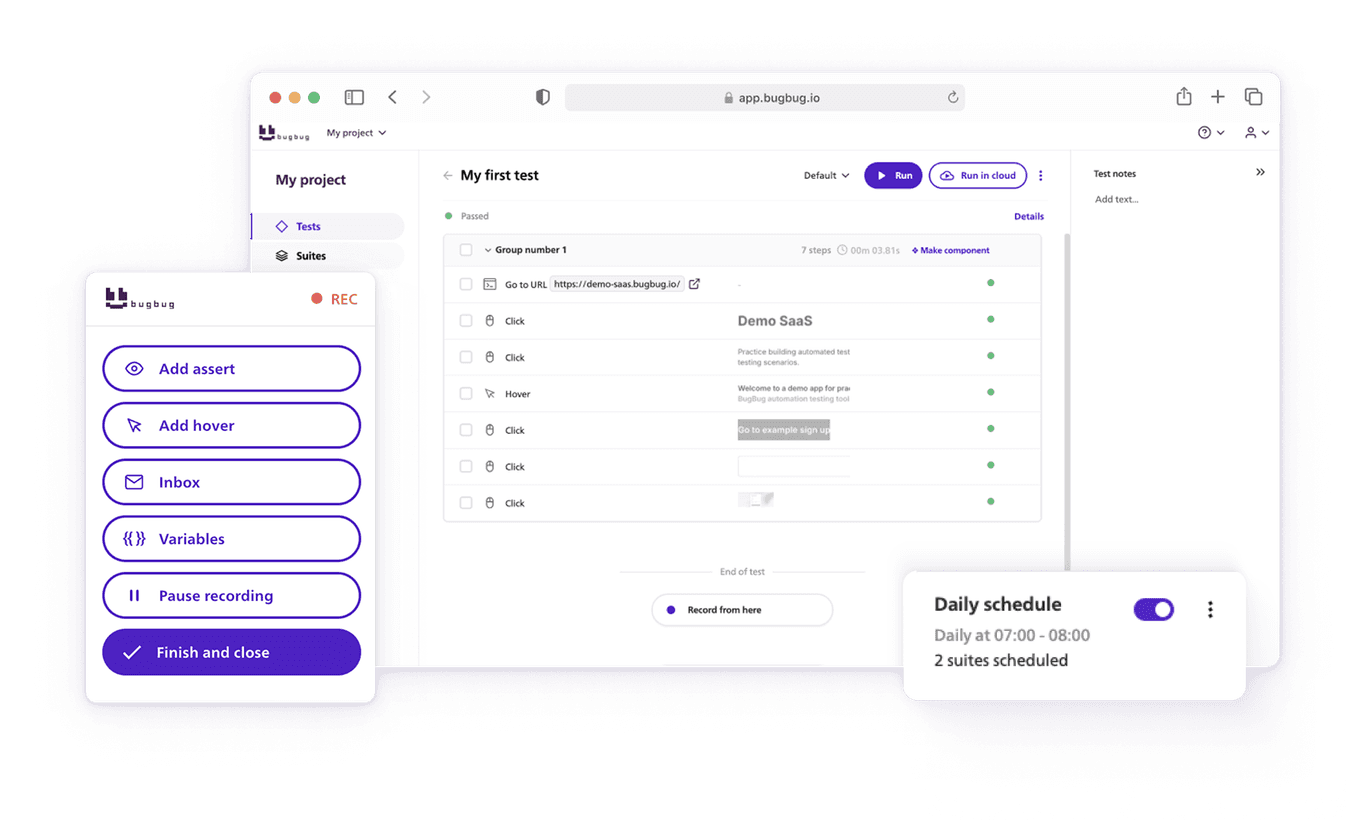

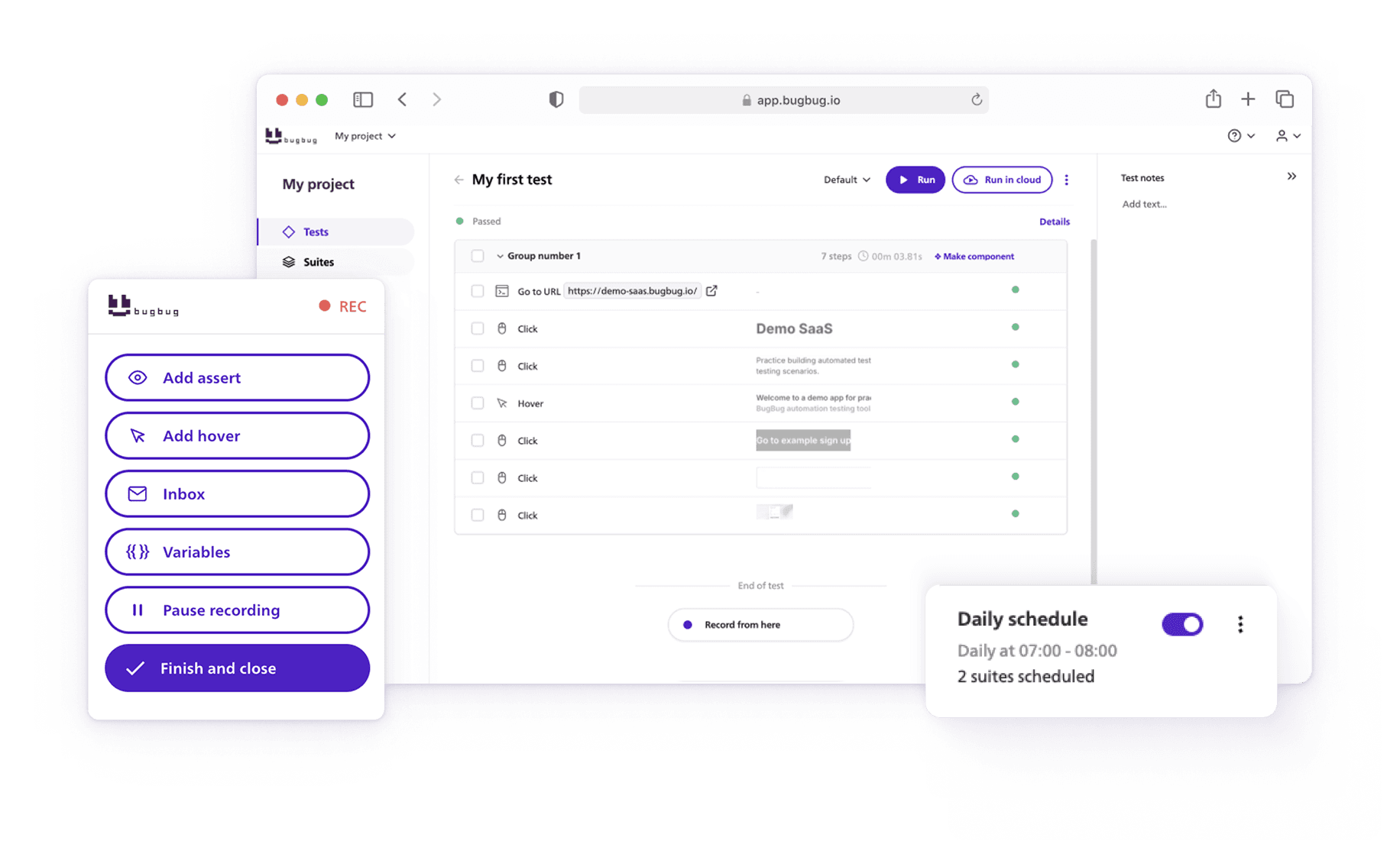

Hidden Cost #1: Setup and Maintenance Overhead

AI testing frameworks require configuration:

- Defining evaluation criteria

- Connecting data sources

- Tuning thresholds and signals

- Maintaining integrations

Automated test maintenance is already a frequent bottleneck in the code release process. Framework setup and upkeep come on top of it.

This work doesn’t replace testing — it competes with it.

Instead of writing or fixing tests, teams end up maintaining the framework itself.

Hidden Cost #2: AI Evaluation Becomes Manual Work

AI frameworks rely heavily on human-in-the-loop workflows. Someone still has to:

- Review AI-generated tests

- Validate prioritization decisions

- Investigate why something was ignored or flagged

For small teams, this often means more manual work, not less — just redistributed into review and explanation tasks.

AI evaluation becomes a second testing layer, without clearly defined ownership.

Hidden Cost #3: Explainability Gaps Undermine Trust

Explainability is critical in testing. QA teams need to justify:

- Why a test failed

- Why a release is safe

- Why something was skipped

AI testing frameworks frequently struggle here.

When an AI model says “this test is low risk” or “this failure is probably irrelevant,” teams still need to explain that decision to developers, product managers, and stakeholders.

Some AI testing frameworks address this by offering logic insights, which mimic a senior test engineer's review and suggest improvements to test automation processes. These logic insights provide recommendations and intelligent suggestions, helping teams understand and enhance their testing strategies.

If the explanation is vague or probabilistic, trust erodes quickly — and teams revert to manual verification anyway.

The Result: More Architecture, Same Bottlenecks

This is the core issue.

Small QA teams adopt AI testing frameworks hoping to:

- Reduce manual testing

- Increase confidence

- Move faster

Instead, they often end up with:

- Another system to maintain

- The ongoing need to maintain automated tests, which remains a significant challenge even with AI frameworks

- More decisions to validate

- More tooling complexity

The bottleneck doesn’t disappear — it just moves.

AI Evaluation and Explainability: The Hard Problems Frameworks Don’t Eliminate

One of the most overlooked aspects of any AI testing framework is evaluation.

👉 Check more in: Generative AI Testing Tools - Evaluation Checklist

Traditional test automation has a clear feedback loop:

- A test passes or fails

- The failure has a traceable cause

- A human can inspect, reproduce, and explain it

AI-driven systems complicate this loop.

When AI is involved in generating tests, prioritizing execution, or suppressing failures, QA teams must now evaluate the AI itself — not just the application under test.

This introduces new questions:

- Was the AI decision correct, or just statistically likely?

- What data influenced this outcome?

- Would the same decision be made tomorrow, with slightly different inputs?

For most teams, these questions are harder to answer than a failing assertion.

Explainability Is Not Optional in QA

In testing, explainability isn’t a “nice to have.” It’s mandatory.

QA teams are expected to explain:

- Why a release is blocked

- Why a defect escaped

- Why certain tests were skipped or deprioritized

AI testing frameworks often respond to this need with dashboards, confidence scores, or probability labels. Comprehensive test management platforms can help provide traceability and clear explanations for test decisions, making it easier for teams to justify actions and outcomes throughout the test lifecycle.

But these rarely translate into explanations that non-ML stakeholders can trust.

A statement like “this test was deprioritized due to low historical risk” is not actionable unless the team can explain:

- What “risk” means in this context

- Which history was considered

- Why that history still applies after recent changes
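As an illustration of what an explainable answer to those three questions could look like, the sketch below returns a risk score together with the inputs that produced it. The formula, the `change_weight` parameter, and all names are assumptions made for this example, not how any particular framework computes risk.

```python
from dataclasses import dataclass


@dataclass
class RunHistory:
    name: str
    recent_runs: int            # runs inside the chosen history window
    recent_failures: int        # failures inside that window
    touches_changed_code: bool  # does the test cover code changed in this build?


def risk_report(h: RunHistory, change_weight: float = 0.5) -> dict:
    """Return a risk score plus every input that went into it."""
    failure_rate = h.recent_failures / h.recent_runs if h.recent_runs else 0.0
    # Recent changes raise the score so that old history does not hide new risk.
    change_bonus = change_weight if h.touches_changed_code else 0.0
    score = min(1.0, failure_rate + change_bonus)
    return {
        "test": h.name,
        "score": round(score, 2),
        "failure_rate": round(failure_rate, 2),  # what "risk" means here
        "runs_considered": h.recent_runs,        # which history was considered
        "change_bonus": change_bonus,            # why history may no longer apply
    }


report = risk_report(RunHistory("test_checkout", 40, 2, True))
print(report)
```

A report like this can be read by a developer or product manager without ML knowledge. A bare confidence score cannot.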

Without strong explainability, teams fall back to manual checks — negating the supposed efficiency gains of the framework.

Human-in-the-Loop Sounds Good — Until You Have to Run It

Most AI testing frameworks promote human-in-the-loop workflows as a safety mechanism.

In theory:

- AI proposes

- Humans approve

- Quality improves

In practice:

- Humans review large volumes of AI output

- Ownership is unclear

- Review work scales faster than benefits

Some platforms address this by allowing users of varying technical skill levels to easily create, manage, and review tests, reducing the burden of manual review and making test automation more accessible.

For small QA teams, this often becomes unsustainable. Instead of reducing workload, AI introduces a new class of work: supervising automated decisions that are difficult to verify quickly.

The result is not autonomy — it’s oversight fatigue.

Do You Actually Need AI in Your Testing Stack?

Before adopting an AI testing framework, teams should pause, identify the key pain points in their testing process, and ask a more basic question:

What problem are we actually trying to solve?

In many cases, AI is applied to symptoms, not root causes. AI testing tools can help automate complex test scenarios and generate test steps from plain-English descriptions, but that only helps when it targets the actual problem.

Here are the most common misalignments.

If Test Creation Is Slow, AI Might Help — But Only Marginally

AI-generated tests can speed up initial creation, but they don’t eliminate:

- Test review

- Maintenance

- Ownership

AI-powered test generation creates test cases automatically, typically from historical data. AI-assisted test creation is now common across testing tools: users enter a plain-English description of the steps, and the AI translates it into a test script. These capabilities can also improve bug detection and integrate with CI/CD pipelines.

If your team already struggles to maintain existing tests, adding automatically generated ones often increases long-term cost.

If Failures Are Noisy, AI Often Amplifies the Noise

AI frameworks promise smarter failure analysis. But if your test suite is:

- Flaky

- Poorly scoped

- Sensitive to UI churn

AI will learn from that noise and reflect it back — with added confidence.

Fixing flaky tests usually delivers more value than classifying them with AI. That said, some AI testing frameworks do reduce maintenance by removing the need to manually update locators after every minor UI change, with claimed reductions in maintenance effort of up to 85%.
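Finding flaky tests does not require a model. A minimal sketch, assuming run history is available as `(test_name, commit_sha, passed)` records (a hypothetical format): a test is flagged when the same commit produced both a pass and a fail, since the code under test did not change between those runs.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return names of tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for test_name, commit_sha, passed in runs:
        outcomes[(test_name, commit_sha)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means nondeterminism.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


# Hypothetical history: test_search is retried on commit "a1" and flips.
runs = [
    ("test_search", "a1", False),
    ("test_search", "a1", True),
    ("test_login", "a1", True),
    ("test_login", "b2", False),  # fails on a different commit: not flaky by this rule
]
flaky = flaky_tests(runs)
print(flaky)
```

This rule is deliberately conservative. It only catches tests that were run more than once on the same commit, so it works best when CI retries or reruns are recorded.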

If Coverage Is Missing, AI Can’t Replace Product Understanding

AI can suggest where coverage might be missing, but it can’t decide:

- What matters most to users

- Which flows are business-critical

- Where risk is acceptable

Those decisions require product context, not statistical inference. AI-driven test case generation can still help on top of that, by automatically creating relevant test cases based on application behavior and changes.

The Practical Rule of Thumb

If your biggest problems are:

- Too much manual regression

- Slow feedback in CI

- High cost of building and maintaining tests

Then an AI testing framework is probably not the answer.

Those problems are usually solved by better automation. Automation testing and the use of AI test automation tools can help teams quickly establish and sustain automated testing processes, making it easier to manage and maintain tests over time, and reducing manual effort.

What Consistently Improves QA Results (Without an AI Testing Framework)

If you strip away the AI hype, a clear pattern emerges across high-performing QA teams.

The biggest gains in software quality don’t come from sophisticated frameworks — they come from boringly effective fundamentals. Effective test management, thorough test planning, and streamlined testing workflows are key to improving software quality.

Across startups and growing product teams, the improvements that actually move the needle look like this:

Reliable Automation Over Clever Automation

Teams benefit far more from:

- Stable browser-based automated tests, with cross-browser and cross-platform coverage for reliability across environments, run with web testing tools that automate real user interactions

- Clear assertions tied to user behavior

- Predictable execution in CI

than from AI-generated or AI-prioritized tests that require explanation and review.

A smaller, reliable test suite consistently outperforms a large, “intelligent” one that nobody fully trusts.

Faster Feedback Beats Smarter Feedback

One of the main promises of AI testing frameworks is better insight.

But in practice, speed matters more than sophistication.

Fast feedback allows teams to:

- Catch regressions earlier

- Debug changes while context is fresh

- Reduce the cost of fixing defects

Waiting for AI-driven analysis, prioritization, or evaluation often delays the moment when a developer simply sees a failure and fixes it.

Reduced Manual Regression Has the Highest ROI

Manual regression is where most QA teams lose time and morale.

The most effective teams focus on:

- Automating repeatable, high-confidence scenarios, using end-to-end testing frameworks to cover complete and complex flows

- Covering critical user flows first

- Letting humans focus on exploratory and edge-case testing

This approach reduces manual workload immediately — without requiring new layers of AI decision-making.

Clear Ownership and Communication Matter More Than AI Decisions

Many problems AI frameworks try to solve are actually organizational, not technical:

- Unclear test ownership

- Poor communication between QA and development

- Constant requirement changes

No framework can compensate for these issues. But simple, accessible automation tools often improve collaboration by making tests easier to create, understand, and maintain. Platforms with an intuitive interface and low-code tools allow both technical and non-technical users to easily create and maintain tests, further improving collaboration and reducing the learning curve.

Where BugBug Fits: Achieving the Benefits Without the Framework Overhead

This is where the contrast becomes clear.

Most QA teams exploring AI testing frameworks aren’t chasing AI for its own sake. They want:

- Less manual testing

- Faster automation

- Higher confidence in releases

- Fewer bottlenecks caused by limited QA resources

BugBug Focuses on Execution, Not Orchestration

Instead of adding an AI layer on top of testing, BugBug improves the core testing workflow itself:

- Easy-to-use browser automation reduces the cost of building tests and enables teams to efficiently run tests with minimal setup

- Low learning curve minimizes context switching

- Tests remain readable and understandable by the whole team

This means teams spend time testing the product, not managing tooling.

Reducing Manual Testing Without Hiring or Heavy Architecture

One of the quiet promises of AI testing frameworks is scale — “do more with less.”

BugBug delivers this in a simpler way:

- QA engineers automate repetitive scenarios faster, and can create automated tests quickly, even without deep technical expertise

- Developers can contribute to automation without deep test expertise

- Teams add automated QA capacity without hiring specialists

No ML pipelines. No evaluation dashboards. No explainability problem.

Confidence Comes From Clarity, Not Prediction

BugBug doesn’t attempt to predict risk or automatically deprioritize failures.

Instead, it gives teams:

- Clear, deterministic test results — BugBug ensures the same test can be replicated and executed consistently, providing reliable results.

- Fast feedback in CI

- High confidence that critical flows still work

This kind of confidence is easier to defend, easier to explain, and easier to trust than probabilistic AI-driven decisions.

When an AI Testing Framework Might Actually Make Sense

Despite the skepticism, AI testing frameworks are not inherently useless. There are situations where they provide real value — they’re just far less common than marketing suggests. Both proprietary tools and open-source AI testing tools are available, each with their own advantages. Proprietary tools often offer dedicated support and advanced enterprise features, while open-source AI testing tools are community-driven and free, making them easily accessible to developers and organizations.

You’re Operating at Enterprise Scale

AI testing frameworks start to make sense when testing complexity is no longer human-manageable.

Typical indicators:

- Tens of thousands of automated tests

- Multiple CI pipelines running in parallel

- Dozens of teams contributing changes daily

- Hundreds of test failures per release cycle

At this scale, a robust testing infrastructure is essential for managing automation and ensuring reliability, and humans can’t reasonably triage everything. AI can help group failures, detect patterns, and reduce noise, provided the underlying automation is already solid.

You Have Dedicated Resources for AI Evaluation and Governance

An AI testing framework is not “set and forget.”

It requires:

- People who own AI evaluation

- Time to review and validate AI decisions

- Processes for handling false positives and false negatives

- Clear accountability when AI-driven decisions affect releases

If no one in your organization is responsible for answering “Why did the AI do this?”, the framework will quickly lose trust.
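One lightweight way to give that ownership something to measure is to audit a sample of AI triage decisions against human review. The sketch below assumes each sampled decision is recorded as a pair of booleans (did the AI flag the failure as a real defect, did a human confirm it). The record format and function name are hypothetical.

```python
def audit_triage(decisions):
    """Compare AI triage flags with human verdicts on the same failures."""
    tp = fp = fn = tn = 0
    for ai_flagged, human_confirmed in decisions:
        if ai_flagged and human_confirmed:
            tp += 1
        elif ai_flagged and not human_confirmed:
            fp += 1  # AI raised noise as a real defect
        elif not ai_flagged and human_confirmed:
            fn += 1  # AI dismissed a real defect
        else:
            tn += 1
    return {
        "false_positives": fp,
        "false_negatives": fn,
        # None when there is nothing to divide by, rather than a misleading 0.
        "precision": tp / (tp + fp) if tp + fp else None,
        "recall": tp / (tp + fn) if tp + fn else None,
    }


# Hypothetical audit sample of five reviewed failures.
decisions = [(True, True), (True, False), (False, True), (False, False), (True, True)]
audit = audit_triage(decisions)
print(audit)
```

False negatives deserve the most attention in this context: each one is a real defect the AI would have let through to a release.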

Your Test Data Is Stable, Meaningful, and Trusted

AI frameworks rely heavily on historical signals:

- Failure patterns

- Execution history

- Change frequency

If your test suite is already:

- Stable

- Low-flake

- Consistently maintained

then AI has something reliable to learn from.

If not, AI will simply automate confusion.

You’re Solving Triage and Insight — Not Core Automation

AI testing frameworks are best at meta-problems:

- Analyzing results

- Prioritizing execution

- Identifying trends

Key benefits of AI testing frameworks include logic insights and predictive analytics, which help teams focus on high-risk areas by providing intelligent suggestions similar to a senior test engineer's review. Features such as self-healing tests that adapt to UI changes and natural-language script generation are also important advantages.

They are not a substitute for:

- Good test design

- Reliable automation

- Clear product understanding

If your main challenge is still building and maintaining automated tests, an AI framework is premature.

A Useful Reality Check

A simple rule of thumb for QA leaders:

If your biggest testing problems can be solved by faster automation, clearer results, or fewer tools, you don’t need an AI testing framework yet.

At that stage, investing in better automation fundamentals will deliver far higher ROI — with less risk and less operational overhead. Effective test management, thorough test planning, and streamlined testing workflows are essential for maximizing the benefits of automation.