Self-healing test automation has become one of the most polarizing topics in QA. Browse any Reddit or Slack discussion and you’ll see the same reactions over and over:

- “Self-healing = five backup XPaths.”

- “What happens when the AI heals away a real bug?”

- “Great in demos — fails on real products.”

- “Works fine as long as your entire test suite fits on a login page.”

That skepticism isn’t cynicism; it’s experience. Many of us have been burned by tools that promised “zero maintenance automation” only to produce more chaos than they removed. Traditional automated tests rely on fixed locators, so they often break whenever the application changes and need manual fixes. Self-healing test automation aims to reduce that manual work by automatically adapting locators to UI or code changes. And with the current wave of AI marketing, vendors are once again promising to replace flaky selectors, brittle flows, and human oversight with “intelligent automation.”

This article cuts through the hype. We’ll look at what self-healing automation really is, how it works, what it genuinely solves, and where it introduces dangerous blind spots. We’ll also explore the broader landscape of AI in test automation — what’s working today, what isn’t — and where pragmatic platforms like BugBug fit into all this without pretending AI is a silver bullet.

Check also:

- What Self-Healing Test Automation Really Means

- How Self-Healing Locators Actually Work

- The Practical Benefits of Self-Healing Automation

- The Risks: How Self-Healing Can Break Your Tests Without You Noticing

- AI in Test Automation That Actually Works Today

- How to Evaluate AI & Self-Healing Tools Without Getting Burned

- A Realistic Roadmap for Teams Considering Self-Healing

- Where BugBug Fits in a World Full of AI Hype (A Stability-First Approach)

- BugBug Uses Stable Selectors, Not AI Guesswork

- Smart Waiting Logic Removes Timing Flakiness

- Reusable Components for Predictable Maintenance

- A Visual Test Editor That Makes Logic Explicit

- Fast, Lightweight Execution With No Healing Overhead

- No Risk of Masking Real Bugs

- A Practical Tool for Teams Who Prefer Engineering Over Hype

- Conclusion — Self-Healing Is Useful, but Only When Treated as a Tool, Not a Strategy

What Self-Healing Test Automation Really Means

When vendors talk about “self-healing tests,” the pitch sounds magical:

- “AI automatically fixes failing tests.”

- “No more flaky selectors.”

- “Zero-maintenance automation.”

But practitioners need a clearer, more honest definition:

Self-healing test automation refers to mechanisms that automatically adjust how a test identifies UI elements when the original locator stops working.

That’s it. No AI agent rewriting your test logic. No semantic understanding of the application. No ability to distinguish harmless UI tweaks from functional regressions.

And—this part is crucial—self-healing applies almost exclusively to locators, not:

- assertions

- expected values

- test flows

- business rules

- system behavior

If a tool claims it can “heal” these things, treat it as a major red flag.

Self-healing mechanisms typically operate at the level of individual test steps, automatically updating how each step locates UI elements when the UI changes.

How Self-Healing Locators Actually Work

Most self-healing systems sound smarter than they really are. Under the hood, they usually rely on a combination of predictable mechanisms:

Element fingerprints

A “fingerprint” is a set of attributes used to identify a web element. This can include ID, name, class, XPath, ARIA attributes, and sometimes text content or relative position in the DOM. Secondary identifiers such as relative position, text, and parent/child relationships make healing more robust, because the system can still find the element when primary identifiers change or disappear.

Attribute weighting

Some attributes are more stable than others. For example, an element’s ID is often unique and persistent, while a class name might be shared or change frequently. Self-healing systems assign weights to each attribute to prioritize the most reliable ones.

Fallback strategies

If the primary attribute (like ID) is missing or changed, the system falls back to other attributes—such as name, XPath, or CSS selector—to try to find the element. This layered approach helps maintain test stability even as the UI evolves.

Matching after changes

When a UI is redesigned and an element’s attributes change, the self-healing mechanism compares the old fingerprint to the new DOM and uses the weighted attributes and fallback strategies to find the closest match. This lets the system locate the target element after many UI updates, although the closest match is not guaranteed to be the correct element.
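To make the fingerprint, weighting, and matching steps concrete, here is a minimal sketch. It is not any vendor's actual algorithm: the attribute names, the `WEIGHTS` values, and the dict-based stand-in for DOM elements are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical weights: a higher value means the attribute is assumed to be more stable.
WEIGHTS = {"id": 5.0, "text": 3.0, "role": 2.0, "classes": 1.0}


def attribute_similarity(key: str, old, new) -> float:
    """Similarity in [0, 1] between the stored and the current value of one attribute."""
    if old is None or new is None:
        return 0.0
    if key == "classes":
        old_set, new_set = set(old), set(new)
        union = old_set | new_set
        return len(old_set & new_set) / len(union) if union else 0.0  # Jaccard overlap
    if key == "text":
        return SequenceMatcher(None, old.lower(), new.lower()).ratio()  # fuzzy text match
    return 1.0 if old == new else 0.0


def score(fingerprint: dict, candidate: dict) -> float:
    """Weighted share of the stored fingerprint that the candidate still matches."""
    keys = [k for k in fingerprint if k in WEIGHTS]
    total = sum(WEIGHTS[k] for k in keys)
    if total == 0:
        return 0.0
    matched = sum(
        WEIGHTS[k] * attribute_similarity(k, fingerprint[k], candidate.get(k))
        for k in keys
    )
    return matched / total


def best_match(fingerprint: dict, candidates: list) -> Optional[dict]:
    """Return the highest-scoring candidate, or None if the DOM snapshot is empty."""
    return max(candidates, key=lambda c: score(fingerprint, c), default=None)


# Illustrative data only: a stored fingerprint and two elements from the "new" DOM.
stored = {"id": "add-to-cart", "text": "Add to cart", "role": "button", "classes": ["btn"]}
dom = [
    {"id": "nav-home", "text": "Home", "role": "link", "classes": ["nav-link"]},
    {"id": "add-item", "text": "Add item", "role": "button", "classes": ["btn", "btn-primary"]},
]
print(best_match(stored, dom)["id"])  # expected to pick the renamed button
```

Note that `best_match` always returns something when the DOM is non-empty. That property is exactly why a score threshold and human review matter in practice.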

Multi-attribute element “fingerprints”

The tool tracks an element using a bundle of attributes:

- ID

- text content

- CSS classes

- ARIA labels

- DOM ancestry

- relative position

- sometimes visual features

Similarity scoring

When a locator fails, the system searches the DOM:

“Which current element looks most like the element this test previously interacted with?”

Fallback chains

Common fallback sequences include:

- ID → fallback to text

- text → fallback to CSS

- CSS → fallback to relative position

- relative position → fallback to visual similarity scoring
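A fallback chain like the ones above can be sketched as an ordered list of strategies that are tried until one finds exactly one element. This is an illustrative sketch, not a specific tool's implementation; `find_with_fallback`, the strategy names, and the dict-based elements are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Element = Dict[str, str]
Strategy = Tuple[str, Callable[[Element], bool]]


def find_with_fallback(
    dom: List[Element], strategies: List[Strategy]
) -> Tuple[Optional[Element], Optional[str]]:
    """Try each strategy in order and accept only an unambiguous (single) match."""
    for name, predicate in strategies:
        matches = [el for el in dom if predicate(el)]
        if len(matches) == 1:
            return matches[0], name
    return None, None


# Illustrative DOM snapshot after a redesign renamed the button's ID.
dom = [
    {"id": "add-item", "text": "Add item", "css": "btn btn-primary"},
    {"id": "checkout", "text": "Checkout", "css": "btn"},
]
strategies = [
    ("id", lambda el: el.get("id") == "add-to-cart"),
    ("text", lambda el: el.get("text", "").lower().startswith("add")),
    ("css", lambda el: "btn-primary" in el.get("css", "")),
]
element, used = find_with_fallback(dom, strategies)
print(used, element)
```

Returning the name of the strategy that matched means a test report can show that a fallback was used instead of hiding it. Rejecting ambiguous matches is a deliberately conservative choice in this sketch.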

A simple example

Your button starts as:

<button id="add-to-cart">Add to cart</button>

After a redesign:

<button id="add-item" class="btn btn-primary">Add item</button>

The system finds the new element by recognizing:

- similar text

- similar role

- similar styling

- similar location in the DOM

Where this works well

This type of healing genuinely helps when:

- UI layouts shift

- classes/IDs change without affecting behavior

- component library refactors occur

But its usefulness is limited to a narrow slice of UI automation problems.

The Practical Benefits of Self-Healing Automation

Despite the skepticism, self-healing can provide real value, but only in specific, controlled scenarios. By automatically adapting to changes in the application, it reduces the manual effort of test maintenance and makes it easier for teams to keep tests up to date.

Reduced maintenance during UI churn

Harmless UI updates often break dozens of tests. When the UI changes frequently, self-healing can automatically repair broken locators, which reduces manual updates and keeps these failures from polluting CI pipelines.

Less noise in test runs

If every DOM tweak triggers a cascade of failures, teams lose productivity. By adapting to minor changes, self-healing filters out false failures, so a red test is more likely to point at a real problem.

Faster maintenance workflows

When changes are logged and reviewable, engineers can quickly confirm valid updates rather than repairing every selector manually. With fewer broken tests to fix, teams spend less time on maintenance and more time building new tests and expanding coverage.

But these benefits only matter if the tool stays within safe boundaries.

The Risks: How Self-Healing Can Break Your Tests Without You Noticing

Experienced testers aren’t skeptical because they dislike innovation. They’re skeptical because the risks are real. One of the common test automation lies is the belief that self-healing can eliminate all test failures and flaky tests without any human intervention.

Silent false positives (the most dangerous outcome)

Imagine:

- The “Pay now” button disappears due to a regression.

- The self-healing mechanism selects a visually similar but incorrect button.

- The test passes.

- The bug ships to production.

This is why many QA engineers prefer a red test over a falsely green one.
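One way to reduce (not eliminate) this risk is to refuse to heal unless the best candidate is both a strong match and clearly better than the runner-up. The sketch below assumes candidates have already been scored between 0 and 1 by some similarity function; `heal_or_fail`, `LocatorNotFound`, and the threshold values are hypothetical.

```python
from typing import Dict


class LocatorNotFound(Exception):
    """Raised when no candidate is a safe enough replacement, so the test stays red."""


def heal_or_fail(scores: Dict[str, float], min_score: float = 0.8, min_margin: float = 0.2) -> str:
    """Return the name of the healed element, or raise instead of guessing."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    if not ranked:
        raise LocatorNotFound("no candidates in the DOM")
    best_name, best_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0.0
    if best_score < min_score:
        raise LocatorNotFound(f"best candidate {best_name!r} scored only {best_score:.2f}")
    if best_score - runner_up < min_margin:
        raise LocatorNotFound(f"{best_name!r} is not clearly better than the runner-up")
    return best_name


# "Pay now" was removed by a regression; only look-alike buttons remain.
try:
    heal_or_fail({"Pay later": 0.55, "Cancel": 0.30})
except LocatorNotFound as error:
    print("test fails loudly:", error)
```

A guard like this trades some healing coverage for a lower chance of a falsely green build. The right thresholds would have to be tuned on a real suite.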

AI becomes a new source of flakiness

Tools may:

- generate locator updates when nothing was broken

- behave differently between runs

- break downstream assertions unintentionally

You end up debugging the healing algorithm instead of debugging the product.

Loss of trust across the team

Once a suite is known to “lie,” engineers stop trusting automation.

A green build becomes meaningless.

Risks multiply in complex apps

Self-healing performs poorly in:

- RBAC-heavy systems

- dynamic dashboards

- multi-step transactional flows

- heavily conditional UIs

The more context needed to identify an element, the more self-healing struggles.

AI in Test Automation That Actually Works Today

The most productive uses of AI in QA have almost nothing to do with self-healing. Machine learning is increasingly integrated into test automation frameworks, but its most reliable applications today are in areas like code generation and data analysis rather than fully autonomous self-healing.

AI-assisted coding

Many teams report productivity gains from tools like Claude, Cursor, and Copilot for:

- generating Playwright/Cypress scaffolding

- converting tests between frameworks

- refactoring existing suites

- producing boilerplate faster

AI acts like a fast, supervised junior developer — and that is genuinely valuable.

Test data generation

AI shines at:

- generating realistic test accounts

- mutating JSON payloads

- providing edge-case data combinations

This can broaden coverage, especially around edge cases.
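Payload mutation does not have to start with a model. A deterministic baseline like the sketch below is easy to review, and an AI assistant can then be asked to extend the edge-case lists. The `EDGE_VALUES` table and the `mutate` helper are assumptions made for illustration.

```python
import copy
from typing import Any, Dict, Iterator

# Hypothetical edge values per field type; extend to suit the API under test.
EDGE_VALUES: Dict[type, list] = {
    str: ["", " ", "a" * 256],
    int: [0, -1, 2**31],
}


def mutate(payload: Dict[str, Any]) -> Iterator[Dict[str, Any]]:
    """Yield variants of the payload with one field replaced or removed at a time."""
    for key, value in payload.items():
        for edge in EDGE_VALUES.get(type(value), []):
            variant = copy.deepcopy(payload)
            variant[key] = edge
            yield variant
        missing = copy.deepcopy(payload)
        del missing[key]  # also cover the "field absent" case
        yield missing


base = {"email": "user@example.com", "quantity": 1}
for variant in mutate(base):
    print(variant)
```

Changing one field at a time keeps each failing case easy to attribute to a single input.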

Log and failure analysis

AI can:

- cluster similar failures

- identify flaky patterns

- surface likely root causes

This reduces triage time and test fatigue.
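As a point of comparison, failure clustering can be approximated without any model by normalizing error messages into signatures and grouping on them. The sketch below is that rule-based baseline, not an AI technique; the `signature` and `cluster` helpers and the sample messages are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List, Tuple


def signature(message: str) -> str:
    """Replace volatile parts of an error message so similar failures compare equal."""
    msg = re.sub(r"'[^']*'|\"[^\"]*\"", "{str}", message)  # quoted selectors and values
    msg = re.sub(r"0x[0-9a-fA-F]+", "{hex}", msg)           # addresses and hashes
    msg = re.sub(r"\d+", "{num}", msg)                      # timeouts, status codes, ids
    return msg.strip().lower()


def cluster(failures: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Group (test name, error message) pairs by normalized signature."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(groups)


failures = [
    ("checkout", "Timeout 30000ms exceeded waiting for selector '#pay-now'"),
    ("cart", "Timeout 15000ms exceeded waiting for selector '#add-item'"),
    ("profile", "Expected status 200 but got 500"),
]
for sig, tests in cluster(failures).items():
    print(len(tests), sig, tests)
```

A baseline like this is also a useful yardstick: an AI-based clustering feature should do visibly better than simple normalization to justify its cost.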

Natural language → structured test drafts

Useful with human review — not as autonomous test agents.

Where AI still falls short

- autonomous test maintenance

- adapting to complex UI state

- understanding real business rules

- replacing engineering judgment

These remain open research problems, not solved product features.

How to Evaluate AI & Self-Healing Tools Without Getting Burned

Use this framework when assessing any vendor claiming self-healing or AI test automation. Tools with a narrowly scoped, transparent self-healing process are more likely to deliver reliable results.

Ask exactly what the tool is allowed to heal

Safe: selectors only

Dangerous:

- modifying assertions

- updating expected values

- reordering test steps

- masking failures automatically

Require transparency and version control

A safe tool must:

- log every healing attempt

- show diffs of locator changes

- require human approval

- avoid silent auto-updates
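The four requirements above can be pictured as a small data model in which a healed locator is only a proposal until someone approves it. This is a hedged sketch of the idea rather than a description of how any particular product stores healing events; `HealingProposal` and `HealingLog` are invented names.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class HealingProposal:
    test_name: str
    step: int
    old_locator: str
    new_locator: str
    score: float
    status: str = "pending"  # pending -> approved | rejected
    reviewer: Optional[str] = None
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def diff(self) -> str:
        """Human-readable diff of the locator change."""
        return f"- {self.old_locator}\n+ {self.new_locator}"


class HealingLog:
    def __init__(self) -> None:
        self.entries: List[HealingProposal] = []

    def propose(self, proposal: HealingProposal) -> HealingProposal:
        self.entries.append(proposal)  # every attempt is logged, even if rejected later
        return proposal

    def review(self, proposal: HealingProposal, reviewer: str, approve: bool) -> None:
        proposal.status = "approved" if approve else "rejected"
        proposal.reviewer = reviewer

    def pending(self) -> List[HealingProposal]:
        return [p for p in self.entries if p.status == "pending"]


def effective_locator(proposal: HealingProposal) -> str:
    """Only an approved proposal changes what the stored test uses."""
    return proposal.new_locator if proposal.status == "approved" else proposal.old_locator


log = HealingLog()
change = log.propose(HealingProposal("checkout", 4, "#add-to-cart", "#add-item", 0.91))
print(change.diff())
print(effective_locator(change))  # still the old locator until someone approves
```

Storing proposals as plain records also makes it straightforward to commit them to version control alongside the tests.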

Test it on your most complex flows

Don’t rely on the vendor’s demo.

Run it on:

- checkout flows

- onboarding steps

- dashboard interactions

- RBAC-specific screens

Measure:

- reduction in false failures

- increase in false positives

- time spent reviewing changes
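The first two measurements can be computed from a pilot run once each result has been triaged by hand. The sketch below assumes a hypothetical `Outcome` record whose `real_bug` flag comes from that manual triage; the sample numbers are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Outcome:
    passed: bool
    real_bug: bool  # ground truth from manual triage, not from the tool


def false_failures(outcomes: List[Outcome]) -> int:
    """Tests that went red although the product behaved correctly."""
    return sum(1 for o in outcomes if not o.passed and not o.real_bug)


def false_passes(outcomes: List[Outcome]) -> int:
    """Tests that went green although a real bug was present."""
    return sum(1 for o in outcomes if o.passed and o.real_bug)


# Invented pilot data: the same four tests without and with healing enabled.
baseline = [Outcome(False, False), Outcome(False, False), Outcome(False, True), Outcome(True, False)]
healed = [Outcome(True, False), Outcome(True, False), Outcome(True, True), Outcome(True, False)]

print("false failures:", false_failures(baseline), "->", false_failures(healed))
print("false passes:  ", false_passes(baseline), "->", false_passes(healed))
```

In this invented data, healing removes both false failures but also turns the one genuine failure into a false pass, which is the trade-off the evaluation is meant to expose.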

Watch for red flags

Avoid any tool that promises:

- “zero maintenance automation”

- “AI replaces QA”

- “fully autonomous test agents”

Also avoid any tool that ships with:

- no disable switch

- no audit trail

A Realistic Roadmap for Teams Considering Self-Healing

You should not introduce self-healing into an unstable automation stack.

Here’s the roadmap that actually works. It starts with careful initial test design and close collaboration between development and QA, so that tests are robust before any healing is layered on top.

Step 1 — Fix the fundamentals first

Self-healing cannot compensate for:

- poor locator strategy

- missing test IDs

- brittle assertions

- tightly coupled test logic

- inconsistent test architecture

Make the suite structurally healthy first.

Step 2 — Add deterministic resilience before AI

Implement:

- smart waits

- reusable components

- stable selectors

- layered abstraction (Page Objects / Screenplay)

This reduces most flakiness without needing AI.
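A “smart wait” is essentially polling for a condition with a deadline instead of sleeping for a fixed time. Frameworks such as Playwright include auto-waiting out of the box; the helper below is only a framework-agnostic sketch of the idea, and `wait_until` is a hypothetical name.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], Optional[T]], timeout: float = 5.0, interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout}s")
        time.sleep(interval)


# Illustrative use: the "element" only appears on the third poll.
attempts = {"count": 0}


def element_is_ready() -> Optional[str]:
    attempts["count"] += 1
    return "button#add-item" if attempts["count"] >= 3 else None


print(wait_until(element_is_ready, timeout=1.0, interval=0.01))
```

Because the wait returns as soon as the condition holds, it is both faster and more stable than a fixed sleep, and a genuine absence still fails with a clear timeout.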

Step 3 — Use AI where it delivers real ROI

Such as:

- code generation

- data creation

- failure clustering

- documentation → test drafts

This augments human engineers rather than replacing them.

Step 4 — Introduce self-healing in a controlled manner

If you implement self-healing:

- use suggestion mode

- require human approval

- never allow assertion changes

- monitor for sudden increases in pass rates

Self-healing should be an optimization — not a crutch.
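Monitoring for a sudden increase in pass rates can start as a very simple check in CI. The sketch below compares the latest pass rate with the average of recent runs; the function name and the 10-point threshold are assumptions, and a real suite would need its own baseline.

```python
from statistics import mean
from typing import List


def pass_rate_jump(history: List[float], latest: float, max_jump: float = 0.10) -> bool:
    """Return True if the latest pass rate exceeds the recent average by more than max_jump."""
    if not history:
        return False  # nothing to compare against yet
    return latest - mean(history) > max_jump


recent_runs = [0.80, 0.82, 0.81]  # pass rates before self-healing was enabled
if pass_rate_jump(recent_runs, latest=0.99):
    print("Pass rate jumped after enabling healing: review the healed locators.")
```

A jump is not proof of masked bugs, only a prompt to review what was healed before trusting the greener build.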

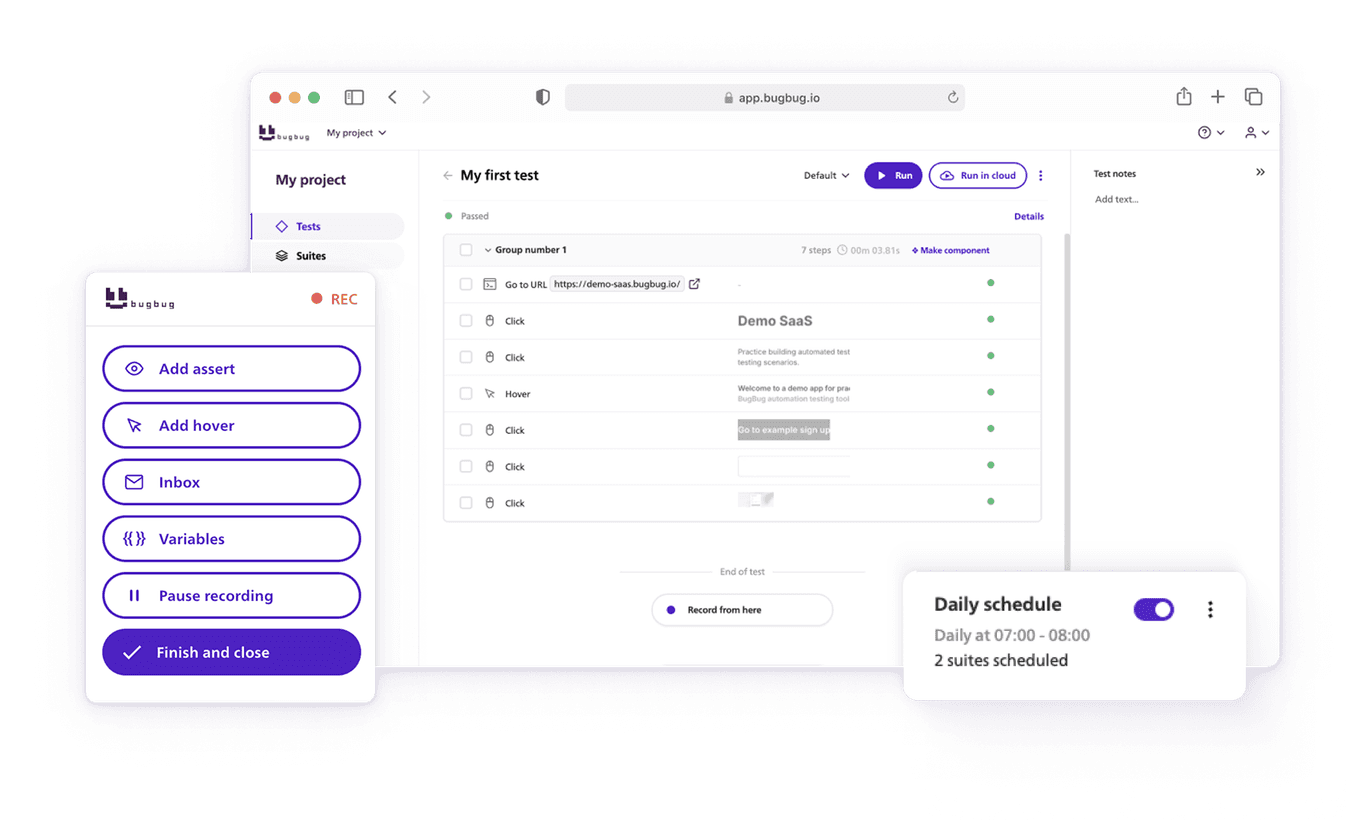

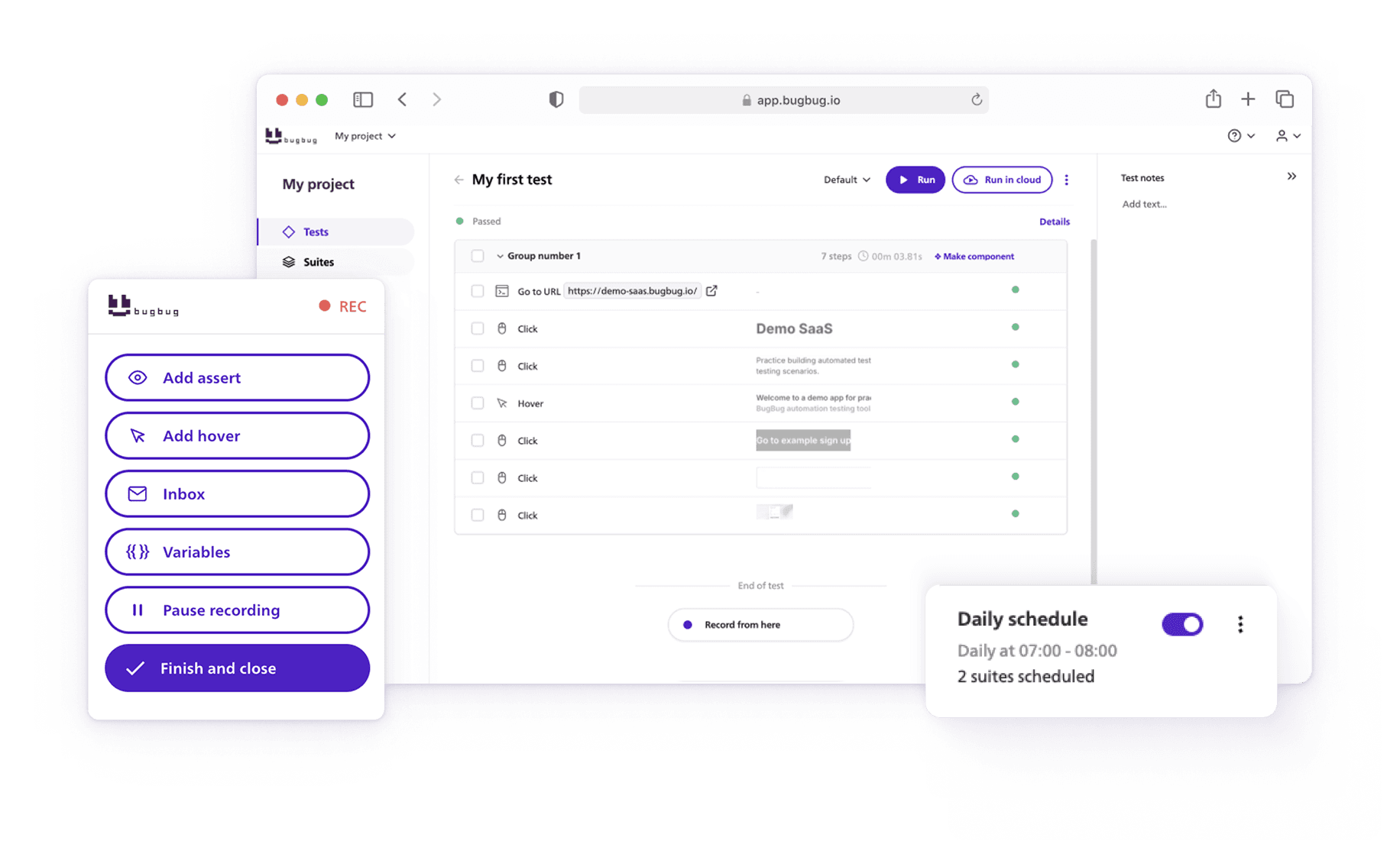

Where BugBug Fits in a World Full of AI Hype (A Stability-First Approach)

The testing industry doesn’t need more AI promises. It needs tools that make automation reliable. Traditional test automation tools often rely on static locators and require constant manual updates. BugBug takes a very different approach from vendors selling “self-healing” or autonomous test agents: instead of trying to patch over unstable tests with inference and fuzzy logic, it focuses on removing instability at the source.

The philosophy is simple:

Build tests that are clear, repeatable, and stable by design — so you don’t need self-healing in the first place.

Here’s how BugBug streamlines automation without resorting to AI magic.

BugBug Uses Stable Selectors, Not AI Guesswork

Many flaky UI tests fail because selectors are brittle. BugBug addresses this with:

- clean, deterministic selectors

- no brittle auto-generated XPaths

- guidance toward stable element identification patterns

This prevents locator churn rather than repairing it after the fact.

Smart Waiting Logic Removes Timing Flakiness

BugBug automatically handles real-world timing issues with:

- intelligent wait conditions

- synchronization with SPA behavior

- no need for manual sleeps or retry hacks

This addresses one of the biggest causes of flakiness.

Reusable Components for Predictable Maintenance

Teams can create reusable flows (login, checkout, navigation) and update them once to propagate changes everywhere.

This is true maintainability, achieved through engineering — not healing heuristics.

A Visual Test Editor That Makes Logic Explicit

BugBug avoids the “black-box” problem entirely:

- every step is visible

- selectors are transparent

- flows are easy to inspect and modify

Nothing happens behind your back.

Fast, Lightweight Execution With No Healing Overhead

Because BugBug does not run inference layers, DOM scoring, or AI retries, execution is:

- fast locally

- fast in CI

- fast in the cloud

Speed comes from deterministic design, not shortcuts.

No Risk of Masking Real Bugs

BugBug never rewrites:

- selectors

- assertions

- expected values

- test logic

Nothing is “healed” automatically.

Nothing hides regressions.

A Practical Tool for Teams Who Prefer Engineering Over Hype

BugBug doesn’t claim:

- autonomous testing

- zero maintenance

- AI replacing QA

Instead, it focuses on:

- stable selectors

- smart waits

- modular test design

- transparency

- predictable execution

These are the fundamentals that eliminate most flakiness before AI ever enters the picture.

By prioritizing reliability and clarity over hype, BugBug helps teams build automation that works consistently in production — not just in vendor demos.

Conclusion — Self-Healing Is Useful, but Only When Treated as a Tool, Not a Strategy

The truth without marketing gloss:

- Self-healing solves a narrow problem: locator churn.

- It does not solve conceptual flakiness, timing issues, or poor test architecture.

- It can easily hide real bugs if left unsupervised.

- AI is far more valuable today as a coding assistant, data generator, and analysis helper.

- Stable automation comes from engineering fundamentals — not autonomous heuristics.

- Tools like BugBug succeed because they prioritize reliability, transparency, and maintainability.

If you want a testing strategy that scales, build stable tests first, then adopt AI tools intentionally — not blindly.

Happy (automated) testing!