
This guide breaks down how AI really fits into software testing — what works, what’s hype, and how you can adopt AI-enhanced workflows without adding unnecessary complexity.

🎯 TL;DR - How to Use AI in Automation Testing?

- AI augments (not replaces) QA across the lifecycle — generating tests from specs/logs, stabilizing execution with self-healing locators, and surfacing smarter insights post-run via predictive analytics.

- Coverage and speed jump as AI prioritizes high-risk areas, trims redundant suites, and enables large-scale parallel testing and cross-platform runs with realistic, data-driven paths.

- Real gains vs. hype: evaluate tools on true learning/adaptation, transparency, and ROI; many “AI” claims are rule-based heuristics that won’t reduce maintenance on repetitive testing tasks or complex scenarios.

- Adopt incrementally: start where data is reliable (visual/self-healing, flakiness prediction), wire into CI/CD, monitor drift over builds, and keep human-led exploratory and accessibility testing in the loop.

- Practical path with BugBug: no-code UI automation, automatic selector validation, active waiting, and scheduling deliver AI-level stability and speed—without heavy ML setup—while pairing well with tools like k6/JMeter for performance and load testing.



Software testing has entered a new era. As applications grow in complexity and teams face the pressure of continuous delivery, traditional testing methods — even automated ones — are struggling to keep pace.

AI-powered automation testing tools are now reshaping how QA engineers approach quality assurance. These platforms automate test case creation, optimize execution, and enhance coverage, becoming a crucial component of modern testing strategies.

Understanding Generative AI in Automation Testing

AI in software testing uses machine learning (ML), natural language processing (NLP), and predictive analytics to improve test design, execution, and maintenance. AI tools apply these techniques to tasks such as unit test generation and UI test creation, making software testing more efficient, resilient, and accessible to users with different technical backgrounds.

It’s essential to distinguish between:

- AI Testing: Testing AI-driven systems like ML models or chatbots.

- AI-Powered Testing: Using AI to test any application more effectively — this is where most QA teams focus.

AI-powered testing enables teams to analyze massive volumes of logs, commit data, and defect patterns to find gaps humans might overlook. The goal isn’t to replace testers — it’s to let them work smarter, not harder.

How AI Actually Works in Testing

AI doesn’t replace your existing automation framework — it makes it smarter. Instead of manually defining every test, waiting for execution results, and analyzing logs, AI learns from patterns in your data and adapts testing dynamically. By automating repetitive tasks, AI frees up QA engineers to focus on more strategic and complex testing activities. It enhances every stage of the testing lifecycle.

Planning — Intelligent Test Design

AI starts by learning from your product’s history: requirements, user stories, bug reports, and commit logs. Using Natural Language Processing (NLP), AI models can read plain-English specifications and automatically suggest or generate corresponding test cases. The same capability simplifies test scripting: users can write scripts in natural language and create, maintain, and adapt automated tests without traditional coding. These models also analyze historical test coverage to highlight missing edge cases and redundant tests.

For instance, an AI system can detect that login-related tests haven’t covered password reset flows, or that a specific API endpoint is being tested redundantly. This ensures comprehensive, risk-based coverage without bloating your test suite.
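The gap and redundancy check described above can be sketched in a few lines. This is a minimal illustration that assumes each test is already tagged with the flows it exercises; the flow names, the test names, and the `redundancy_threshold` parameter are all hypothetical, and a real tool would infer this mapping from specs and execution data rather than from hand-written tags.

```python
from collections import Counter

def coverage_report(required_flows, tests, redundancy_threshold=3):
    """Return (flows with no test, flows covered by >= threshold tests)."""
    # Count how many distinct tests touch each flow.
    counts = Counter(flow for flows in tests.values() for flow in set(flows))
    missing = sorted(f for f in required_flows if counts[f] == 0)
    redundant = sorted(f for f, n in counts.items() if n >= redundancy_threshold)
    return missing, redundant

# Hypothetical data: flows taken from requirements, tests tagged by flow.
required = ["login", "logout", "password_reset", "GET /api/orders"]
tests = {
    "test_login_ok": ["login"],
    "test_login_bad_password": ["login"],
    "test_logout": ["logout"],
    "test_orders_list": ["GET /api/orders"],
    "test_orders_paging": ["GET /api/orders"],
    "test_orders_sorting": ["GET /api/orders"],
}

missing, redundant = coverage_report(required, tests)
print("Missing coverage:", missing)
print("Possibly redundant:", redundant)
```

With this sample data the password reset flow is reported as uncovered and the orders endpoint as a candidate for trimming. A human still decides whether three tests on one endpoint are truly redundant.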

Execution — Adaptive and Self-Healing Automation

During execution, AI helps stabilize tests by learning how the application behaves over time. It also automates the running and analysis of test cases, making execution more efficient and reliable.

Traditional tests fail when a button ID or CSS selector changes. With AI-powered automation, the framework recognizes altered elements by analyzing visual patterns, DOM structures, or context rather than hardcoded locators.

This is known as self-healing automation — where tests fix themselves automatically, reducing the constant maintenance that QA engineers face. Some tools also use machine learning to predict test flakiness, allowing you to prioritize stable tests for CI/CD pipelines.
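To make the self-healing idea concrete, here is a minimal sketch that assumes the framework saved a small "fingerprint" of the element (tag, id, text, class) when the test was recorded. The attribute names, the equal weighting, and the `threshold` value are assumptions for illustration only. Note that this version is a fixed similarity rule; an ML-based tool would typically learn which attributes matter from past runs.

```python
def similarity(fingerprint, candidate):
    """Fraction of saved attributes that the candidate element still matches."""
    matches = sum(1 for key, value in fingerprint.items() if candidate.get(key) == value)
    return matches / len(fingerprint)

def heal_locator(fingerprint, dom_elements, threshold=0.5):
    """Return the closest-matching element, or None if nothing is close enough."""
    best = max(dom_elements, key=lambda el: similarity(fingerprint, el), default=None)
    if best is None or similarity(fingerprint, best) < threshold:
        return None
    return best

# Hypothetical fingerprint saved when the test was recorded.
saved = {"tag": "button", "id": "submit-btn", "text": "Sign in", "class": "btn primary"}

# Hypothetical DOM after a release renamed the button id.
new_dom = [
    {"tag": "a", "id": "forgot", "text": "Forgot password?", "class": "link"},
    {"tag": "button", "id": "login-submit", "text": "Sign in", "class": "btn primary"},
]

healed = heal_locator(saved, new_dom)
print(healed["id"] if healed else "no confident match, fail the test")
```

Returning `None` below the threshold is a deliberate choice: a test that silently "heals" onto the wrong element is worse than one that fails loudly.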

Analysis — Smarter Insights and Continuous Optimization

Once tests are executed, AI doesn’t just report failures — it learns from them. By correlating logs, commits, and historical bug data, AI can pinpoint which modules or commits are most likely responsible for recurring issues. Predictive analytics can also forecast future failures, helping QA teams address them proactively.

For example, AI-driven tools like Mabl and Testim analyze past executions to decide which tests are most relevant for the next build. They dynamically skip redundant tests, run high-risk ones first, and provide confidence scores for release decisions. By leveraging these AI-driven methods, teams can significantly improve test accuracy, ensuring more precise and reliable test results. This means fewer wasted runs, faster pipelines, and data-driven quality decisions.
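As a rough illustration of "run high-risk tests first", the sketch below orders tests by a recency-weighted failure rate. It is not how Mabl or Testim work internally; the `decay` factor, the test names, and the history format (oldest to newest, `True` meaning the run failed) are assumptions.

```python
def failure_score(history, decay=0.5):
    """Recency-weighted failure rate; history is oldest-to-newest, True = failed."""
    score, total, weight = 0.0, 0.0, 1.0
    for failed in reversed(history):  # newest run gets the largest weight
        score += weight * failed
        total += weight
        weight *= decay
    return score / total if total else 0.0

# Hypothetical pass/fail history for the last four builds.
runs = {
    "test_profile": [False, False, False, False],
    "test_search": [True, False, False, False],
    "test_checkout": [False, False, True, True],
}

ordered = sorted(runs, key=lambda name: failure_score(runs[name]), reverse=True)
print(ordered)
```

Here `test_checkout` runs first because its failures are recent, while the single old failure in `test_search` barely moves its score.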

Benefits of AI in Software Testing

AI testing tools go beyond simple automation. They automate testing processes, improve accuracy, and make testing more accessible through features like natural language test creation and machine learning. They also provide continuous intelligence across builds. Let’s look at how these benefits translate into real-world QA impact.

Automatic Test Generation

AI reduces the need for manual test authoring by generating test cases directly from user stories, behavior logs, or design documentation. This accelerates onboarding for new features and ensures consistent test coverage even when requirements change frequently.

Reduced Test Maintenance with Self-Healing

Locator updates, timing issues, and flaky tests are major time sinks in traditional automation. AI detects when the DOM or UI changes and automatically updates selectors, creating self-healing tests that adapt to changes in the application.

Over time, this results in a living test suite — one that evolves with the product, not against it.

Improved Testing Efficiency

AI prioritizes the most relevant tests based on recent code changes and defect probability. By leveraging automated testing, the process is streamlined, reducing manual effort and increasing overall efficiency. Instead of running an entire suite, it selects the subset that provides the highest risk coverage — leading to shorter pipelines and quicker feedback for developers.
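One simple way to picture subset selection is a greedy pick under a time budget. The sketch below assumes you already have, for each test, the files it covers, an estimated defect probability, and its duration; all of these fields and values are hypothetical, and in a real tool the probabilities would come from a trained model rather than being typed in by hand.

```python
def select_tests(tests, changed_files, budget_seconds):
    """Pick tests touching changed files, best risk-per-second first, within budget."""
    changed = set(changed_files)
    relevant = [t for t in tests if changed & set(t["covers"])]
    relevant.sort(key=lambda t: t["defect_prob"] / t["seconds"], reverse=True)
    chosen, used = [], 0
    for test in relevant:
        if used + test["seconds"] <= budget_seconds:
            chosen.append(test["name"])
            used += test["seconds"]
    return chosen

# Hypothetical suite metadata.
suite = [
    {"name": "cart_total", "covers": ["cart.py", "pricing.py"], "defect_prob": 0.30, "seconds": 20},
    {"name": "checkout_e2e", "covers": ["cart.py", "payment.py"], "defect_prob": 0.50, "seconds": 90},
    {"name": "search_filters", "covers": ["search.py"], "defect_prob": 0.20, "seconds": 15},
    {"name": "pricing_rounding", "covers": ["pricing.py"], "defect_prob": 0.10, "seconds": 5},
]

selected = select_tests(suite, changed_files=["pricing.py", "cart.py"], budget_seconds=60)
print(selected)
```

In this example the long end-to-end test is left out of the 60-second budget even though it touches a changed file, which is exactly the kind of trade-off a team should review before trusting automated selection on release builds.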

Enhanced Accessibility and Visual Testing

AI-driven visual comparison tools can spot subtle layout, color, or contrast issues that traditional assertions miss. UI testing verifies the quality and consistency of user interfaces across platforms and helps detect UI issues early. This is particularly useful for accessibility testing, where it helps flag issues relevant to WCAG and usability standards, although it does not replace human-led accessibility review.

Broader Coverage Through Predictive and Parallel Execution

By learning from code history and production usage data, AI can simulate real user behaviors and create test paths humans might overlook. Combined with intelligent test scheduling, it enables massive parallel execution—also known as parallel testing—which allows multiple tests to be executed simultaneously across different environments such as browsers, operating systems, and devices, with minimal redundancy.

The result? Testing that’s not just faster but smarter, adaptive, and continuously improving — ideal for agile teams running rapid release cycles.

Common Use Cases for AI in QA

AI’s biggest strength lies in its versatility. It can be integrated at nearly every level of the testing process — from design and execution to maintenance and reporting. By incorporating AI-driven testing, a software development team can streamline workflows, accelerate testing cycles, and improve overall product quality. Here’s how forward-thinking QA teams are putting it to work.

AI for Test Case Generation

AI-powered systems analyze user stories, design specs, or even production logs to automatically suggest test cases that mirror real-world usage. This automated generation of test cases is a core component of software test automation, making the process faster and more reliable compared to manual efforts.

For example, if an e-commerce app frequently logs “add-to-cart” events from mobile users, AI can infer test flows for those scenarios.

This ensures test coverage reflects actual customer behavior, not just theoretical requirements.
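A minimal sketch of inferring candidate flows from logs follows. It assumes the logs have already been reduced to `(session_id, action)` pairs in time order; the event names and the session format are hypothetical, and real production logs would need cleaning and anonymizing first.

```python
from collections import Counter, defaultdict

def top_flows(events, n=1):
    """Group events by session and return the n most common action sequences."""
    sessions = defaultdict(list)
    for session_id, action in events:
        sessions[session_id].append(action)
    counts = Counter(tuple(actions) for actions in sessions.values())
    return counts.most_common(n)

# Hypothetical, already-anonymized production events in time order.
events = [
    ("s1", "view_product"), ("s1", "add_to_cart"), ("s1", "checkout"),
    ("s2", "view_product"), ("s2", "add_to_cart"),
    ("s3", "view_product"), ("s3", "add_to_cart"), ("s3", "checkout"),
    ("s4", "search"), ("s4", "view_product"),
]

flows = top_flows(events)
for steps, count in flows:
    print(count, "sessions:", " -> ".join(steps))
```

The most frequent sequence becomes a candidate end-to-end test; less common sequences can be reviewed manually for edge cases worth covering.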

Self-Healing Test Automation

Self-healing is one of the most practical applications of AI. When the application UI changes, AI identifies new element paths through context (such as visual clues or DOM proximity) and updates the test automatically. This makes automated tests much easier to maintain, so they remain reliable and effective as the application evolves.

This drastically reduces maintenance time, allowing QA engineers to focus on exploratory and strategic testing instead of constantly repairing broken selectors.

Predictive Defect Analysis

By combining version control data, bug history, and test results, AI predicts which areas of the application are most likely to fail after a new release, helping to reduce test failures by proactively addressing high-risk areas. For instance, if past bugs often appear after API updates, the system can automatically increase testing depth around API endpoints. This risk-based testing approach makes QA more proactive than reactive.
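The idea of combining version control data with bug history can be illustrated with a simple weighted score per module. This is a hand-tuned stand-in for a trained model: the module names, the counts, and the `churn_weight` and `bug_weight` values are assumptions chosen for the example.

```python
def risk_scores(modules, churn_weight=0.4, bug_weight=0.6):
    """Score each module from normalized commit churn and past bug counts."""
    max_commits = max(m["commits"] for m in modules.values()) or 1
    max_bugs = max(m["bugs"] for m in modules.values()) or 1
    return {
        name: round(
            churn_weight * m["commits"] / max_commits
            + bug_weight * m["bugs"] / max_bugs,
            2,
        )
        for name, m in modules.items()
    }

# Hypothetical numbers pulled from version control and the bug tracker.
modules = {
    "ui": {"commits": 25, "bugs": 3},
    "api": {"commits": 40, "bugs": 12},
    "auth": {"commits": 5, "bugs": 6},
}

scores = risk_scores(modules)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking, scores)
```

A ranking like this can drive how much testing depth each area gets after a release, with the API module receiving the most attention in this sample.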

AI-Powered Visual Testing

Visual regression testing can now go beyond pixel-to-pixel comparison. Tools like Applitools leverage computer vision and deep learning to detect semantic differences — layout shifts, color mismatches, or truncated text — across devices and browsers.

This improves confidence in UI stability and reduces false positives.

Smarter Continuous Testing in CI/CD

Modern DevOps pipelines demand instant, accurate feedback. AI-driven platforms such as Functionize, Mabl, or Testim dynamically choose which automated tests to run based on recent commits, historical data, and feature usage frequency. Instead of running all regression tests on every build, AI ensures the most relevant automated tests execute first — achieving a balance between speed and risk coverage.

Together, these applications illustrate why AI has become essential in software testing. It transforms testing from a manual safety net into a learning, adaptive system that scales with the product and the team.

Manual vs AI-Driven Testing — Finding the Right Balance

AI is powerful, but human intuition remains irreplaceable, and the best QA strategies use both. Manual testing is prone to human error on repetitive checks, which AI-driven approaches help reduce for more consistent and accurate results.

| Aspect | Manual Testing | AI-Driven Testing |
|---|---|---|
| Speed | Slower | Continuous and rapid |
| Coverage | Limited | Broad and data-driven |
| Maintenance | High | Self-healing automation |
| Exploratory Insight | Strong | Minimal |
| Scalability | Limited by people | Easily scales with data |

When to use AI testing: repetitive regression, risk-based prioritization, or high-volume test runs. When to stay manual: usability testing, exploratory sessions, or complex user flows.

AI-Driven Test Automation: The New Era of Smart Testing Workflows

The software testing industry is in the middle of a major transformation. What once relied heavily on manual testers and repetitive scripting is now shifting toward AI-powered software testing: systems that learn, adapt, and optimize over time. An AI testing tool offers dynamic test case generation, self-healing automation, and intelligent test execution, setting it apart from traditional manual testing tools.

From Traditional Automation to AI-Driven Testing

In traditional automated testing, QA engineers manually design test scripts, define test data, and maintain them as the product evolves. This approach is faster than manual testing, but it introduces a heavy maintenance burden and often suffers from slow test execution.

AI has changed that equation. With AI-driven test automation, tools can now automatically generate test cases, simulate user behaviors, and adjust test steps dynamically when the UI changes. This reduces manual effort while keeping accuracy high, especially in complex testing scenarios where traditional frameworks struggle.

By leveraging machine learning algorithms, AI tools can analyze historical bug data, past releases, and defect patterns to identify high-risk test cases. This ensures your testing efforts are focused where they’ll have the greatest impact.

Intelligent Test Case Generation with Generative AI

One of the most promising areas in modern QA is generative AI testing tools. These platforms use Generative AI models trained on real-world testing data to speed up the test creation process.

Here’s how they help:

- Intelligent test case generation: Automatically creating meaningful test scenarios based on requirements or user stories.

- Test data generation: Producing realistic datasets that reflect production behavior without exposing sensitive information.

- AI-powered test generation: Suggesting alternative test flows or edge cases human testers might overlook.

This type of AI-powered testing tool doesn’t just automate — it augments the thinking process of QA engineers. It bridges software development and software testing processes, ensuring both stay aligned through each sprint.

Challenges and Limitations of AI in Software Testing

AI is changing the way teams think about quality assurance, but it’s far from a magic bullet. In practice, even the most advanced AI testing platforms come with trade-offs — technical, organizational, and financial. Traditional testing processes often require significant manual intervention, leading to delays and inconsistencies that AI-driven automation aims to reduce. Understanding these limitations helps QA teams adopt AI responsibly and avoid being blindsided by overpromised capabilities.

1. Data Dependency — The Foundation That Can Break Everything

AI thrives on data. The more high-quality examples it learns from, the more accurate its predictions and automation become. Unfortunately, many QA teams simply don’t have that data.

If your defect logs are inconsistent, your test cases are poorly tagged, or your codebase has limited historical traceability, AI models may produce weak or misleading results.

For instance, an AI defect prediction tool trained on clean enterprise data might underperform dramatically in a startup environment where issue tracking is less structured.

Solution: Start small. Use AI in areas with reliable data — such as UI automation or test maintenance — and gradually expand as your datasets mature.

2. Explainability — When AI Becomes a Black Box

One of AI’s biggest weaknesses is that it can make predictions (like “this test is likely to fail”) without explaining why. For QA engineers and developers, that’s a serious problem.

Testing is about evidence and traceability — and if AI can’t justify its conclusions, it’s hard to trust them in production decisions.

Imagine your AI system deprioritizes 30% of regression tests without clear reasoning. You might save time, but you also risk missing critical bugs.

Solution: Favor tools that provide transparent reasoning or confidence scores, and always validate AI output with human oversight. Treat AI’s suggestions as guidance, not gospel.
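Treating suggestions as guidance can be enforced with a small gate in the pipeline. The sketch below assumes the tool emits "skip this test" suggestions with a confidence value and a reason string; that output format, the test names, and the `min_confidence` threshold are hypothetical.

```python
def triage_skip_suggestions(suggestions, min_confidence=0.9):
    """Accept a skip only if it is both explained and high-confidence."""
    accepted, needs_review = [], []
    for s in suggestions:
        explained = bool(s.get("reason"))
        if explained and s["confidence"] >= min_confidence:
            accepted.append(s["test"])
        else:
            # Keep the test in the run and flag the suggestion for a human.
            needs_review.append(s["test"])
    return accepted, needs_review

# Hypothetical tool output.
suggestions = [
    {"test": "test_legacy_export", "confidence": 0.97, "reason": "no covered file changed in 40 builds"},
    {"test": "test_payment_refund", "confidence": 0.95, "reason": ""},
    {"test": "test_profile_avatar", "confidence": 0.62, "reason": "rarely fails"},
]

accepted, needs_review = triage_skip_suggestions(suggestions)
print("Skip:", accepted)
print("Run and review:", needs_review)
```

An unexplained suggestion is never auto-accepted here, even at high confidence, which keeps the traceability that release decisions need.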

3. Domain Adaptation — No One-Size-Fits-All Model

AI models trained on generic datasets don’t automatically perform well in every domain. Testing a healthcare platform, for example, is vastly different from testing a fintech dashboard or a gaming application.

Each has unique user behavior patterns, APIs, data structures, and compliance requirements — meaning an AI trained in one context might misinterpret signals in another.

Solution: Choose tools that support retraining or customization. The best AI testing platforms allow you to feed domain-specific data (e.g., logs, UI layouts, or error types) to fine-tune the model to your environment.

4. Cost and Complexity — The Reality Behind “Smart” Tools

While many AI tools promise efficiency, the setup can be complex and costly. You often need additional infrastructure, dedicated training time, and engineers with ML experience to manage or interpret results.

For small QA teams or startups, that’s a non-starter. You might spend more time managing the AI than running tests.

Even simple use cases, like AI-based visual testing, can balloon in complexity if they require proprietary cloud services or expensive per-test pricing.

Solution: Adopt AI incrementally and measure ROI after each phase. Many teams find that lightweight, no-code automation tools (like BugBug) deliver 80% of the value with a fraction of the effort.

5. AI Hype — Separating Marketing from Reality

AI is a hot keyword — and many vendors are quick to use it even when their “intelligence” is just rule-based scripting. These tools may claim self-healing capabilities or predictive defect detection but actually rely on static heuristics like “retry three times before failing” or “update selector if similar element exists.”

This isn’t true AI; it’s clever automation wrapped in buzzwords. Claims like these spread misconceptions about what AI testing can do and can hinder the adoption of genuine AI-driven testing technologies.

Solution: Look beyond marketing claims. Evaluate tools based on their actual learning mechanisms, transparency, and results over time. Ask vendors how their model improves with data and what real-world metrics it’s optimized for.

Future Trends in AI-Driven Testing

Looking ahead, we’ll see even deeper AI integration into QA:

- Predictive, risk-based testing that continuously adjusts coverage and uses AI to optimize test coverage by prioritizing high-risk areas.

- AI-assisted exploratory testing, guiding testers toward likely problem areas.

- Autonomous testing agents that generate, run, and adapt tests independently.

- Data-driven quality analytics feeding back into product decisions.

The role of QA engineers will shift toward AI supervision and strategy: designing better test logic, validating predictions, and managing automated insights.


Where BugBug Fits In

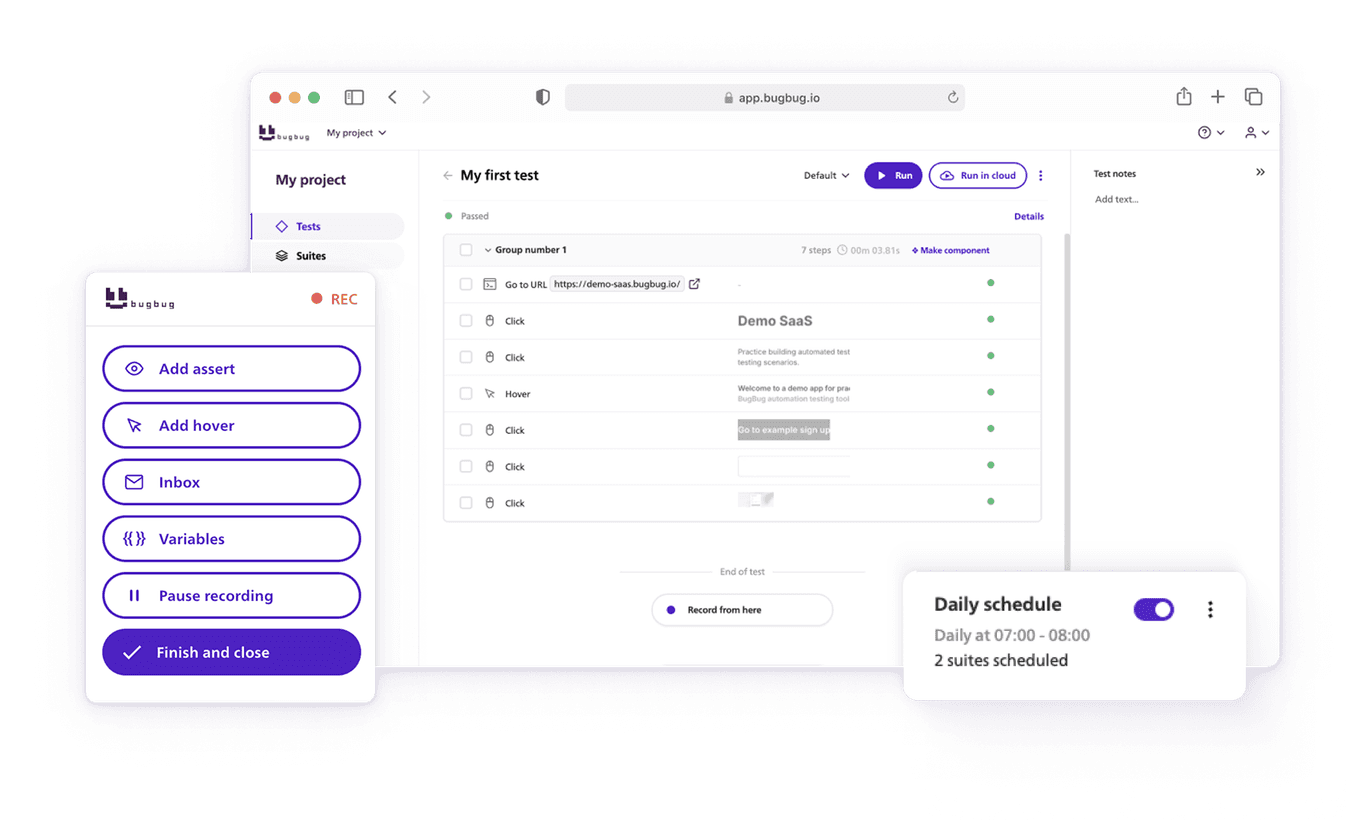

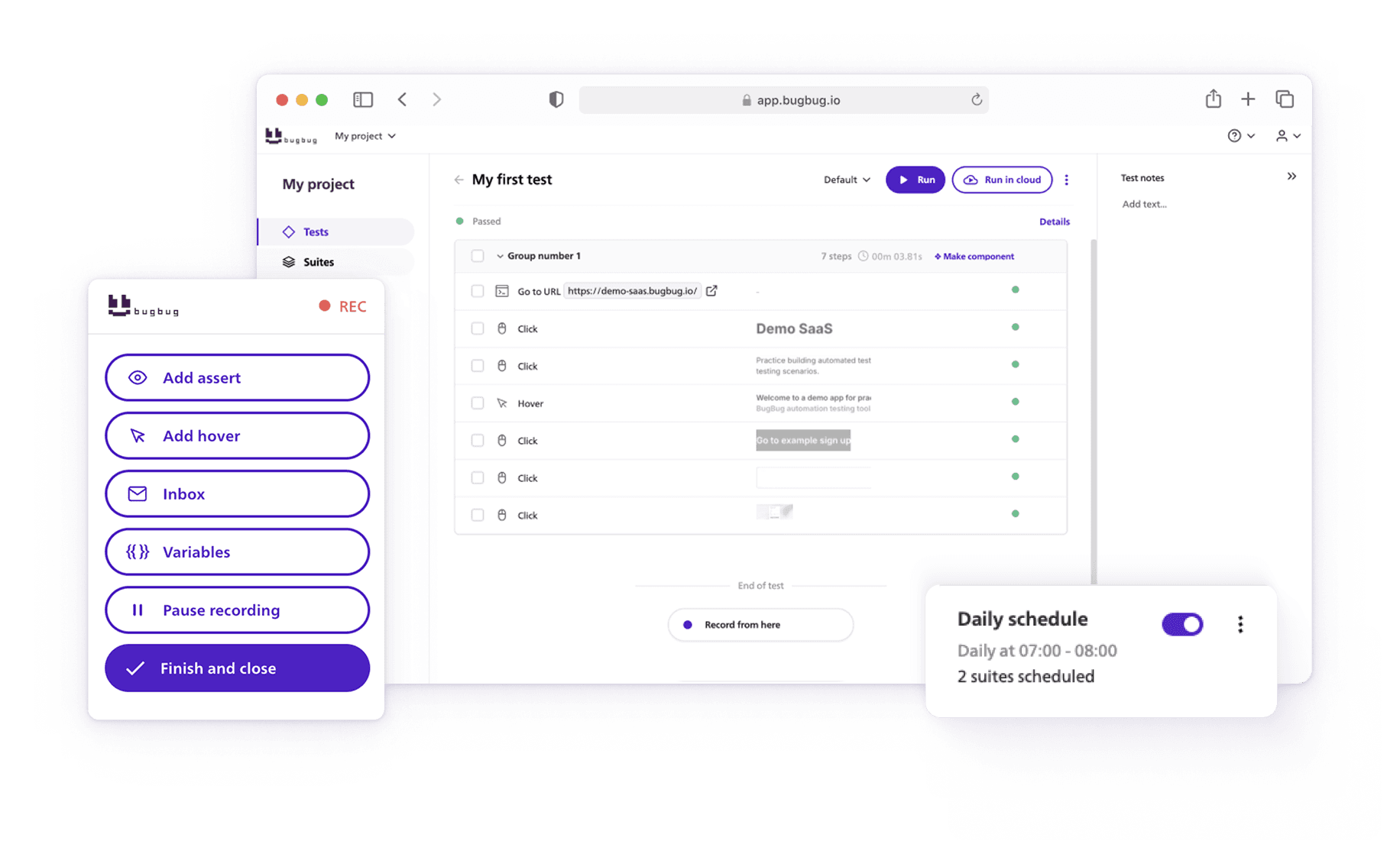

The market is flooded with “AI testing” platforms promising full autonomy — but often at the cost of usability and transparency. BugBug takes a more practical route.

Tools like Testim, Applitools, Mabl, and Functionize push the boundaries of AI-powered automation in 2025, and some of these platforms also offer performance and load testing as part of their feature set. BugBug focuses on delivering comparable efficiency and stability through simplicity.

BugBug’s no-code interface, automatic selector validation, and active waiting mechanism achieve the same outcomes AI promises — faster, more stable tests with minimal maintenance — without requiring complex AI setup or data modeling.

In other words, BugBug bridges the gap between AI ambition and practical automation. It gives QA teams AI-level performance while staying accessible to startups, developers, and small QA teams that need speed and reliability above all else.

By focusing on usability instead of hype, BugBug proves that effective automation doesn’t always depend on machine learning — sometimes, it’s about smart engineering.
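The active waiting mentioned above is a good example of stability that needs no machine learning. The sketch below shows the general polling technique, not BugBug’s actual implementation; the `wait_until` helper, its parameters, and the simulated `button_is_ready` check are all hypothetical.

```python
import time

def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll condition() until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)

# Simulated element that becomes ready on the third check.
calls = {"n": 0}

def button_is_ready():
    calls["n"] += 1
    return calls["n"] >= 3

ready = wait_until(button_is_ready, timeout=2.0, interval=0.01)
print("ready:", ready, "after", calls["n"], "checks")
```

Compared with a fixed `sleep`, polling proceeds as soon as the element is ready and only fails after the full timeout, which removes a common source of flaky tests.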

Conclusion — Augment, Don’t Replace

AI testing tools have become indispensable in 2025. They automate test generation, self-heal failing scripts, and optimize coverage — saving teams time while improving quality.

But success lies in balance: AI enhances human testers; it doesn’t replace them. The real opportunity is in using AI responsibly — to reduce manual toil, guide attention, and make better decisions about what to test.

And for teams that value simplicity, BugBug offers a human-first automation experience that delivers the benefits of AI-driven testing — reliability, adaptability, and speed — without the complexity or overhyped promises.