Choosing Generative AI testing tools in 2026 feels deceptively simple. Every vendor promises self-healing tests, AI-driven insights, and zero maintenance. Yet teams who’ve lived through failed rollouts know the truth: AI can reduce testing risk—or quietly multiply it.

This article is not another list of the best AI automation tools. It’s a vendor evaluation checklist designed for teams who already understand testing fundamentals and want to avoid expensive, hard-to-reverse mistakes.

If you want the strategic backdrop before diving into vendor questions, revisit “AI Testing Tools: Revolution or Buzzword?”. What follows is the practical, uncomfortable part: what to ask before you sign anything.

🎯 TL;DR - Generative AI Tools Evaluation Checklist

- “AI-powered” testing tools vary wildly — many are black boxes optimized for demos, not real CI/CD pressure, and can increase risk if their behavior isn’t transparent or explainable.

- The biggest danger isn’t missing AI, it’s overcommitting too early — once your tests, pipelines, and reports depend on opaque AI behavior, switching tools becomes costly and painful.

- Use vendor questions as a risk filter, not a feature checklist — vague answers around AI transparency, debugging, flakiness, or CI trust are often deal-breakers.

- Maintainability and debuggability matter more than speed — self-healing, no-code, and generative tests can trade short-term velocity for long-term opacity and false confidence.

- The safest tools let AI assist, not override, testers — predictable behavior, readable tests, easy exports, and human control reduce lock-in and keep QA trustworthy over time.

Why Evaluating AI Test Automation Tools Is Risky Right Now

AI entered software testing faster than most QA processes could adapt.

The result:

- Tools branded as “AI-powered” with radically different meanings

- Black-box automation that hides failures instead of explaining them

- Platforms optimized for demos, not real CI/CD pressure

For small and mid-sized teams especially, the biggest risk isn’t missing out on AI; it’s overcommitting to it too early. Manual testing has long been the foundation of QA, but it is time-consuming and resource-intensive. Generative AI testing tools promise to cut that effort by automating repetitive tasks, yet leaning on unproven AI introduces risks of its own. Once your test suite, pipelines, and reporting depend on opaque behavior, switching tools becomes costly.

This checklist exists to flip the balance of power back to the buyer.

👉 Check also: How to Use AI in Automation Testing?

How to Use This Checklist to Eliminate Bad-Fit Vendors Early

This is not a scoring spreadsheet.

Use it as a risk filter:

- One unclear answer in a high-risk area (AI transparency, debugging, CI/CD) is often enough to walk away.

- Ask vendors to show, not describe, their answers during demos.

- Apply the same questions whether you’re a 5-person startup or a scaling SaaS team—only your tolerance for risk changes.

If a vendor can’t answer these confidently, imagine relying on them during a production incident.
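The "risk filter, not a scoring spreadsheet" idea can be sketched in a few lines of Python. This is only an illustration: the `Answer` type, the `HIGH_RISK_AREAS` set, and both function names are hypothetical, and the rule encoded here (one undemonstrated answer in a high-risk area rejects the vendor) is the strictest reading of the guidance above.

```python
from dataclasses import dataclass

# Areas this checklist treats as high-risk. The exact set is an assumption;
# adapt it to your own context.
HIGH_RISK_AREAS = {"ai_transparency", "debugging", "ci_cd"}


@dataclass
class Answer:
    area: str           # checklist area the question belongs to
    question: str       # the question put to the vendor
    demonstrated: bool  # True only if shown live in a demo, not just described


def deal_breakers(answers: list[Answer]) -> list[Answer]:
    """Return answers in a high-risk area that the vendor did not demonstrate."""
    return [a for a in answers if a.area in HIGH_RISK_AREAS and not a.demonstrated]


def should_walk_away(answers: list[Answer]) -> bool:
    """A single undemonstrated answer in a high-risk area is enough to reject."""
    return len(deal_breakers(answers)) > 0


answers = [
    Answer("ci_cd", "Can results be trusted in CI/CD pipelines?", demonstrated=True),
    Answer("debugging", "Can you clearly see why a test failed?", demonstrated=False),
    Answer("pricing", "Is pricing tied to AI usage, executions, or seats?", demonstrated=False),
]
print(should_walk_away(answers))  # True: the debugging answer was never shown
```

Note that the unanswered pricing question does not trigger rejection here. There is no weighted total to argue about, only a short list of deal-breakers.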

What Does “AI” Actually Mean in This Tool?

Before features, pricing, or roadmaps, establish one thing: what is genuinely AI here? Many generative AI testing tools are built on AI models and machine learning algorithms, so you need to know exactly which part of the testing workflow the AI touches before you can evaluate anything else.

- What specific testing problems does AI solve in this tool? “Speed” is not a problem statement.

- Which parts of the workflow are not AI-driven? Healthy tools still work without AI assistance.

- Is the AI deterministic or probabilistic? Can you expect the same outcome from the same input?

- Can testers explain AI-driven decisions to others? If you can’t explain it, you can’t trust it.

- Can AI features be disabled without breaking tests? This is essential for regulated environments and CI reliability.

If these answers feel vague, you’re dealing with marketing—not engineering.
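The "same outcome from the same input" question is easy to test during a trial. The sketch below is a minimal harness, not any vendor's API: `generate` stands in for whatever call produces a test or a locator from a prompt, and the two example callables are fakes written only to show the check.

```python
from collections import Counter
from typing import Callable


def distinct_outputs(generate: Callable[[str], str], prompt: str, runs: int = 5) -> Counter:
    """Call `generate` repeatedly with the same input and count each distinct output."""
    return Counter(generate(prompt) for _ in range(runs))


def is_repeatable(generate: Callable[[str], str], prompt: str, runs: int = 5) -> bool:
    """True when every run produced exactly the same output."""
    return len(distinct_outputs(generate, prompt, runs)) == 1


# Hypothetical stand-ins for a vendor call, used only to exercise the check.
def stable_generator(prompt: str) -> str:
    return f"click('{prompt}')"


_calls: list[str] = []


def drifting_generator(prompt: str) -> str:
    _calls.append(prompt)
    return f"click('{prompt}')  # variant {len(_calls)}"


print(is_repeatable(stable_generator, "Log in"))    # True
print(is_repeatable(drifting_generator, "Log in"))  # False
```

A probabilistic tool is not automatically a bad one, but the vendor should be able to say which outputs can vary and how you would pin them down for CI.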

Test Creation & Readability at Scale

Fast test creation is impressive. Maintainable tests are valuable.

- How are tests created: recording, prompts, code, or hybrid? Ask which of these paths rely on AI-powered test case generation and which work without it.

- How readable are tests without AI assistance? Imagine onboarding a new QA engineer mid-sprint. Test scripts generated from user stories through prompt engineering can be readable and maintainable, but check that on real output rather than assuming it.

- What happens when UI structure changes slightly? Minor DOM changes should not cause cascading failures.

- Does “self-healing” silently modify tests? Silent fixes are silent risks.

- Can humans review and approve AI-generated changes? Automation should assist judgment, not replace it.

Teams often discover too late that “no-code + AI” trades short-term speed for long-term opacity.
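To make "silent fixes are silent risks" concrete, here is a minimal Python sketch of the alternative: an AI-suggested selector change is recorded as a proposal and only applied once a named person approves it. Everything in it (`ProposedFix`, `SuiteUnderReview`, the selectors) is hypothetical and illustrates the workflow to ask vendors about, not how any particular tool implements self-healing.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProposedFix:
    test_name: str
    old_selector: str
    new_selector: str
    approved_by: Optional[str] = None  # stays None until a human signs off


@dataclass
class SuiteUnderReview:
    selectors: dict[str, str]  # test name -> selector currently in use
    pending: list[ProposedFix] = field(default_factory=list)
    history: list[ProposedFix] = field(default_factory=list)

    def propose(self, test_name: str, new_selector: str) -> ProposedFix:
        """Record an AI-suggested change without touching the test itself."""
        fix = ProposedFix(test_name, self.selectors[test_name], new_selector)
        self.pending.append(fix)
        return fix

    def approve(self, fix: ProposedFix, reviewer: str) -> None:
        """Apply the change only after a named reviewer approves it."""
        fix.approved_by = reviewer
        self.selectors[fix.test_name] = fix.new_selector
        self.pending.remove(fix)
        self.history.append(fix)  # audit trail of every applied change


suite = SuiteUnderReview(selectors={"login": "#submit"})
fix = suite.propose("login", "button[type=submit]")
print(suite.selectors["login"])  # #submit (nothing changed yet)
suite.approve(fix, reviewer="qa-lead")
print(suite.selectors["login"])  # button[type=submit]
```

The `history` list is the part worth asking vendors about: it is what lets a team audit AI-driven changes months later.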

Maintenance, Flakiness & False Confidence

AI should reduce noise. Some tools simply hide it. Long-term success with AI-powered testing tools depends on effective test maintenance, including identifying redundant test cases and optimizing the suite.

- How does the tool detect flaky tests?

- Does AI suppress failures or explain root causes? Suppression feels good—until bugs escape.

- Can teams audit historical AI-driven changes?

- Does the system require retraining over time? Retraining is maintenance, even if vendors avoid the word.

- What happens when AI guesses wrong? This question matters more than how often it guesses right.

False confidence is more dangerous than slow feedback.
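One way to judge a vendor's answer on flaky-test detection is to compare it with a simple baseline you can explain in a sentence. The Python sketch below uses a common working definition, assumed here rather than taken from any tool: a test is flaky if it both passed and failed on the same commit. The function name and the run format are hypothetical.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> set[str]:
    """Return test names that both passed and failed on the same commit.

    Each run is a (test_name, commit_sha, passed) tuple.
    """
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    # Two distinct outcomes for one (test, commit) pair means the code did not
    # change but the result did.
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}


runs = [
    ("checkout", "abc123", True),
    ("checkout", "abc123", False),  # same commit, different result: flaky
    ("login", "abc123", False),
    ("login", "def456", True),      # result changed with the code: not flaky
]
print(find_flaky(runs))  # {'checkout'}
```

If a tool's AI-based detection cannot at least explain its verdicts as clearly as this baseline does, that is a signal about how it will behave when it guesses wrong.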

Debugging, Reporting & CI/CD Trust

Most test failures don’t happen during demos—they happen in CI, under time pressure.

- Can you clearly see why a test failed? You can only trust automated QA if you can interpret AI-generated test results and confirm they are accurate.

- Are logs, screenshots, and videos always accessible? Rapid feedback in CI/CD depends on being able to run tests and validate the results quickly.

- Does AI-generated analysis add insight—or just labels?

- Can results be trusted in CI/CD pipelines? “It passed locally” is not an answer.

- How well does the tool fit developer workflows? If developers ignore results, automation fails.

The best reporting isn’t flashy—it’s actionable.

Integration, Workflow Fit & Team Scalability

AI tools rarely fail technically. They fail culturally. To ensure successful adoption and scalability, it’s crucial that AI-powered testing tools align with the needs of QA teams, development teams, and robust testing infrastructure.

- How does the tool integrate with CI/CD systems?

- Can tests run locally as well as in the cloud?

- How well does it support modern frontend frameworks?

- How steep is onboarding for new QA hires?

- Can it scale without forcing process changes? Tools should adapt to teams, not the other way around.

If a tool demands you “rethink QA” just to function, be cautious.

Cost, Lock-In & Long-Term Ownership Risks

This is where most teams under-question vendors.

- Is pricing tied to AI usage, executions, or seats?

- Can you export tests in a usable format?

- What happens if you downgrade or leave?

- Is support human-led when AI features fail?

AI-heavy tools often look affordable early—and expensive later.
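A quick projection shows how that can happen when pricing has several dimensions. The Python sketch below is arithmetic only: the `Pricing` and `Usage` types are hypothetical and every number is invented for illustration, so substitute the figures from the vendor's actual quote.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # All prices are in cents and are made-up numbers for illustration.
    per_seat: int
    per_execution: int
    per_ai_action: int


@dataclass(frozen=True)
class Usage:
    seats: int
    executions: int  # test runs per month
    ai_actions: int  # AI-assisted operations per month


def monthly_cost_cents(pricing: Pricing, usage: Usage) -> int:
    """Sum the three pricing dimensions for one month."""
    return (
        usage.seats * pricing.per_seat
        + usage.executions * pricing.per_execution
        + usage.ai_actions * pricing.per_ai_action
    )


pricing = Pricing(per_seat=5000, per_execution=2, per_ai_action=10)
pilot = Usage(seats=5, executions=2_000, ai_actions=500)
scaled = Usage(seats=20, executions=50_000, ai_actions=20_000)

print(monthly_cost_cents(pricing, pilot) / 100)   # 340.0
print(monthly_cost_cents(pricing, scaled) / 100)  # 4000.0
```

With these invented numbers, seats make up most of the pilot bill, while AI usage is the largest line item at scale. That shift is why it is worth projecting the usage you expect in a year rather than the usage in the trial.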

How to Compare AI Test Automation Tools Without Falling for Hype

Comparison pages are useful, but only if you read them critically. Align tool selection with your overall testing strategy, and judge AI features by whether they help you prioritize testing effort where it has the most impact.

Instead of asking “Which has more AI features?”, ask:

- Which tool exposes fewer unknowns?

- Which tool fails predictably?

- Which tool can I replace if needed?

This mindset is why many teams shortlist both AI-first platforms and simpler automation tools before deciding.

Where BugBug Fits as a Low-Risk Choice

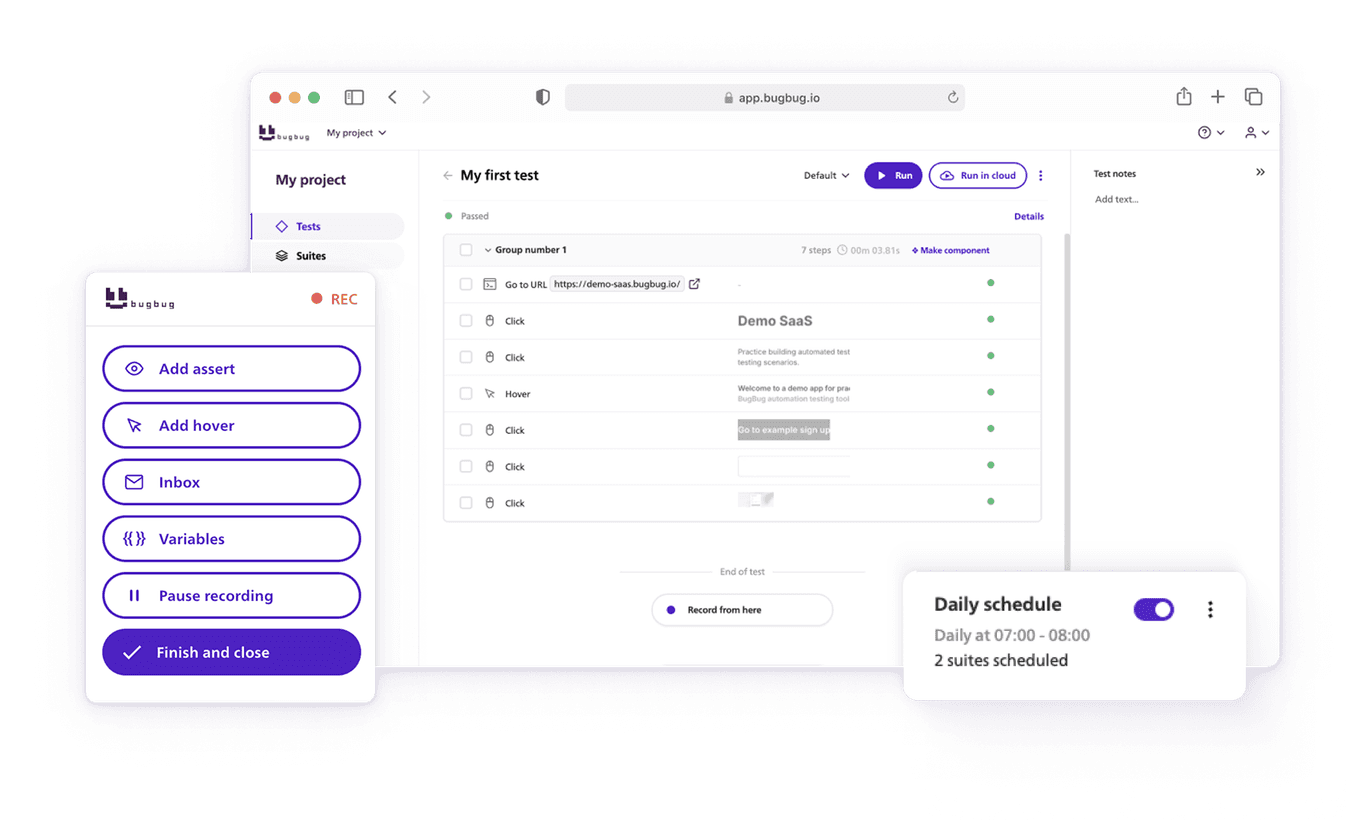

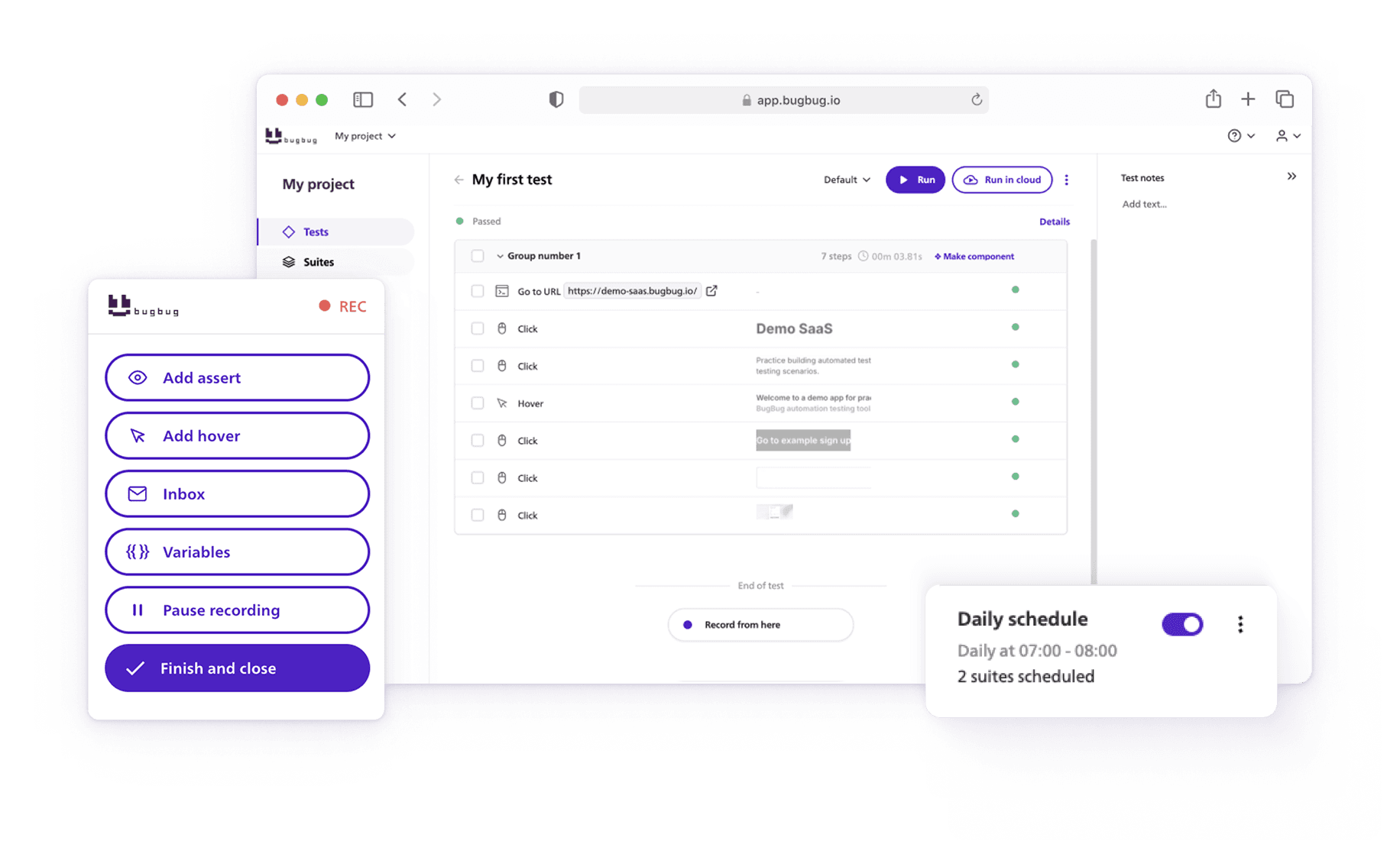

BugBug often appears in AI tool evaluations for a counterintuitive reason: it doesn’t force teams to bet everything on AI. BugBug supports codeless testing, enables rapid creation and execution of automated tests, and makes it easy to manage and generate test data for comprehensive coverage.

For teams evaluating AI tools for software testing, BugBug is frequently chosen when:

- Predictability matters more than autonomy

- Tests must stay readable and debuggable

- AI should assist testers, not override them

In many comparisons, BugBug becomes the “control option”—the tool teams trust to behave the same way today, tomorrow, and under CI pressure. That makes it a low-risk choice, especially for growing teams.

Final Takeaway — AI Should Reduce Testing Risk, Not Increase It

At the end of the day, teams evaluating AI-driven test automation are not looking to run more tests. They want thorough testing that actually improves confidence in software development. That means supporting realistic test scenarios, maintaining strong test coverage, and reliably executing a complete test suite across mobile and web applications, browsers, and platforms without turning QA into a black box.

Whether you’re creating test cases, reusing test scripts, validating visual elements through visual testing, or extending automation into API, performance, and load testing, the real value lies in clarity and control. For many teams, especially those led by experienced test engineers, a codeless approach that helps generate test cases automatically, reuse test assets, and execute tests consistently, without over-reliance on opaque AI systems or experimental generative AI technologies, proves more sustainable.

Happy (automated) testing!