- What Does Test Coverage Really Measure?

- Different Types of Test Coverage (and Why They Matter)

- Real-World Examples of Test Coverage

- Test Coverage vs Code Coverage: What’s the Difference?

- What Test Coverage Actually Reveals (and What It Doesn’t)

- Increasing Coverage Without Overdoing It

- Test Coverage Metrics

- Test Coverage Techniques

- Why Test Coverage Is Worth Tracking

- But Don’t Chase 100% Coverage Blindly

- How to Improve Your Test Coverage (Without Burning Out Your Team)

- Beyond Code Coverage: Looking at the Full Picture

- Final Thoughts: What Test Coverage Teaches Us

Test coverage is a key measure of how thoroughly your application has been tested. Unlike code coverage, which focuses only on executed lines of code, test coverage looks more broadly at how well your tests address functionality, requirements, and overall system behavior.

In this guide, we’ll explain what test coverage is, why it matters, the main types to track, and how to improve it to ensure higher software quality.

What Does Test Coverage Really Measure?

In simple terms, test coverage shows how much of your application has been tested. It helps teams see whether their testing efforts have reached all the important parts of the software. That includes code, user flows, edge cases, and business requirements. Measuring coverage helps teams evaluate testing completeness and identify gaps in their test suites.

But it’s not just about hitting a high percentage. The real value comes from understanding what hasn’t been tested, and why. Coverage metrics can also be read alongside defect density (defects found relative to the size of the code) as a rough indicator of software quality. Together, these insights help teams catch bugs earlier, improve test cases, and deliver higher-quality releases.

Different Types of Test Coverage (and Why They Matter)

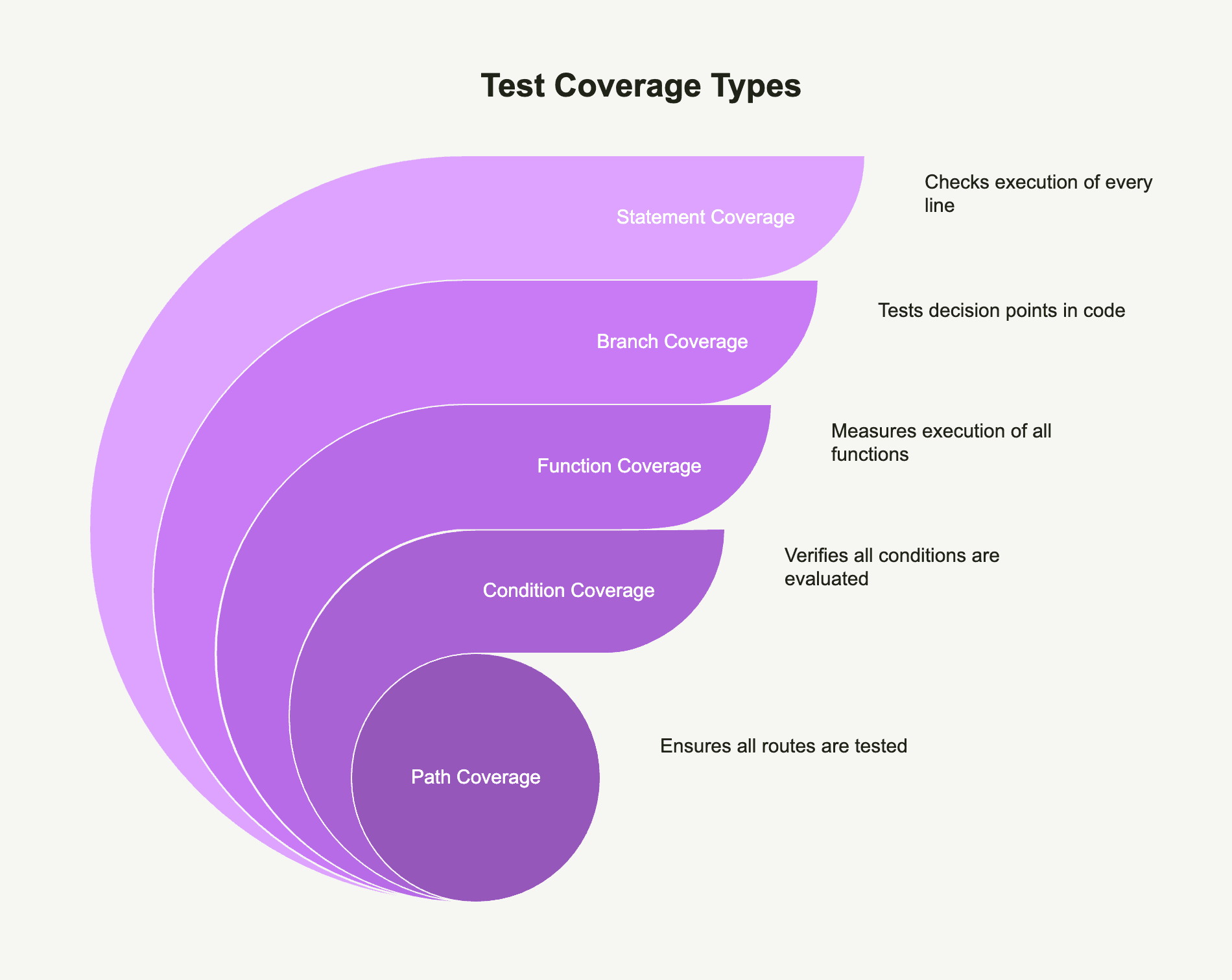

Let’s explore the most common test coverage types you’ll come across. Test cases are designed and executed to achieve these different types of coverage, ensuring that code, requirements, and functionality are thoroughly tested:

- Statement Coverage: Often reported as line coverage. It checks whether every statement (in practice, usually every line) of code has run at least once. Great for spotting code that your unit tests never reach.

- Branch Coverage: Looks at decision points like if/else statements. It ensures both the “true” and “false” outcomes have been tested. This type digs deeper than just checking lines of code.

- Function Coverage: Measures whether all functions or methods were executed during testing. It’s useful to ensure all defined behaviors are tested.

- Condition Coverage: Verifies each condition in your code evaluates both to true and false at least once. Especially helpful for complex logic.

- Path Coverage: The most comprehensive. It tries to cover every possible route through your application. Best for critical systems, but often hard to achieve fully.

Comprehensive coverage is especially crucial during system testing, where the goal is to identify and fix defects across all system functionalities before deployment.

Using a combination of these helps ensure your software is tested from different angles. Choosing the coverage types that fit your project’s risks is essential for identifying gaps and achieving thorough, high-quality testing.
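To make the difference between statement and branch coverage concrete, here is a minimal sketch. The function `apply_discount` and its 10% member discount are hypothetical, invented only for illustration.

```python
def apply_discount(price_cents: int, is_member: bool) -> int:
    """Return the price in cents after an optional 10% member discount."""
    if is_member:
        price_cents -= price_cents // 10
    return price_cents


# This single check executes every statement in apply_discount,
# so statement (line) coverage is already 100%.
assert apply_discount(1000, True) == 900

# The "false" outcome of the `if` is still untested at this point.
# Branch coverage only reaches 100% once the non-member case runs too.
assert apply_discount(1000, False) == 1000
```

With only the first check, a coverage tool would typically report full statement coverage but incomplete branch coverage, which is why the two types are worth tracking separately.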


Real-World Examples of Test Coverage

Understanding coverage gets easier with examples:

Unit Test Coverage

Out of 100 functions, 90 have been tested. That’s 90% coverage.

Function Coverage

If 45 out of 50 functions were tested, you’re at 90% function coverage.

Condition Coverage

If all 10 conditions in your code are tested for both true and false, you’re at 100% condition coverage.

Requirement Coverage

If your app has 30 features and you’ve tested 27, that’s 90% requirement coverage. Here, testing requirements define the specific criteria and scenarios—like handling negative numbers or different user interactions—that need to be covered to ensure comprehensive testing.

Risk Coverage

Found 10 high-risk areas? If you’ve tested all 10, that’s 100% risk coverage.

Product Coverage

If your tests cover UI, database, and backend functionality, you’re well on your way to strong product coverage.

Test execution is the process by which these coverage metrics are achieved and validated, ensuring that all testing requirements are addressed.
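The percentages in the examples above all come from the same simple ratio: items tested divided by items that exist. A small sketch of that arithmetic, where the helper name `coverage_percent` is an assumption made for this example:

```python
def coverage_percent(covered: int, total: int) -> float:
    """Return covered/total as a percentage."""
    if total <= 0:
        raise ValueError("total must be a positive number of items")
    if not 0 <= covered <= total:
        raise ValueError("covered must be between 0 and total")
    return 100.0 * covered / total


print(coverage_percent(45, 50))  # function coverage example: 90.0
print(coverage_percent(27, 30))  # requirement coverage example: 90.0
print(coverage_percent(10, 10))  # risk coverage example: 100.0
```

The same ratio applies whether the items being counted are functions, conditions, requirements, or risks; only the definition of "item" changes.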

Test Coverage vs Code Coverage: What’s the Difference?

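Code coverage focuses on which lines of code your tests execute. Test coverage looks more broadly at how well your tests address functionality, requirements, and overall system behavior. Both are valuable, and they work best when used together. Test coverage and code coverage are integral parts of the overall software testing process, helping teams ensure that both requirements and application code are thoroughly evaluated for quality and risk.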

What Test Coverage Actually Reveals (and What It Doesn’t)

Coverage metrics help teams track testing progress and spot weak spots in their test suites. But they aren’t perfect. They can tell you how much code is touched, but not whether that code is meaningfully tested. And they rarely capture non-functional requirements like performance or security.

This is why modern teams increasingly look at test coverage reports alongside failed tests, skipped tests, and patterns in test configuration. Used together, these indicators help identify gaps—places where test cases don’t align with business-critical behavior. Choosing the right coverage technique can help address those gaps by ensuring all relevant aspects of the software are systematically tested.

Remember: coverage doesn’t ensure quality. It only shows where your tests go—not how well they work once they get there. Comprehensive coverage involves testing different aspects of the application, such as compatibility across platforms, devices, and browsers.

Increasing Coverage Without Overdoing It

So how do you increase test coverage without creating bloated test suites or slowing down your pipeline?

One answer lies in smart test suite augmentation. This involves adding tests where needed (often targeting compatibility coverage or edge-case logic) without duplicating what’s already covered. Pair it with test suite minimization, which strips out redundant or low-value test scripts to make the suite lighter, and you get leaner, faster cycles that still hit the right areas.
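One possible way to approach test suite minimization is a greedy selection: keep picking the test that covers the most items nobody has covered yet. The sketch below assumes you already have a mapping from test names to the items each test covers. The test names and covered items are made up for illustration, and a greedy pick is a heuristic, so the result is not guaranteed to be the smallest possible suite.

```python
def minimize_suite(coverage: dict[str, set[str]]) -> list[str]:
    """Greedily select tests until everything covered by the full suite is covered."""
    remaining = set().union(*coverage.values())
    selected = []
    while remaining:
        # Sorting the names makes tie-breaking deterministic (alphabetical).
        best = max(sorted(coverage), key=lambda name: len(coverage[name] & remaining))
        selected.append(best)
        remaining -= coverage[best]
    return selected


# Hypothetical data: which parts of the app each test exercises.
suite = {
    "test_login": {"auth.login", "auth.session"},
    "test_login_again": {"auth.login"},
    "test_checkout": {"cart.total", "cart.pay", "auth.session"},
    "test_cart_total": {"cart.total"},
}

print(minimize_suite(suite))  # ['test_checkout', 'test_login']
```

The tests that were dropped are candidates for review, not automatic deletion. A test can cover the same code as another and still check a different behavior.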

Test engineers often revisit their test scenarios, compare them to real user scenarios, and refine what gets tested based on risk, not just volume. Tools can help, but human judgment plays a big role in deciding where additional testing adds the most value.

Exploratory testing is also crucial, as it helps uncover additional areas for coverage that scripted tests might miss.

Test Coverage Metrics

Metrics help you measure and make decisions. When it comes to test coverage, here are the key ones to know:

- Line Coverage: Measures how many lines of code were executed during testing.

- Branch Coverage: Tracks whether each branch of conditional logic (like if-else) ran.

- Function Coverage: Counts how many functions or methods were called by tests.

- Condition Coverage: Measures whether each condition within a statement has been evaluated both to true and false.

- Path Coverage: Looks at the number of unique paths through the code that have been tested.

- Requirement Coverage: Tracks how many business or functional requirements are covered by tests.

- Risk Coverage: Shows how many high-risk areas have been tested compared to what was identified.

Automation test coverage is also tracked as part of these overall coverage metrics, especially in automated testing environments. It helps teams measure how much of the codebase is exercised by automated tests, supporting early defect detection and efficient test management.

Tracking these helps you uncover gaps, focus your efforts, and back up quality with data.
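Requirement coverage is usually tracked with some form of traceability mapping between tests and requirements. Here is a minimal sketch of that idea. The requirement IDs, test names, and the `requirement_coverage` helper are all hypothetical.

```python
def requirement_coverage(
    requirements: list[str], tests: dict[str, set[str]]
) -> tuple[float, list[str]]:
    """Return the percentage of requirements hit by any test, plus the untested ones."""
    if not requirements:
        raise ValueError("at least one requirement is needed")
    covered = set().union(*tests.values())
    uncovered = [req for req in requirements if req not in covered]
    percent = 100.0 * (len(requirements) - len(uncovered)) / len(requirements)
    return percent, uncovered


# Hypothetical traceability data: each test lists the requirements it verifies.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
tests = {
    "test_signup": {"REQ-1"},
    "test_login": {"REQ-1", "REQ-2"},
    "test_export": {"REQ-4"},
}

percent, uncovered = requirement_coverage(requirements, tests)
print(percent)    # 75.0
print(uncovered)  # ['REQ-3']
```

The list of uncovered requirements is often more useful than the percentage itself, because it tells you exactly where the gap is.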

Test Coverage Techniques

You don’t need to cover everything manually. Here are some proven techniques that help improve test coverage:

- Boundary Value Analysis: Tests values at or near the edges of input ranges, where bugs are most likely.

- Equivalence Partitioning: Groups inputs that should be treated the same to reduce redundant tests.

- Decision Table Testing: Helps handle multiple combinations of conditions.

- State Transition Testing: Useful when behavior changes based on different states (like logged in vs logged out).

- Pairwise Testing: Ensures every pair of input values appears in at least one test, instead of testing every full combination of inputs.

- Risk-Based Testing: Prioritizes tests around features or functions with the highest risk.

- Use Case Testing: Focuses on real-world user journeys to validate functionality.

Additionally, white box testing techniques use knowledge of the source code to design tests, for example by aiming to execute every statement, which helps identify defects and improve software quality.

Applying these techniques doesn’t just boost coverage—it helps create smarter, more effective tests.
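As a rough illustration of boundary value analysis and equivalence partitioning, consider a hypothetical rule that accepts ages from 18 to 65 inclusive. Both `is_valid_age` and `boundary_values` are assumptions made up for this sketch.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical rule under test: ages 18 to 65 inclusive are accepted."""
    return 18 <= age <= 65


def boundary_values(low: int, high: int) -> list[int]:
    """Values just below, on, and just above each edge of the inclusive range."""
    if low > high:
        raise ValueError("low must not exceed high")
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


# Boundary value analysis: six targeted inputs around the two edges.
print(boundary_values(18, 65))  # [17, 18, 19, 64, 65, 66]
print([is_valid_age(age) for age in boundary_values(18, 65)])
# [False, True, True, True, True, False]

# Equivalence partitioning: one representative per group is enough,
# because every value in a group should be treated the same way.
partitions = {"below range": 10, "inside range": 40, "above range": 80}
print({name: is_valid_age(age) for name, age in partitions.items()})
```

Nine inputs in total stand in for the whole range of possible ages, which is the point of both techniques: fewer tests, chosen where they are most likely to find a defect.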

Why Test Coverage Is Worth Tracking

Good test coverage is commonly taken to mean covering at least 80% of your codebase with tests and ensuring that all critical features are tested. It matters because it reduces the risk of undetected bugs and validates that requirements are met. Tracking coverage helps your team:

- Spot Untested Code: Quickly identify what’s missing from your tests.

- Improve Code Quality: Better coverage often leads to fewer bugs.

- Catch Bugs Earlier: Especially in CI/CD pipelines.

- Validate Requirements: Helps ensure you’re building what was asked for.

- Prioritize Risks: Focus testing where failure would hurt the most.

- Simplify Refactoring: Confidence that nothing will break.

- Make Testing More Effective: Use metrics to guide improvements.

But Don’t Chase 100% Coverage Blindly

Let’s be clear: 100% test coverage doesn’t mean your software is bug-free. It just means every part of your app ran during tests. It says nothing about the quality or relevance of those tests.

Trying to hit 100% can sometimes backfire. It may lead to bloated test suites or shallow tests that just "check a box."

Instead, focus on meaningful coverage—tests that simulate how people really use your app, especially in high-impact areas.
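Here is a small sketch of how full coverage and a real bug can coexist. The function `is_adult` and the rule "18 or older counts as an adult" are assumptions invented for this example, and the bug is deliberate.

```python
def is_adult(age: int) -> bool:
    # Deliberate bug for illustration: the assumed rule is "18 or older",
    # so the comparison should be >= rather than >.
    return age > 18


# Shallow check: it executes 100% of is_adult and passes,
# so a coverage report would show nothing wrong.
assert is_adult(30) is True

# The boundary case exposes the defect that the coverage number hid.
print(is_adult(18))  # False, although the assumed rule says it should be True
```

The coverage figure is identical before and after adding the boundary check. What changes is whether the tests would notice the defect.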

How to Improve Your Test Coverage (Without Burning Out Your Team)

Here are proven ways to improve test coverage without adding unnecessary work:

- Start with a Coverage Report: Use tools to find untested parts of your code.

- Automate Regression Tests: Automated tests save time and increase consistency.

- Prioritize High-Risk Areas: Don’t try to test everything equally. Focus on what matters most.

- Write Better Test Cases: Think about edge cases, boundary values, and real usage.

- Use Pairwise Testing: It helps cover combinations of inputs with fewer tests.

- Review Tests Regularly: Keep them aligned with code changes.

- Test Across Devices and Browsers: This is key for web and mobile apps. Incorporate browser testing to ensure consistent user experiences across different browsers, and use compatibility testing to verify your software works correctly on various devices, operating systems, and environments.

- Leverage Code Reviews: Encourage devs to write tests alongside features.

- Train Your Team: Help them understand what good coverage looks like.
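To show what pairwise testing buys you, the sketch below checks whether a small set of test cases covers every pair of input values. The parameters (browser, OS, role), their values, and the helper `uncovered_pairs` are hypothetical. The four cases were picked by hand for this example rather than generated by a tool.

```python
from itertools import combinations, product


def uncovered_pairs(
    parameters: dict[str, list[str]], cases: list[dict[str, str]]
) -> set[tuple[tuple[str, str], tuple[str, str]]]:
    """Return every pair of (parameter, value) settings that no test case exercises."""
    required = set()
    for (p1, values1), (p2, values2) in combinations(parameters.items(), 2):
        for v1, v2 in product(values1, values2):
            required.add(((p1, v1), (p2, v2)))
    for case in cases:
        for p1, p2 in combinations(parameters, 2):
            required.discard(((p1, case[p1]), (p2, case[p2])))
    return required


parameters = {
    "browser": ["chrome", "firefox"],
    "os": ["windows", "macos"],
    "role": ["admin", "guest"],
}

# Four cases instead of the 2 * 2 * 2 = 8 full combinations.
cases = [
    {"browser": "chrome", "os": "windows", "role": "admin"},
    {"browser": "chrome", "os": "macos", "role": "guest"},
    {"browser": "firefox", "os": "windows", "role": "guest"},
    {"browser": "firefox", "os": "macos", "role": "admin"},
]

print(len(uncovered_pairs(parameters, cases)))      # 0
print(len(uncovered_pairs(parameters, cases[:3])))  # 3
```

The savings grow quickly as parameters are added, since the number of full combinations multiplies with every new parameter while a pairwise set grows much more slowly.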

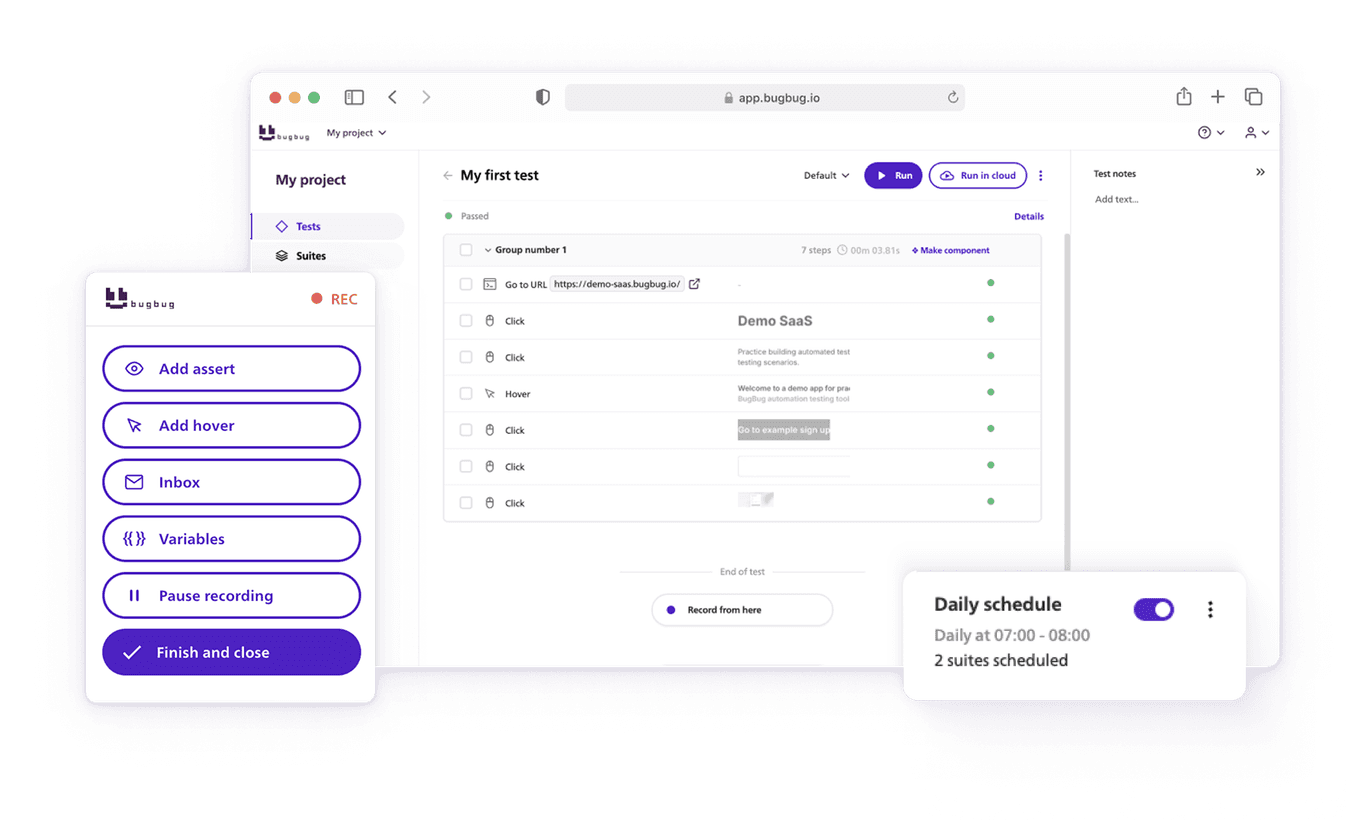

How Automation Tools Like BugBug Help

Test automation is a game-changer when it comes to scaling coverage. Tools like BugBug let you record and run browser tests without writing any code. This makes it easier for non-technical team members to contribute.

Automated tests are especially valuable in CI/CD workflows. They ensure your app works as expected after every change. And because they’re repeatable, they help maintain high coverage over time.

A Quick Case Study: GDi and BugBug

GDi had a small team but needed to test a large, complex app. Manual testing wasn’t cutting it.

By adopting BugBug, they were able to:

- Automate tests without coding

- Run tests remotely

- Reduce time spent on regression testing

The result? Their test team doubled productivity and no longer needed help from the R&D team for every release.


Popular Test Coverage Tools

Want to see how much of your app is being tested? Here are a few popular tools:

- JaCoCo (Java)

- Istanbul (JavaScript)

- Cobertura (Java)

- BugBug (no-code browser test automation)

Depending on the tool, they help you track:

- Line coverage

- Branch coverage

- Function coverage

- Cross-browser test coverage

They generate detailed reports that show what’s been tested—and what’s still at risk.

Beyond Code Coverage: Looking at the Full Picture

At some point, every team realizes that chasing 100% coverage is both unrealistic and, often, unproductive. What matters more is understanding what’s not covered—and why.

Are there areas of network testing, hardware testing, or mobile testing that have been overlooked? Do your test cases cover how the app behaves under load or in edge configurations? Are tests running on the full range of operating systems your users rely on?

The goal isn’t to test everything. It’s to test what matters—and do so intelligently. When testing teams regularly review what’s being tested and how that aligns with evolving risk, they create space for smoother testing cycles and smarter decisions. A well-defined testing process helps teams systematically align coverage with evolving risks and priorities, ensuring that test coverage remains relevant and effective.

Final Thoughts: What Test Coverage Teaches Us

Ask ten testing teams how they measure test coverage, and you’ll probably get ten different answers. Some see it purely as a metric—how much code is being tested. Others treat it as a broader indicator of how well the software testing process addresses risk, functionality, and reliability. The truth sits somewhere in the middle.

In practice, test coverage tells you how much of your application code, test scenarios, and real-world behavior your tests actually examine. But interpreting that data requires more than just reading a percentage on a report. Without context, high coverage might look impressive—but offer little insight into actual software quality.

Better test coverage doesn’t mean more tests. It means better test code, more focused test configuration, and smarter decisions around what gets automated and what still needs human eyes.

Keep asking: What are we missing? What are we assuming? And how can we use our coverage data—not just to check a box—but to guide better testing?

Happy (automated) testing!