A QA strategy is more than a roadmap for testing; it is an integral part of ensuring that your software product meets user expectations for quality and performance. The right QA strategy ensures that bugs are identified and resolved early, test automation is employed effectively, and the entire development team works in sync to deliver the best possible user experience.

But how do you create an effective QA strategy? Let's dive into the essentials of QA strategy building, explore why it’s crucial, and discuss common challenges, followed by 10 tips to ensure your QA process is on the right track.

In this article:

- What is a QA Strategy?

- Why is a QA Strategy Important?

- Biggest Challenges in Building a QA Strategy

- 10 Tips for Building a Successful QA Strategy

  - Define Clear Goals for Quality Assurance

  - Create a Comprehensive Test Plan

  - Incorporate Test Automation Wisely

  - Leverage Agile Methodologies

  - Prioritize Testing Based on Risk

  - Use a Test Management Tool

  - Document Everything

  - Foster Collaboration Between Developers and Testers

  - Integrate Performance and Exploratory Testing

  - Continuously Improve Your QA Strategy

- Conclusion

What is a QA Strategy?

A QA strategy is a document that outlines the approach, resources, tools, and techniques your QA team will use to ensure your software meets defined quality standards. It covers all aspects of testing, including test automation, manual testing, performance testing, and exploratory testing. It helps define the scope of the test cases, the types of testing, and the roles of team members involved.

Why is a QA Strategy Important?

A QA strategy ensures consistency, defines clear goals, and establishes procedures to minimize risks in the software development process. Without it, your team might overlook critical software quality issues, leading to:

- Increased bug count and regression failures

- Poor user experience

- Delayed delivery of the product

- Higher maintenance costs

A robust QA strategy allows your team to prevent defects, optimize test coverage, and ensure smooth integration of new features into the system.

Biggest Challenges in Building a QA Strategy

Developing a QA strategy from scratch is a complex undertaking that requires attention to detail, resource management, and a deep understanding of both testing methods and the development lifecycle. Building a strong foundation for automated testing, aligning the testing approach with quality goals, and ensuring a smooth integration testing process are essential steps in maintaining a robust QA strategy. Below are some of the key challenges you may face while developing an effective QA strategy and how to overcome them.

Balancing Manual and Automated Testing

One of the primary challenges in creating a successful quality assurance strategy is determining the right balance between manual testing and automated testing. While automation can significantly reduce repetitive tasks, manual testing is still essential for evaluating complex user interactions and exploratory testing. Over-relying on one at the expense of the other can lead to gaps in test coverage.

A good QA engineer knows when to implement automation and when to rely on manual efforts. This balance is crucial for maintaining a solid quality process that adapts to the software’s complexity and the testing needs of the project.

Creating the Right Testing Environment

Building a proper testing environment is another significant challenge. This environment should mimic real-world usage scenarios, allowing for effective end-to-end testing and ensuring the product quality meets user expectations. Whether you're focusing on API testing, integration testing, or performance testing, creating a reliable and scalable environment can make or break your QA efforts.

Many teams struggle with setting up and managing environments that meet the specific demands of different testing phases, including functional, regression, and performance tests. Using the right testing tools and testing services can help overcome these hurdles by simplifying setup and improving test automation efficiency.

Selecting the Right Testing Tools

Choosing the appropriate testing tools that align with your team’s expertise and the product’s technical requirements is vital for implementing a comprehensive quality assurance process. The tools should support various testing methods, including automated testing, API testing, and regression testing. Ensuring compatibility with your development lifecycle and enabling smooth integration with other systems is key to an efficient workflow.

Teams may also face challenges when it comes to investing in top-tier tools. However, underestimating the value of these tools may lead to technical debt, where quality is sacrificed due to poor testing infrastructure. To create a successful QA strategy, it's essential to invest in the right testing tools and solutions that align with your quality assurance practices.

Defining Clear QA Goals

Establishing clear QA goals is often overlooked, but it is one of the most critical components of a strong quality strategy. Without well-defined objectives, it becomes difficult for QA specialists to measure the effectiveness of their testing activities. Setting these goals early and ensuring they are aligned with overall quality initiatives and the development lifecycle is essential for driving QA efforts in the right direction.

A QA strategy document that includes your quality goals, testing phases, and testing approach will serve as a guide to keep the entire team aligned. This alignment will help foster a culture of quality across the organization, ensuring that everyone is working toward the same product quality objectives.

Managing the Agile Environment

Modern software teams often follow agile principles, which prioritize flexibility and rapid development cycles. While agile has many advantages, it presents unique challenges for QA teams, especially in keeping test automation and regression testing up to date during continuous integration and delivery.

In an agile environment, testing is not a phase that happens after development; it is embedded throughout the development lifecycle. This shift means testing must be continuous, and QA engineers need to collaborate closely with developers so that testing happens alongside each change rather than after it.

Ensuring Comprehensive Test Coverage

Achieving broad test coverage is one of the most challenging aspects of a QA strategy. Every use case and system integration needs to be tested, yet resources are often limited. Ensuring full coverage for functional testing, regression testing, exploratory testing, and performance testing requires meticulous planning.

The challenge here is not just about testing every scenario, but also about ensuring that you test the right scenarios. This means conducting thorough risk assessments to determine which parts of the software are most likely to fail and prioritizing them accordingly in the QA process.

10 Tips for Building a Successful QA Strategy

Define Clear Goals for Quality Assurance

Start by documenting your quality objectives. Understand what software quality assurance means for your product. Define how you'll measure the success of your QA process: is it the number of bugs fixed, test automation adoption, or user satisfaction?
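To make such goals measurable, it can help to express them as simple calculations. The sketch below is only an illustration: the `ReleaseStats` fields, the two metrics, and the sample numbers are assumptions chosen for the example, not a prescribed set of QA metrics.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Hypothetical per-release numbers a team might collect."""
    bugs_found_in_testing: int
    bugs_found_in_production: int
    automated_test_cases: int
    total_test_cases: int


def defect_escape_rate(stats: ReleaseStats) -> float:
    """Share of all known bugs that reached production (lower is better)."""
    total = stats.bugs_found_in_testing + stats.bugs_found_in_production
    return stats.bugs_found_in_production / total if total else 0.0


def automation_ratio(stats: ReleaseStats) -> float:
    """Share of test cases that run without manual effort."""
    if stats.total_test_cases == 0:
        return 0.0
    return stats.automated_test_cases / stats.total_test_cases


# Made-up sample data for one release.
stats = ReleaseStats(
    bugs_found_in_testing=45,
    bugs_found_in_production=5,
    automated_test_cases=120,
    total_test_cases=200,
)
print(f"Defect escape rate: {defect_escape_rate(stats):.0%}")
print(f"Automation ratio: {automation_ratio(stats):.0%}")
```

Tracking a small number of such figures release over release gives the team a concrete way to tell whether the QA process is moving toward its goals.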

Create a Comprehensive Test Plan

Your test plan should outline your strategy, methodologies, types of testing, timelines, and resources. The plan must include a mix of manual testing, test automation, exploratory testing, and performance testing to ensure thorough test coverage.

Incorporate Test Automation Wisely

Automating repetitive tasks like regression testing and functional checks can save significant time. Identify the areas in your test strategy where automation will bring the most ROI, and use the appropriate tools to streamline these processes.
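One rough way to judge where automation pays off is a break-even estimate: how many runs it takes before the time saved per run covers the time spent building the automated test. The sketch below assumes a deliberately simple cost model; the function name, the candidate list, and all hour figures are hypothetical.

```python
import math
from typing import Optional


def break_even_runs(build_hours: float,
                    upkeep_hours_per_run: float,
                    manual_hours_per_run: float) -> Optional[int]:
    """Runs needed before automation costs less than manual execution.

    Returns None when automation saves nothing per run and so never pays off.
    """
    saving_per_run = manual_hours_per_run - upkeep_hours_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_hours / saving_per_run)


# Hypothetical candidates: (name, build hours, upkeep per run, manual per run)
candidates = [
    ("regression suite", 16.0, 0.25, 2.0),
    ("one-off data migration check", 8.0, 0.5, 0.5),
]

for name, build, upkeep, manual in candidates:
    runs = break_even_runs(build, upkeep, manual)
    verdict = f"pays off after {runs} runs" if runs is not None else "never pays off"
    print(f"{name}: {verdict}")
```

Tests that run on every build reach their break-even point quickly, which is why regression suites are usually the first candidates for automation.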

Leverage Agile Methodologies

Incorporate agile practices in your QA process to ensure continuous testing throughout the development process. Agile encourages developers and testers to collaborate closely, enabling early detection of defects and faster feedback loops.

Prioritize Testing Based on Risk

When building your test strategy, focus on areas that pose the highest risk to software quality and user experience. Concentrate on critical features that directly impact users, and ensure you have a solid regression and functional testing plan in place.

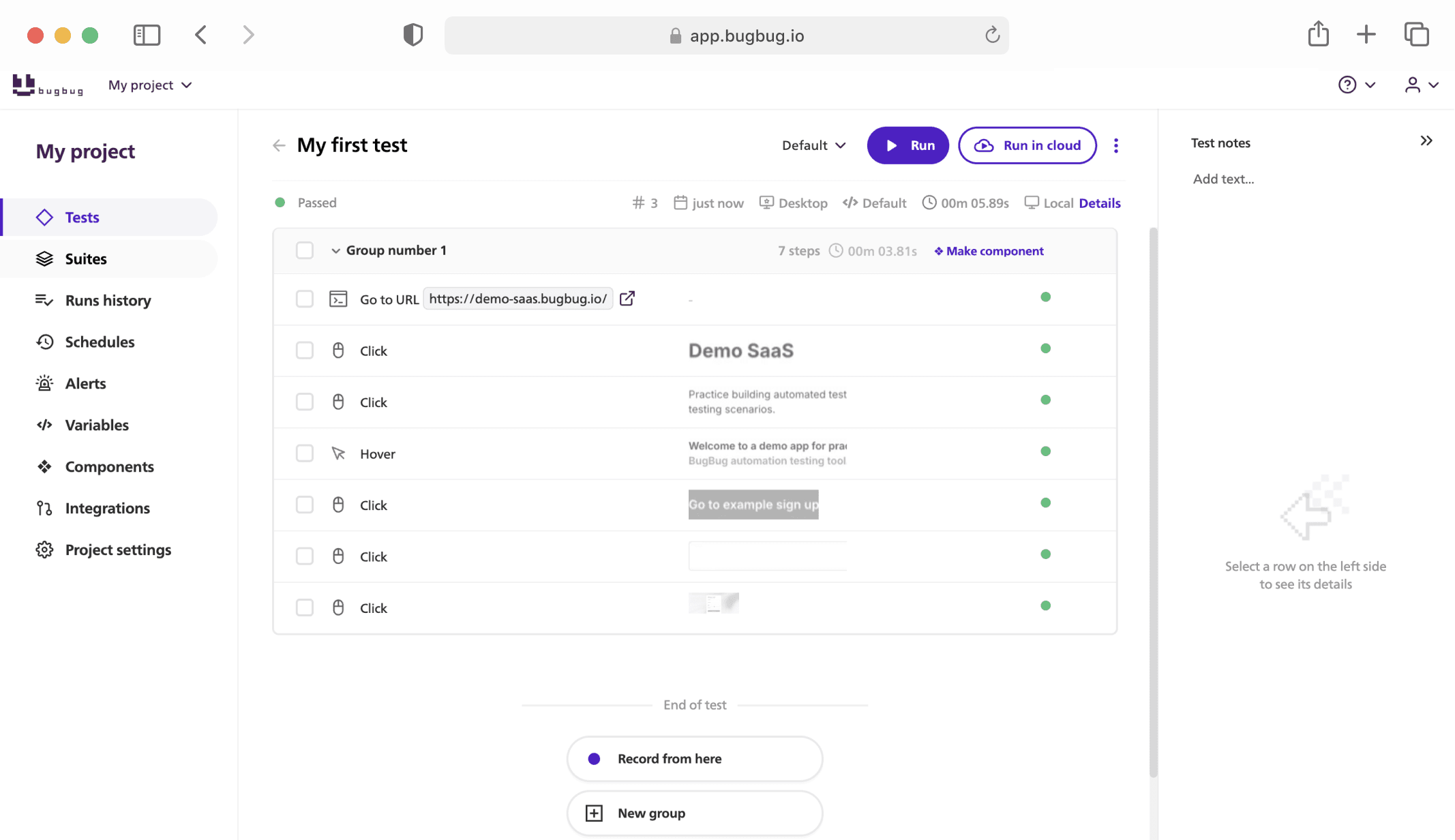
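Risk-based prioritization is often sketched as a score of likelihood times impact. The example below is a minimal illustration of that idea; the 1 to 5 scales, the `Feature` class, and the sample features are assumptions, and real teams may weigh additional factors.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Feature:
    """A testable area with hypothetical 1-5 risk ratings."""
    name: str
    likelihood: int  # how likely the area is to break (1 = rarely, 5 = often)
    impact: int      # how badly users are affected if it breaks (1 = minor, 5 = severe)

    @property
    def risk_score(self) -> int:
        return self.likelihood * self.impact


def prioritize(features: List[Feature]) -> List[Feature]:
    """Return features ordered from highest to lowest risk score."""
    return sorted(features, key=lambda f: f.risk_score, reverse=True)


# Made-up example ratings.
features = [
    Feature("profile avatar upload", likelihood=3, impact=2),
    Feature("checkout", likelihood=4, impact=5),
    Feature("login", likelihood=2, impact=5),
]

for feature in prioritize(features):
    print(f"{feature.risk_score:>2}  {feature.name}")
```

The ordered list can then drive which areas get regression and functional tests first when time is limited.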

Use a Test Management Tool

A good test management tool will help you track and organize your QA test efforts, manage test cases, and document results effectively. It’s a must-have for keeping your QA strategy on track and ensuring that nothing falls through the cracks.

Document Everything

Documentation is critical to an effective QA strategy. It serves as the blueprint for your test procedures, tools, frameworks, and outcomes. Comprehensive documentation helps the team stay aligned on goals and allows new team members to onboard quickly.

Foster Collaboration Between Developers and Testers

QA should not be an isolated activity. Encourage developers to be involved in the testing process, whether by writing unit tests or contributing to test automation. This collaboration helps catch bugs early and improves overall software quality.
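As an illustration of developers contributing unit tests, here is a small sketch using Python's built-in `unittest` module. The `apply_discount` function and its rules are hypothetical and stand in for any piece of business logic a developer might own.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


# Run the tests programmatically so the script does not exit early.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Tests like these run in seconds and can be executed on every commit, so defects in core logic surface before the change reaches the QA team.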

Integrate Performance and Exploratory Testing

Don’t limit yourself to functional testing alone. Include performance testing to ensure your software can handle load and stress. At the same time, engage in exploratory testing to discover edge cases that automated scripts might miss.
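A very small performance check can be written with the standard library alone. The sketch below times a stand-in operation and compares an estimated 95th-percentile latency against a budget; `sample_operation` and the 0.5 second budget are assumptions for illustration, and real load or stress testing would normally rely on a dedicated tool.

```python
import statistics
import time
from typing import Callable, List


def measure_latencies(operation: Callable[[], None], runs: int = 50) -> List[float]:
    """Call the operation repeatedly and record each duration in seconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - start)
    return latencies


def p95(latencies: List[float]) -> float:
    """Estimate the 95th percentile: the last of the 19 cut points for n=20."""
    return statistics.quantiles(latencies, n=20)[-1]


def sample_operation() -> None:
    """Stand-in for the code under test, such as a request handler."""
    sum(i * i for i in range(10_000))


BUDGET_SECONDS = 0.5  # hypothetical latency budget

latencies = measure_latencies(sample_operation)
observed = p95(latencies)
print(f"p95 latency: {observed * 1000:.2f} ms")
print("within budget" if observed <= BUDGET_SECONDS else "over budget")
```

Checking a percentile rather than the average keeps occasional slow runs visible, which is usually what users notice.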

Continuously Improve Your QA Strategy

As your software development progresses, continually revisit and refine your QA strategy. Use feedback from past projects, adjust to changes in technology or team structure, and ensure your strategy evolves to meet the needs of the business and users.

Conclusion

Building a strong QA strategy is essential for delivering high-quality software and ensuring user satisfaction. To achieve this, your strategy must encompass every aspect of testing, from manual and automated testing to performance and integration tests. Testing is crucial at every phase of the development process, and creating a QA and test framework that is robust, flexible, and scalable is the cornerstone of success.

An Effective Quality Assurance Approach

An effective quality assurance approach requires choosing the right tools, defining clear objectives, and ensuring your team has the resources and expertise to execute. The strategy requires continuous improvement, where you consistently refine your processes, tools, and overall QA efforts. Investing in quality assurance is not just about testing your software but also about creating a culture of quality across the entire organization.

To implement your QA strategy effectively, focus on building a strong QA team, fostering collaboration, and ensuring that your strategy covers all necessary testing phases. The strategy is key to meeting your quality goals and delivering an exceptional user experience. Remember, effective testing is at the heart of ensuring a high-quality product, and a well-crafted QA strategy is the foundation for achieving long-term success.

Happy (automated) testing!