
- 🎯 TL;DR — When To Run Regression Tests?

- Why Timing Matters

- Quick Refresher: What Regression Testing Is (and Isn’t)

- Exactly When to Perform Regression Testing

- 1) After Every Feature or Code Change

- 2) Before Major Releases or Deployments

- 3) After Bug Fixes or Hotfixes

- 4) After Dependency or Runtime Updates

- 5) After Infrastructure / CI/CD / Environment Changes

- 6) After Database or Data Model Changes

- 7) After Integration or Third-Party Changes

- 8) After UX, Layout, or Content Updates

- 9) After Security or Compliance Updates

- 10) Around Operational or Seasonal Events

- 11) On a Regular Schedule (Continuous Regression)

- Quick Planner: Trigger → Suggested Suite

- Keeping Regression Efficient (and Sane)

- Automating Regression the Smart Way (with BugBug)

- Final Takeaway

🎯 TL;DR — When To Run Regression Tests?

✅ After new features, refactors, merges, or code changes

✅ Before releases, deployments, or migrations

✅ After bug fixes, hotfixes, or incident recoveries

✅ After dependency, framework, API, or environment updates

✅ After database, schema, or data-related changes

✅ After UI, content, localization, or accessibility updates

✅ After security, session, or permission model changes

✅ Before high-traffic events or seasonal releases

✅ On a regular (automated) schedule

Why Timing Matters

Regression testing is the safety net that ensures new work doesn't break what already works. In agile and CI/CD workflows, timing matters as much as coverage:

- Too early: you miss issues introduced later in the cycle.

- Too late: you scramble under release pressure.

Aim for event-driven runs plus a regular cadence so you catch regressions continuously, not just at the end.

Quick Refresher: What Regression Testing Is (and Isn’t)

- Regression testing verifies that existing functionality still works after changes.

- It’s different from retesting, which verifies that a specific fix resolved a specific defect.

- Think of it as an ongoing guardrail for critical flows (login, checkout, onboarding, billing, etc.).
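To make the difference concrete, here is a minimal sketch with plain `assert`-style tests (pytest would collect them as-is). The `apply_discount` helper and the bug it fixes are hypothetical. The retest pins the one reported defect; the regression test re-checks behavior that already worked before the fix.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical pricing helper returning the discounted price in cents.

    Assumed bug being fixed: discounts above 100% used to yield negative prices.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - (price_cents * percent) // 100


# Retest: confirms that the specific reported defect is resolved.
def test_retest_discount_over_100_rejected():
    try:
        apply_discount(1000, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a 150% discount")


# Regression: confirms that behavior which already worked still works after the fix.
def test_regression_existing_discounts_unchanged():
    assert apply_discount(1000, 0) == 1000
    assert apply_discount(1000, 10) == 900
    assert apply_discount(999, 50) == 500  # discount rounds down: 999 - 499
    assert apply_discount(1000, 100) == 0
```

The retest can be retired once the fix is verified; the regression test stays in the suite and runs on every later change.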


Exactly When to Perform Regression Testing

Use this as a practical checklist for your QA cycle.

1) After Every Feature or Code Change

- Merged feature branches or refactors

- UI/layout/CSS tweaks; component prop/state changes

- Data handling, async logic, error handling updates
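One way to scope a targeted run after a merge is to map changed file paths to feature tags and always add the smoke suite. The sketch below assumes a hypothetical path-pattern-to-tag mapping (`AREA_TAGS`); in practice the changed-file list could come from `git diff --name-only`.

```python
from fnmatch import fnmatch

# Hypothetical mapping from source path patterns to test tags.
AREA_TAGS = {
    "src/checkout/*": {"checkout", "payments"},
    "src/auth/*": {"login", "security"},
    "src/search/*": {"search"},
    "src/components/*": {"ui"},
}

# Core flows smoke-tested after every merge, whatever the diff contains.
SMOKE_TAGS = {"smoke"}


def tags_for_change(changed_files: list[str]) -> set[str]:
    """Return the test tags to run for a list of changed files."""
    tags = set(SMOKE_TAGS)
    for path in changed_files:
        for pattern, area_tags in AREA_TAGS.items():
            # fnmatch's "*" also matches "/", so nested paths are covered.
            if fnmatch(path, pattern):
                tags |= area_tags
    return tags


tags_for_change(["src/checkout/cart.ts", "README.md"])
# -> {"smoke", "checkout", "payments"}
```

Files that match no pattern only trigger smoke here; a more cautious setup might fall back to the critical suite instead.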

2) Before Major Releases or Deployments

- New app versions; sprint/milestone drops

- Infrastructure migrations

- Customer-facing changes (pricing, checkout, onboarding)

3) After Bug Fixes or Hotfixes

- Any functional fix (watch for nearby breakage)

- Production hotfixes, rollbacks, emergency patches

- Post-incident verification (confirm the recovery didn't break other flows)

4) After Dependency or Runtime Updates

- Framework/library upgrades (React, Angular, Django, etc.)

- API version bumps; SDK updates

- Browser/OS updates; Node/Java/Python runtime changes

- Container base image updates (e.g., OpenSSL, libc)

5) After Infrastructure / CI/CD / Environment Changes

- Pipeline/test runner changes; env variable tweaks

- Deployment/scaling changes; cache/CDN and load balancer (LB) rule updates

- Queue/broker/message bus configuration

- Monitoring/APM tooling additions

6) After Database or Data Model Changes

- Schema migrations; new indexes/constraints

- ETL/import/export jobs

- Search indexing or caching logic changes

- Locale/currency/date formatting rules

- New reporting/analytics queries
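For schema migrations, a simple regression pattern is to run an existing query before the migration and assert that its output is unchanged afterwards. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `orders` table, the migration, and the report query are all hypothetical.

```python
import sqlite3

# Hypothetical report that existed before the migration.
REPORT_QUERY = """
    SELECT customer, SUM(total_cents) AS revenue
    FROM orders
    GROUP BY customer
    ORDER BY customer
"""

# Hypothetical migration under test: a new column with a default, plus an index.
MIGRATION = """
    ALTER TABLE orders ADD COLUMN currency TEXT DEFAULT 'USD';
    CREATE INDEX idx_orders_customer ON orders (customer);
"""


def run_report_around_migration() -> tuple[list, list]:
    """Run the report before and after the migration on the same seed data."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total_cents INTEGER)"
        )
        conn.executemany(
            "INSERT INTO orders (customer, total_cents) VALUES (?, ?)",
            [("acme", 1200), ("acme", 800), ("globex", 500)],
        )
        before = conn.execute(REPORT_QUERY).fetchall()
        conn.executescript(MIGRATION)
        after = conn.execute(REPORT_QUERY).fetchall()
    finally:
        conn.close()
    return before, after


before, after = run_report_around_migration()
assert before == after, f"report changed after migration: {before} -> {after}"
```

Against a real system you would run the same before/after comparison on a staging copy of production data rather than on seed rows.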

7) After Integration or Third-Party Changes

- Payment gateways; auth/SSO providers

- API contracts/schemas

- Email/SMS/notification providers

- Rate limiting, firewall/WAF rules

- Tracking/analytics scripts
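When a third-party API or an internal contract changes, a lightweight contract check can catch missing or retyped fields before they break downstream flows. The sketch below is plain Python with a hypothetical `ORDER_CONTRACT` for an order payload; dedicated JSON Schema validators do this more thoroughly.

```python
import json

# Hypothetical contract for an order payload: field -> expected type after json.loads.
ORDER_CONTRACT: dict[str, type] = {
    "id": str,
    "status": str,
    "total_cents": int,
    "items": list,
}


def contract_violations(payload: str, contract: dict[str, type]) -> list[str]:
    """Return contract violations for a JSON payload (empty list means it conforms)."""
    data = json.loads(payload)
    problems = []
    for field, expected in contract.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        # Exact type check, so JSON true/false is not accepted as an int.
        elif type(data[field]) is not expected:
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(data[field]).__name__}"
            )
    return problems


bad = '{"id": "o-1", "status": "paid", "total_cents": "19.99"}'
contract_violations(bad, ORDER_CONTRACT)
# -> ["total_cents: expected int, got str", "missing field: items"]
```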

8) After UX, Layout, or Content Updates

- CSS/HTML/responsive breakpoints

- Accessibility/ARIA improvements

- SPA routing/navigation changes

- Localization (new languages/RTL)

- A/B tests or CMS template edits
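Localization updates are a common source of quiet regressions: a new language file ships with missing keys, or a translated string drops a placeholder. A minimal parity check might look like this, assuming translations are flat key-to-string dictionaries with `{name}`-style placeholders (both assumptions).

```python
import re

# Assumed placeholder syntax: {name}
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def locale_problems(base: dict[str, str], other: dict[str, str]) -> list[str]:
    """Compare a translation against the base locale and list regressions."""
    problems = []
    for key, base_text in base.items():
        if key not in other:
            problems.append(f"missing key: {key}")
            continue
        expected = set(PLACEHOLDER.findall(base_text))
        actual = set(PLACEHOLDER.findall(other[key]))
        if expected != actual:
            problems.append(
                f"{key}: expected placeholders {sorted(expected)}, got {sorted(actual)}"
            )
    # Keys that exist only in the translation usually point to stale or mistyped entries.
    for key in sorted(other.keys() - base.keys()):
        problems.append(f"unexpected key: {key}")
    return problems


en = {"greeting": "Hello, {name}!", "cart.empty": "Your cart is empty"}
de = {"greeting": "Hallo!", "cart.empty": "Ihr Warenkorb ist leer"}
locale_problems(en, de)  # -> ["greeting: expected placeholders ['name'], got []"]
```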

9) After Security or Compliance Updates

- Session/cookie policy changes (SameSite/Secure)

- Role/permission model changes

- Auth libraries, encryption, consent/privacy banners

- Security patches and dependency upgrades
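After session or cookie policy changes, a quick regression check can assert that the session cookie still carries the required attributes. This sketch parses a `Set-Cookie` header value with the standard library's `http.cookies.SimpleCookie` (the `samesite` attribute needs Python 3.8+); the cookie name `session` and the required policy are assumptions for illustration.

```python
from http.cookies import SimpleCookie

# Assumed policy for the session cookie; adjust to your own requirements.
ALLOWED_SAMESITE = {"lax", "strict"}


def session_cookie_problems(set_cookie_header: str, name: str = "session") -> list[str]:
    """Return policy violations for the named cookie in a Set-Cookie header value."""
    cookie = SimpleCookie()
    cookie.load(set_cookie_header)
    if name not in cookie:
        return [f"cookie {name!r} not set"]
    morsel = cookie[name]
    problems = []
    # Unset attributes default to "" in a Morsel; flags like Secure become True when present.
    if not morsel["secure"]:
        problems.append("missing Secure")
    if not morsel["httponly"]:
        problems.append("missing HttpOnly")
    if morsel["samesite"].lower() not in ALLOWED_SAMESITE:
        problems.append(f"SameSite={morsel['samesite'] or '(unset)'}")
    return problems
```

In an end-to-end suite, the header value would come from the login response captured by your test tool.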

10) Around Operational or Seasonal Events

- Post-incident/data restoration checks

- Before peak traffic (sales, launches)

- Daylight saving time/timezone transitions

- New tenant onboarding (multi-tenant)

- Before contract renewals/reporting cycles

11) On a Regular Schedule (Continuous Regression)

- Nightly/weekly runs to catch slow-moving/env issues

- Prevents last-minute “testing crunch” before release

Quick Planner: Trigger → Suggested Suite

| Trigger | What to Run |
|---|---|
| Feature branch merged | Targeted regression for impacted area + smoke of core flows |
| Pre-release / pre-deployment | Full regression of critical paths + cross-browser sanity |
| Hotfix in prod | Smoke + focused regression around impacted domain |
| Framework/API/dependency update | Broad regression of affected surfaces + integration/API checks |
| DB/schema change | Data-heavy flows, reporting, caching/indexing, i18n formatting |
| Security/session/permissions change | AuthN/AuthZ flows, session expiry, role-based access |
| UX/layout/content update | Responsive/UI journeys, forms/modals, a11y validations |
| High-traffic event imminent | Load-sensitive critical paths + payments and notifications |
| Weekly/nightly cadence | Rotating subsets + flaky test watchlist |
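If your test runner supports tags, the planner table can be encoded as data so CI picks the suite from the kind of change. The trigger and tag names below are hypothetical and loosely mirror the table rows; the function unions the tags for every trigger that applies.

```python
# Hypothetical encoding of the planner: trigger -> tags of tests to run.
SUITES_BY_TRIGGER = {
    "feature_merged": {"smoke", "impacted_area"},
    "pre_release": {"smoke", "critical", "cross_browser"},
    "hotfix": {"smoke", "impacted_area"},
    "dependency_update": {"smoke", "critical", "integration"},
    "schema_change": {"smoke", "data"},
    "security_change": {"smoke", "security"},
    "ux_change": {"smoke", "ui", "a11y"},
    "peak_event": {"smoke", "critical", "payments", "notifications"},
    "nightly": {"smoke", "rotating_subset", "flaky_watchlist"},
}

# Safe default for change types the planner doesn't know about yet.
FALLBACK = {"smoke", "critical"}


def tags_for_triggers(triggers: list[str]) -> set[str]:
    """Union the suites for every trigger that applies to a change."""
    tags: set[str] = set()
    for trigger in triggers:
        tags |= SUITES_BY_TRIGGER.get(trigger, FALLBACK)
    return tags


tags_for_triggers(["schema_change", "security_change"])
# -> {"smoke", "data", "security"}
```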

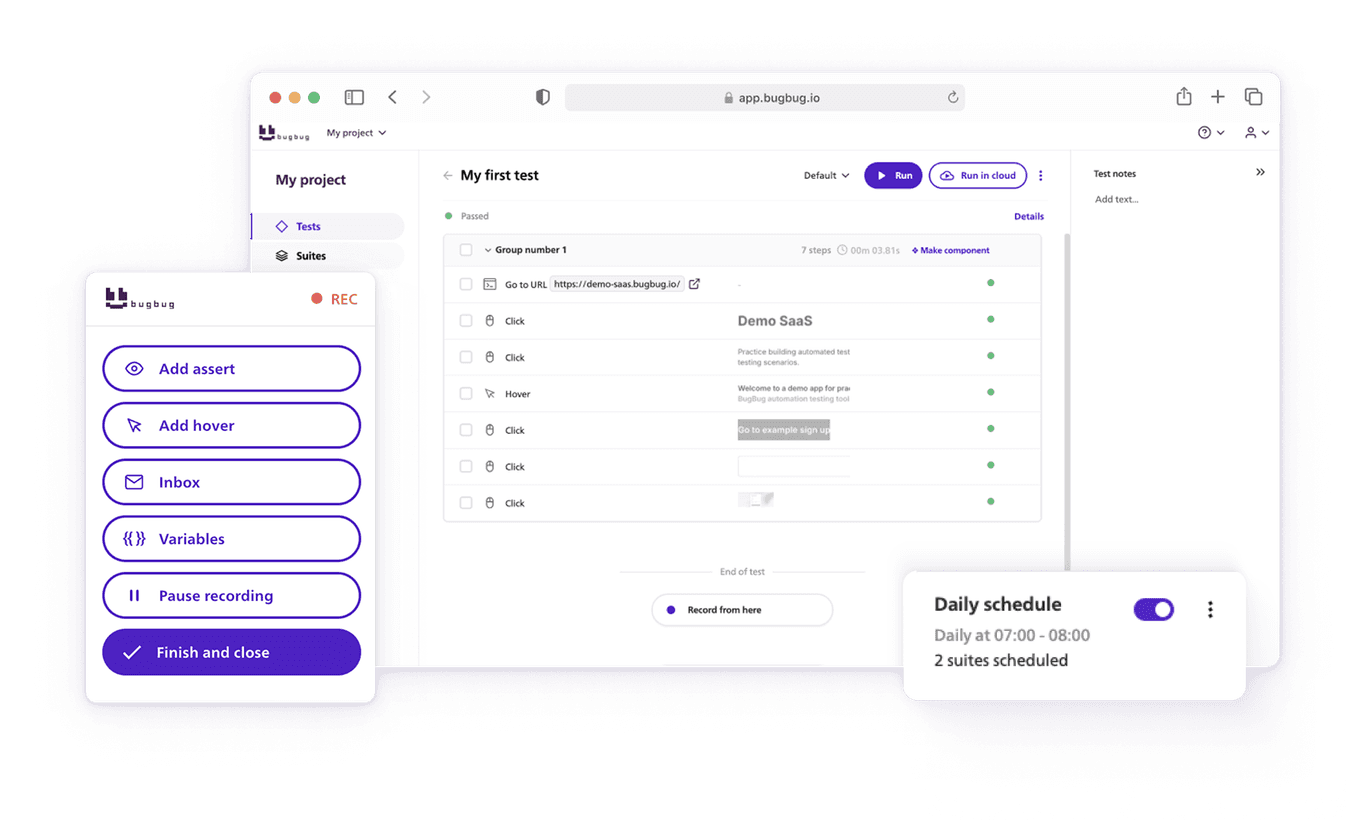

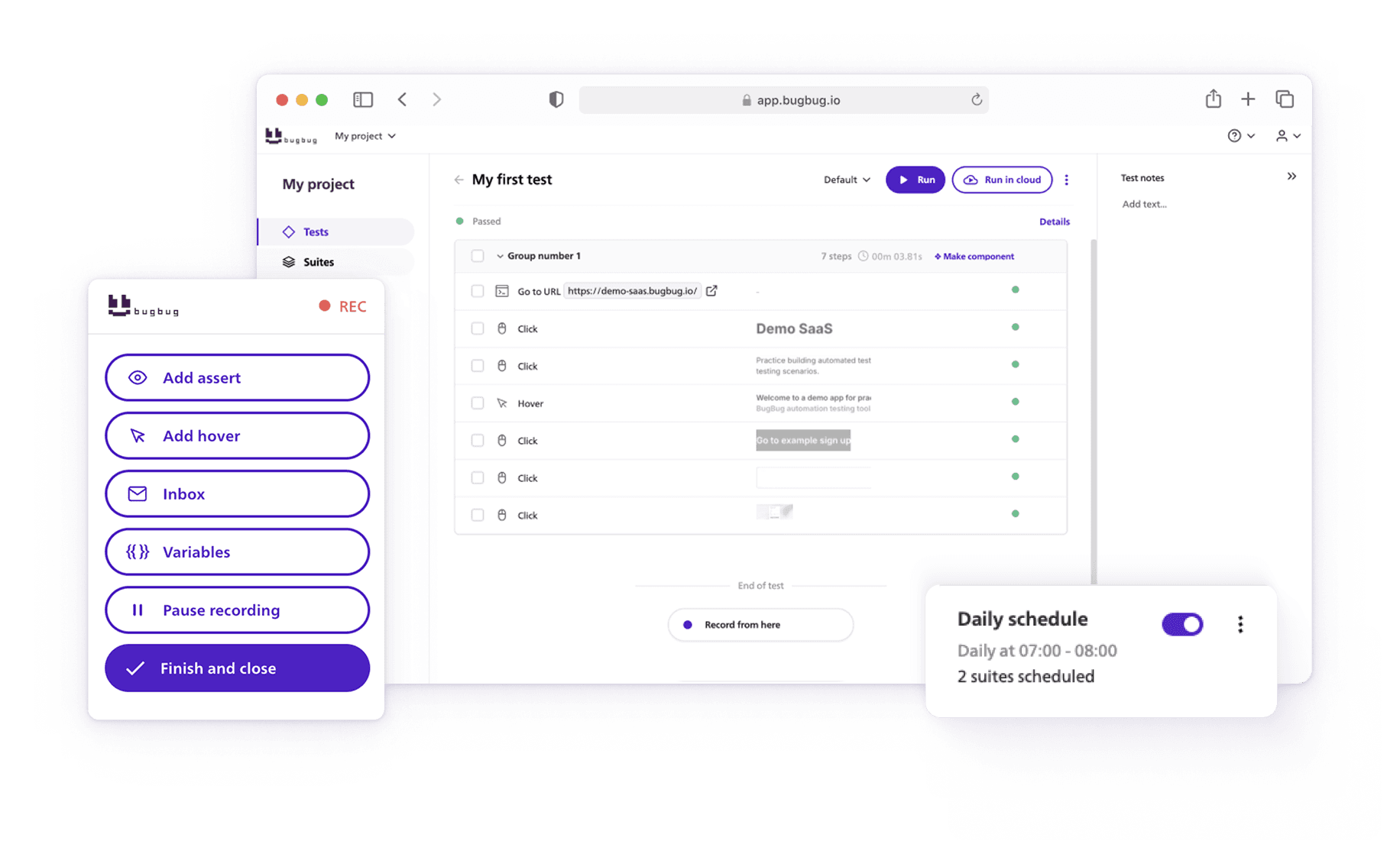

Scale test automation without code

Test easier than ever with BugBug test recorder. Faster than coding. Free forever.

Get started

Keeping Regression Efficient (and Sane)

- Scope smartly: Automate critical, stable flows first; expand by risk.

- Tier your suites: Smoke (minutes) → Critical regression (short) → Full regression (longer, scheduled).

- Fight flakiness: Prefer robust selectors, explicit waits, test-data isolation, and deterministic environments.

- Tag tests: By component/feature/risk so you can trigger just what’s needed per change.

- Measure & prune: Track failure rates and mean time to repair (MTTR); fix or delete chronic flakes; merge overlapping tests.
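Measuring flakiness can start very simply: a test that both passed and failed on the same commit is a flake candidate, because the code did not change between runs. A sketch over hypothetical `(test_name, commit, passed)` run records:

```python
from collections import defaultdict


def flaky_tests(runs: list[tuple[str, str, bool]]) -> list[str]:
    """Return tests that had both a pass and a fail on at least one commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _), seen in outcomes.items() if len(seen) == 2})


runs = [
    ("test_login", "a1", True),
    ("test_login", "a1", False),    # same commit, different result: flaky
    ("test_search", "a1", False),
    ("test_search", "b2", True),    # different commits: possibly a real fix
    ("test_checkout", "a1", True),
]
flaky_tests(runs)  # -> ["test_login"]
```

CI retries are a natural source of same-commit reruns, so this can usually be computed from existing run history.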

Automating Regression the Smart Way (with BugBug)

Manual regression is repetitive and slow. Codeless automation makes it continuous and maintainable:

- Record & edit tests visually in the browser.

- Schedule runs after deployments or on a cadence.

- Integrate with CI/CD or trigger via API/webhooks.

- Debug quickly with Edit & Rewind to stabilize suites.

Result: reliable, low-maintenance regression that keeps pace with your delivery speed.

Final Takeaway

Regression testing isn’t about testing more—it’s about testing at the right moments. Combine event-driven triggers with a regular automated cadence to ship confidently, week after week. Tools like BugBug help you get there without drowning in setup or upkeep.

Sample Tagging Strategy

- @smoke: login, signup, checkout, payments, dashboard load

- @critical: search, filters, add-to-cart, profile update, invoices

- @integration: webhooks, third-party APIs, auth/SSO

- @data: migrations, reports, exports, localization

- @security: roles/permissions, session/cookies
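In pytest, for example, tags like these are usually custom markers (`@pytest.mark.smoke`) selected with `pytest -m "smoke or critical"`. The dependency-free sketch below shows the same idea with a hypothetical `tagged` decorator and an in-memory registry; the test names are placeholders.

```python
from typing import Callable

# Hypothetical in-memory registry: test name -> tags.
REGISTRY: dict[str, set[str]] = {}


def tagged(*tags: str) -> Callable[[Callable], Callable]:
    """Decorator that records a test function's tags in REGISTRY."""
    def wrap(fn: Callable) -> Callable:
        REGISTRY[fn.__name__] = set(tags)
        return fn
    return wrap


@tagged("smoke")
def test_login(): ...


@tagged("smoke")
def test_checkout(): ...


@tagged("critical")
def test_search_filters(): ...


@tagged("integration")
def test_sso_callback(): ...


@tagged("security")
def test_role_permissions(): ...


def select(requested: set[str]) -> list[str]:
    """Names of tests carrying at least one of the requested tags."""
    return sorted(name for name, tags in REGISTRY.items() if tags & requested)


select({"smoke", "security"})
# -> ["test_checkout", "test_login", "test_role_permissions"]
```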

Happy (automated) testing