Software testing is no longer a final checkpoint; it is the backbone of product reliability in an era of continuous delivery and rapid change. In 2025, quality assurance faces new challenges: accelerated release cycles, AI-driven code generation, and complex distributed architectures. Testing strategies have to be designed deliberately around these challenges. The old approaches (static suites, manual regression passes, and disjointed test ownership) can’t keep up with this pace.

🎯 TL;DR - Effective Testing Strategies

- Testing is continuous, not a final step. In 2026, QA is integrated across all stages of development—testing happens before, during, and after deployment.

- Shift-left and shift-right are the new normal. Early-stage automation and real-time production monitoring ensure faster, safer releases.

- Automation is strategic, not absolute. Teams focus on automating stable, high-impact tests while preserving human insight for exploratory and usability testing.

- Data and risk drive modern QA. Metrics like defect detection rate, MTTD/MTTF, and automation stability replace outdated “coverage” vanity metrics.

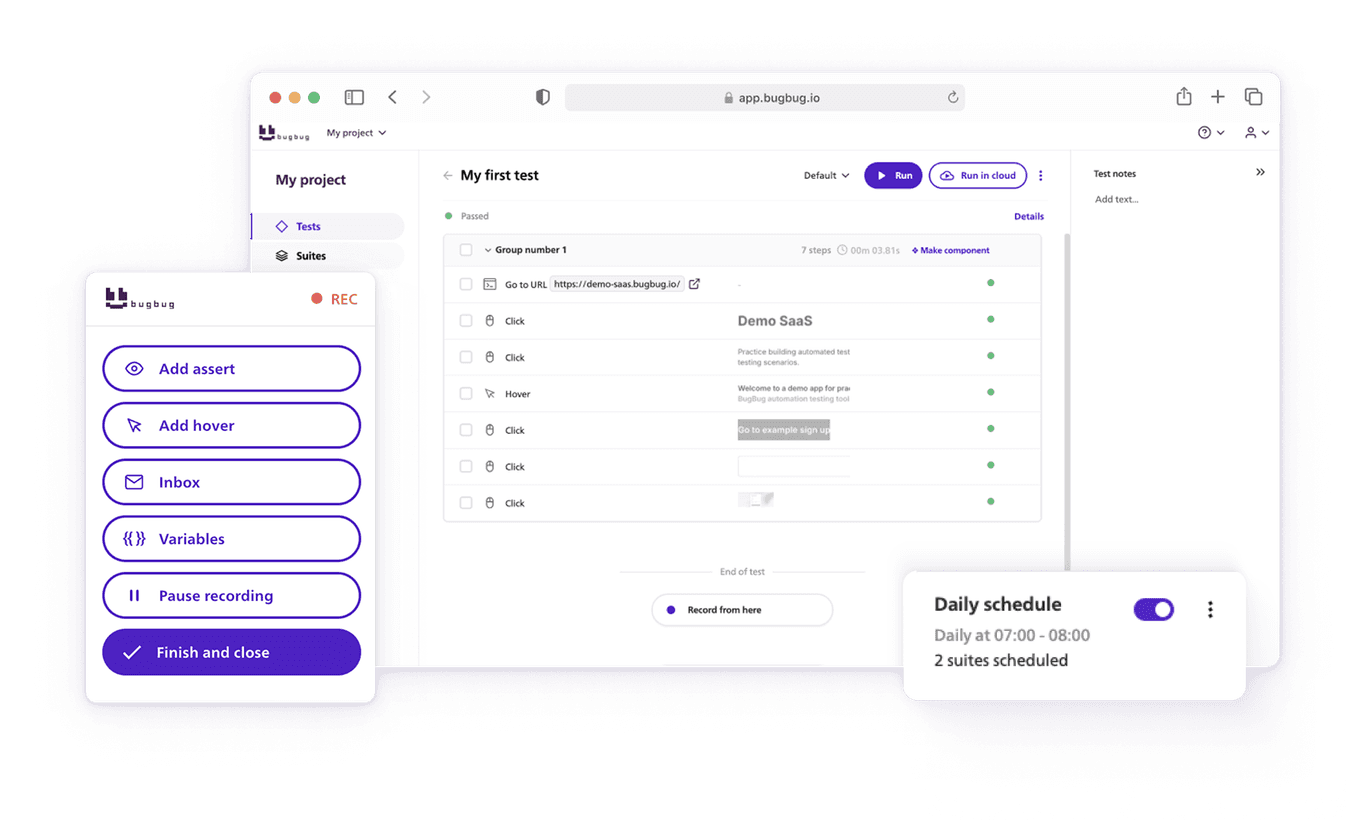

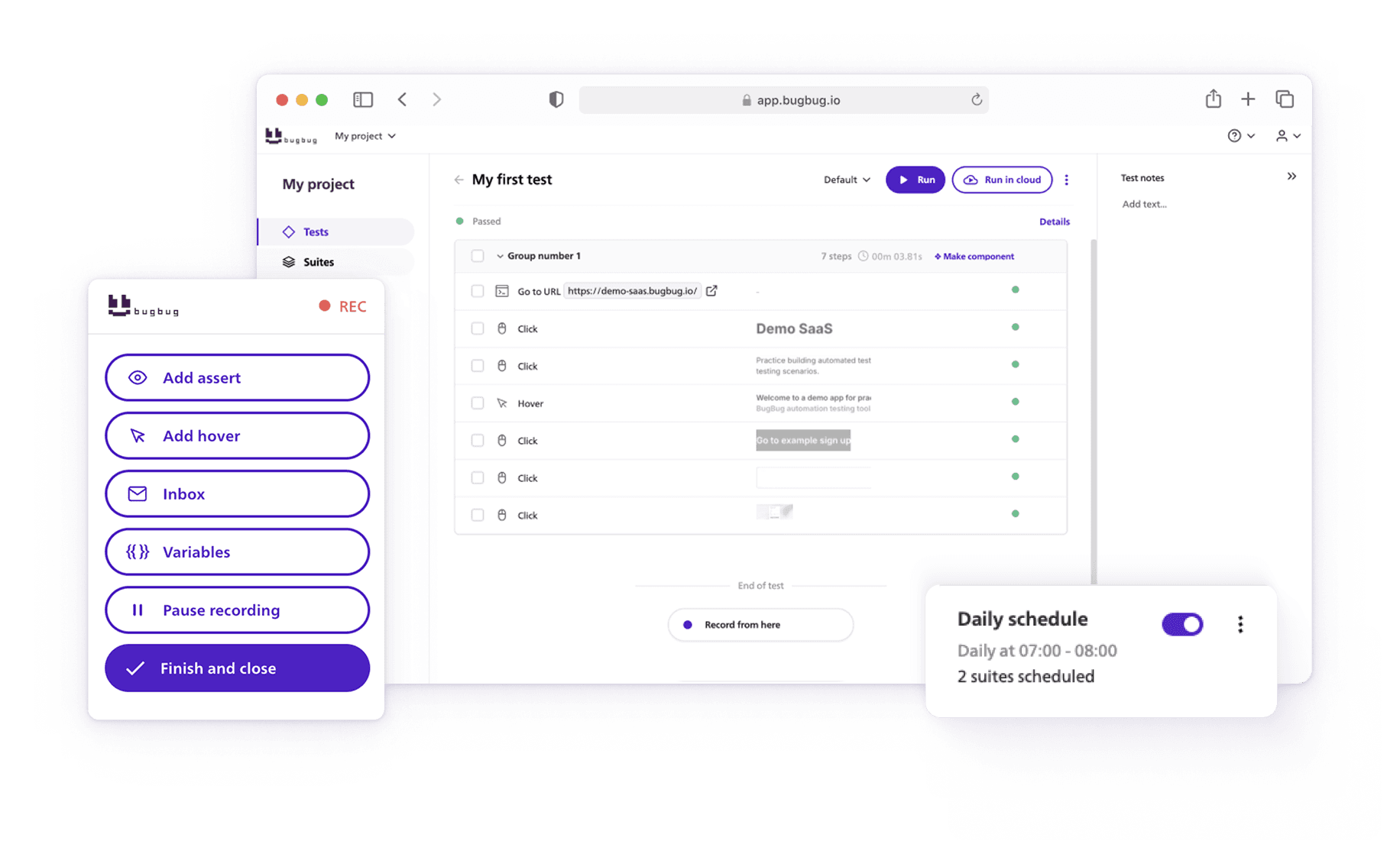

- Lightweight tools enable continuous testing. Low-code automation platforms like BugBug help teams maintain agility, scalability, and sustainable test pipelines.

👉 Also check: Test Plan vs Test Strategy

Why Software Testing Strategies Must Evolve in 2025

By the end of this article, you’ll understand what defines a future-proof testing strategy, which techniques are worth prioritizing in 2025, and how to evolve your QA process to match the speed of software development. A well-structured test strategy is the foundation for all of this: it lets your team respond to rapid change while maintaining high product quality.

The Shift in Software Testing Priorities

Software testing strategies are evolving alongside how teams build and release products. The industry has moved past “QA as a phase.” In 2025, quality is everyone’s responsibility, and testing happens continuously—before, during, and after deployment.

Here are the major shifts shaping modern testing strategies:

- From QA silos to cross-functional ownership. Developers, testers, and PMs collaborate directly on quality goals. This reduces communication bottlenecks and ensures faster feedback. It also means the whole team, not just QA, has to be ready to absorb rapid changes and new testing demands.

- From late-stage testing to shift-left and shift-right approaches. Testing begins earlier in development (shift-left) through unit and API checks, and extends into production monitoring (shift-right) to validate real-world performance and user experience.

- From quantity to quality of automation. Teams are moving away from automating everything to automating what’s stable, repeatable, and high-impact. The goal isn’t just more tests—it’s reliable, meaningful ones.

- From manual QA to human insight. Manual testing isn’t disappearing; it’s becoming more focused. Exploratory testing, usability reviews, and creative problem discovery still rely on human intuition.

Continuous testing, observability, and smarter automation are the cornerstones of this evolution. For lean teams, the priority is building a pipeline that delivers fast, trustworthy feedback without burning engineering hours on test maintenance. That’s why low-code tools like BugBug are gaining traction—they simplify test automation while maintaining flexibility for developers.

👉 Check also: How to Create a Test Plan?

Core Testing Strategies to Adopt in 2026

Building an effective testing strategy in 2026 means more than choosing the right tools — it’s about creating a balanced, data-driven process that aligns testing with business goals. The best QA teams don’t chase automation coverage for its own sake. They focus on risk reduction, speed, and feedback quality.

Thorough preparation underpins all of these strategies: teams that know their objectives before they start can approach testing with confidence.

Below are the strategies that define modern, high-performing testing organizations.

Automation-First, but Not Automation-Only

Automation remains the backbone of fast delivery. But 2026 teams are learning to automate strategically — focusing on stable, repetitive user flows and critical business logic.

Regression testing, smoke testing, and sanity checks are prime candidates for automation, freeing testers to focus on creative, exploratory work.

The challenge is maintaining automation at scale. Frameworks often become brittle, slowing teams down. That’s why many startups and QA teams are turning to codeless test automation platforms like BugBug. They eliminate boilerplate scripting, reduce maintenance overhead, and integrate seamlessly with CI/CD pipelines — making “continuous testing” realistic even for small teams.

Exploratory and Risk-Based Testing

Exploratory testing remains one of the most valuable QA activities — especially as AI and automation handle more of the repetitive load. In 2025, testers are pairing risk-based prioritization with exploratory sessions: identifying the highest-risk user paths and targeting them for manual exploration.

Effective exploratory testing depends on testers trusting their own instincts and judgment. Confident testers are better at spotting hidden usability issues, flaky behavior, and integration bugs that automated scripts miss. The result is a smarter allocation of time: automation ensures consistency, while exploratory testing ensures insight.
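To make risk-based prioritization concrete, here is a minimal sketch that ranks user paths by a simple risk score. The scoring model (likelihood times impact on a 1 to 5 scale), the `UserPath` type, and the sample paths are all illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserPath:
    """Hypothetical record for one user path; both scores are team estimates."""
    name: str
    failure_likelihood: int  # 1 (rarely breaks) to 5 (breaks often)
    business_impact: int     # 1 (cosmetic) to 5 (revenue-blocking)

    @property
    def risk_score(self) -> int:
        # Assumed model: risk = likelihood x impact
        return self.failure_likelihood * self.business_impact


def pick_exploratory_targets(paths, limit):
    """Return the `limit` highest-risk paths, highest risk first."""
    return sorted(paths, key=lambda p: p.risk_score, reverse=True)[:limit]


paths = [
    UserPath("checkout", failure_likelihood=3, business_impact=5),
    UserPath("profile settings", failure_likelihood=2, business_impact=2),
    UserPath("signup", failure_likelihood=4, business_impact=4),
]
targets = pick_exploratory_targets(paths, limit=2)
print([p.name for p in targets])  # ['signup', 'checkout']
```

The top of this list is where a time-boxed exploratory session is likely to pay off first; the scores themselves are only as good as the team's estimates.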

Performance and Security as Continuous Practices

Performance and security testing are no longer optional checkpoints. They are embedded into every sprint, using lightweight, continuous tools that simulate load, track resource consumption, and detect regressions before they hit production.

For web applications, monitoring memory leaks, load times, and response degradation during each build is now standard. Likewise, automated security scans in CI/CD pipelines ensure early detection of vulnerabilities before deployment.
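As a rough illustration of a per-build performance check, the sketch below compares median response times from the current build against a stored baseline and flags endpoints that slowed down beyond a tolerance. The function name, the 20% tolerance, and the sample timings are assumptions made for the example.

```python
from statistics import median


def find_regressions(baseline_ms, current_ms, tolerance=0.20):
    """Return {endpoint: (baseline_median, current_median)} for endpoints
    whose median latency grew by more than `tolerance` (0.20 = 20%)."""
    regressions = {}
    for endpoint, samples in current_ms.items():
        if endpoint not in baseline_ms:
            continue  # new endpoint: nothing to compare against yet
        base = median(baseline_ms[endpoint])
        now = median(samples)
        if now > base * (1 + tolerance):
            regressions[endpoint] = (base, now)
    return regressions


# Hypothetical response times in milliseconds
baseline = {"/login": [110, 120, 130], "/search": [200, 210, 220]}
current = {"/login": [115, 125, 128], "/search": [260, 270, 300]}

regressions = find_regressions(baseline, current)
print(regressions)  # {'/search': (210, 270)}
```

A CI job could fail the build when the returned dictionary is non-empty. Medians are used here because they are less sensitive to a single slow sample than means.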

Data-Driven Test Optimization

The rise of analytics in QA means teams can now track which tests matter most.

By analyzing metrics like test flakiness, defect density, and user journey frequency, testers can identify gaps and optimize coverage based on actual risk and usage patterns.

In practice, this means pruning redundant tests, focusing automation on high-value areas, and leveraging dashboards (such as those available in BugBug) to visualize testing trends and bottlenecks.
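One possible way to shortlist tests for pruning is sketched below: flag tests that caught no real defects in the review window and that cover rarely used journeys. The `SuiteEntry` fields, the 1% usage threshold, and the sample data are hypothetical; a real team would choose its own signals and review the list by hand before deleting anything.

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """Hypothetical per-test statistics gathered over a review window."""
    name: str
    defects_caught: int   # real defects this test exposed in the window
    journey_share: float  # fraction of user sessions touching the covered journey


def pruning_candidates(entries, min_journey_share=0.01):
    """Names of tests that caught nothing and cover rarely used journeys."""
    return [
        e.name
        for e in entries
        if e.defects_caught == 0 and e.journey_share < min_journey_share
    ]


suite = [
    SuiteEntry("checkout_happy_path", defects_caught=4, journey_share=0.35),
    SuiteEntry("legacy_csv_export", defects_caught=0, journey_share=0.002),
    SuiteEntry("login", defects_caught=0, journey_share=0.90),
]
candidates = pruning_candidates(suite)
print(candidates)  # ['legacy_csv_export']
```

Note that `login` is kept even though it caught nothing: it guards a journey almost every user takes, so its value lies in the risk it covers.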

👉 Check also: Testing Strategies for Startups

Common Pitfalls to Avoid

Building an effective software testing strategy requires discipline, preparation, and self-awareness, much like preparing for a major exam. Just as successful students skim the entire test before starting so they understand its scope and can plan their approach, teams should review the complete testing requirements before execution.

Teams that rush through the testing process without structure often fall into the same traps students do when facing standardized tests: overconfidence, poor time management, and lack of focus on what truly matters.

Here are the most common mistakes that derail testing efforts — and how to avoid them.

Skipping the Preparation Phase

Just as good exam performance starts with preparation, effective QA begins with a solid understanding of your functional requirements, test environment, and end users. Jumping straight into automation without clear objectives leads to confusion and wasted effort later.

Teams should:

- Review study guides (in QA, this means specs and acceptance criteria).

- Do the homework: review previous test cases and related documentation.

- Practice with sample or practice tests (trial runs of scripts).

- Identify what “success” looks like before the test starts.

Skipping this phase is like arriving at an exam without knowing the types of tests you’ll face.

Rushing Through the Testing Process

Whether you’re taking a multiple choice exam or validating a software application, speed without precision hurts accuracy. In both cases, it’s important to pay close attention to details. A missed login button test case or an unchecked box on an answer sheet can completely skew results.

Give your team a few minutes to double-check before marking a test case as complete, and make sure every case has actually been executed before sign-off so no critical item is missed. A well-paced approach leaves time to review results, fix errors, and confirm the correct answer or, in QA terms, the expected behavior.

👉 Check also: How to Test Ecommerce Websites?

Treating All Tests the Same

Not all exams are multiple choice questions or essay questions — and not all QA activities are performance tests or functional ones.

Each requires a different testing approach.

For example:

- Use automation for repetitive, standardized tests like regression or smoke checks. These are the multiple choice questions of QA: careful, systematic checks against known answers.

- Apply exploratory testing to the short answer questions, where human intuition identifies gaps automation can’t.

- Run performance tests to validate scalability under load. This is the essay portion of QA, requiring deeper thought and thorough testing.

Understanding the different types of tests ensures your testing strategy mirrors real user behavior instead of checking boxes mechanically.

Ignoring Stress and Burnout

Just like students experience test anxiety, QA engineers can feel pressure during long release cycles or when dealing with flaky tests.

Fatigue leads to missed bugs, skipped cases, and poor decisions.

Encourage testers to manage stress throughout the cycle:

- Take a few minutes to rest during long runs.

- Drink plenty of water and maintain focus.

- Replace negative thoughts (“this build always fails”) with positive ones (“this is the next test we’ll stabilize”).

Healthy teams deliver higher-quality results — because mental clarity matters as much as technical skill.

Neglecting Review and Feedback

Strong testers, like strong students, always double-check their work.

After each test day (or sprint), teams should review failed cases, analyze logs, and determine whether issues stem from the app or the environment.

It’s like checking an answer sheet before submission: one last scan can catch a missing case or an incorrect configuration.

Even a partly understood failure, if spotted early, earns partial credit by saving hours of debugging and rework later.

👉 Check also: Codeless Automation Testing Tools

Focusing on Output, Not Understanding

Some testers fall into the trap of “finishing tests” rather than understanding the concepts behind them. Just as students who memorize without comprehension struggle on pop quizzes, testers who focus only on coverage metrics miss important information about product behavior and overall user experience.

To prepare effectively:

- Study your app like a subject.

- Connect individual units (modules, APIs, UI components) to their role in the user journey.

- Write down key facts, data, or explanations from each test to reinforce understanding and retention.

- Use each run to explain not just what failed, but why.

When QA understands the reasoning behind each exam question — or test case — the entire process becomes more meaningful and resilient.

Metrics That Matter in 2025

As QA practices mature, what teams choose to measure says everything about their testing strategy.

In 2025, successful testing organizations are moving beyond vanity QA metrics like “number of tests executed” or “total coverage.” Instead, they’re focusing on metrics that reflect real-world product reliability, team efficiency, and user experience.

The goal is no longer to prove testing is happening — it’s to prove testing is working.

Test Effectiveness over Test Volume

A high number of test cases doesn’t necessarily mean better quality.

Modern QA teams focus on test effectiveness — how many defects are caught early, how often tests fail for valid reasons, and whether those failures lead to meaningful fixes.

To measure test effectiveness:

- Track defect detection rate.

- Monitor false positive frequency.

- Evaluate defect escape rate.
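The three measures above can be computed from a handful of counts. The definitions in this sketch are common conventions rather than the only valid ones, and the function names and sample numbers are assumptions for illustration.

```python
def defect_detection_rate(found_before_release, escaped_to_production):
    """Share of all known defects that testing caught before release."""
    total = found_before_release + escaped_to_production
    return found_before_release / total if total else 0.0


def defect_escape_rate(found_before_release, escaped_to_production):
    """Share of all known defects that reached production."""
    total = found_before_release + escaped_to_production
    return escaped_to_production / total if total else 0.0


def false_positive_rate(false_failures, total_failures):
    """Share of test failures that did not point to a real defect."""
    return false_failures / total_failures if total_failures else 0.0


# Hypothetical numbers for one release
ddr = defect_detection_rate(found_before_release=45, escaped_to_production=5)
escape = defect_escape_rate(found_before_release=45, escaped_to_production=5)
fp = false_positive_rate(false_failures=6, total_failures=30)

print(f"detection {ddr:.0%}, escape {escape:.0%}, false positives {fp:.0%}")
# detection 90%, escape 10%, false positives 20%
```

Detection and escape rate are complements under these definitions, so tracking one is enough; the false positive rate is the separate signal that tells you how much of the suite's noise is wasting triage time.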

A smaller, more reliable suite of high-quality tests is far more valuable than a bloated one that slows down pipelines.

Mean Time to Detect (MTTD) and Mean Time to Fix (MTTF)

Speed matters.

In a continuous delivery environment, the faster a defect is identified and fixed, the lower the cost of failure.

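These two metrics, MTTD and MTTF, capture how effectively your testing feedback loop performs. Note that MTTF more commonly stands for mean time to failure; many teams report fix time as MTTR (mean time to repair or resolve) instead.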
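Both averages fall out of three timestamps per defect. The sketch below assumes detection time is measured from when the defect was introduced (for example, the merge time of the offending change) and fix time from detection to fix; the `Defect` type and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Defect:
    """Hypothetical defect record with the three timestamps we need."""
    introduced: datetime  # e.g. when the offending change was merged
    detected: datetime    # when a test or monitor first flagged it
    fixed: datetime       # when the fix shipped


def mean_duration(durations):
    """Average a non-empty list of timedeltas."""
    return sum(durations, timedelta()) / len(durations)


def mean_time_to_detect(defects):
    return mean_duration([d.detected - d.introduced for d in defects])


def mean_time_to_fix(defects):
    return mean_duration([d.fixed - d.detected for d in defects])


day = datetime(2025, 3, 3)
defects = [
    Defect(day.replace(hour=9), day.replace(hour=10), day.replace(hour=13)),
    Defect(day.replace(hour=9), day.replace(hour=12), day.replace(hour=17)),
]

mttd = mean_time_to_detect(defects)
fix_time = mean_time_to_fix(defects)
print(mttd, fix_time)  # 2:00:00 4:00:00
```

Means are easily skewed by a single long-running defect, so some teams may prefer to report a median or percentile alongside them.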

Tools like BugBug, with its quick, visual debugging and integrations with CI systems, help QA teams cut MTTD by surfacing issues immediately after each run.

Automation Stability and Maintenance Cost

Automated testing is only valuable if it’s stable and sustainable.

That’s why teams are increasingly tracking:

- Flakiness rate

- Maintenance time per test

- Execution reliability
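Flakiness rate can be defined in several ways. This sketch uses one common convention as an assumption: a test counts as flaky if it both passed and failed against the same commit, since the code under test did not change between those runs. The run tuples and test names are made up for the example.

```python
from collections import defaultdict


def flakiness_rate(runs):
    """Fraction of tests that both passed and failed on an identical commit.

    `runs` is an iterable of (test_name, commit_sha, passed) tuples.
    """
    outcomes = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)

    all_tests = {name for name, _ in outcomes}
    flaky_tests = {name for (name, _), seen in outcomes.items() if len(seen) == 2}
    return len(flaky_tests) / len(all_tests) if all_tests else 0.0


# Hypothetical run history
runs = [
    ("login", "abc123", True),
    ("login", "abc123", False),    # same commit, different result: flaky
    ("checkout", "abc123", True),
    ("checkout", "abc123", True),
    ("search", "abc123", False),
    ("search", "def456", True),    # result changed with the code: not flaky
    ("signup", "abc123", True),
]
rate = flakiness_rate(runs)
print(rate)  # 0.25
```

Keying on the commit keeps genuine fixes and regressions out of the flaky bucket, at the cost of missing flakiness in tests that only ran once per commit.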

Low-code tools like BugBug drastically reduce maintenance costs because visual tests are easier to update when the UI changes — helping teams achieve sustainable automation at scale.

Quality Metrics that Link to Business Impact

Testing isn’t just a technical process — it’s a business enabler.

Forward-thinking QA teams are correlating testing outcomes with user and product KPIs, such as:

- Conversion rates

- Crash-free sessions

- Customer support volume

- Retention or churn

By linking quality data to business performance, QA earns a stronger voice in product decisions.
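A first pass at linking quality data to a business KPI can be as simple as a correlation between two per-release series. The sketch below computes a Pearson coefficient by hand; the series (escaped defects and support tickets per release) are invented for illustration, and a strong correlation is a prompt for investigation, not proof of cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical per-release data
escaped_defects = [1, 2, 3, 4, 5]
support_tickets = [12, 15, 21, 24, 30]

r = pearson(escaped_defects, support_tickets)
print(round(r, 2))  # 0.99
```

With only a handful of releases the coefficient is fragile, so it is worth recomputing as more data accumulates before bringing it into product discussions.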

Continuous Measurement and Adaptation

No metric stays relevant forever.

As your product and architecture evolve, so should your quality KPIs.

Teams that succeed in 2025 treat metrics as living systems — reviewed, refined, and shared transparently across roles.

Conclusion

Modern QA teams must think strategically about testing. The focus is shifting from running more tests to running the right tests at the right time. Effective testing strategies now blend automation, exploratory analysis, and observability into a unified process that supports fast iteration without compromising quality.

Startups and small teams, in particular, are rethinking their tooling choices. They need affordable, low-maintenance automation that integrates easily into CI/CD pipelines and doesn’t require deep coding expertise. This is where modern, lightweight tools like BugBug make a difference—allowing testers and developers alike to record, run, and maintain browser tests effortlessly.

Happy (automated) testing